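A recurrent neural network (RNN) is a deep learning network structure that uses information from the past to improve the network's performance on current and future inputs. What makes RNNs unique is that the network contains a hidden state and loops. The looping structure allows the network to store past information in the hidden state and to operate on sequences.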

These features of recurrent neural networks make them well suited for solving a variety of problems with sequential data of varying length, such as:

- Natural language processing

- Signal classification

- Video analysis

Unrolling a single cell of an RNN, showing how information moves through the network for a data sequence. Inputs are acted on by the hidden state of the cell to produce the output, and the hidden state is passed to the next time step.

How does the RNN know how to apply the past information to the current input? The network has two sets of weights, one for the hidden state vector and one for the inputs. During training, the network learns weights for both the inputs and the hidden state. When implemented, the output is based on the current input, as well as the hidden state, which is based on previous inputs.
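The update described above can be sketched in a few lines of plain Python. This is a minimal illustration, not MATLAB's implementation: the names `rnn_step`, `run_rnn`, `W_x`, `W_h`, and `b`, the `tanh` activation, and the toy weight values are all assumptions chosen for the example, and the hidden state is used directly as the output (a separate output layer is omitted).

```python
import math

def rnn_step(x, h_prev, W_x, W_h, b):
    """One time step of a simple RNN cell (hypothetical helper).

    W_x holds the input weights, W_h the hidden-state weights.
    """
    h_new = []
    for i in range(len(b)):
        total = b[i]
        total += sum(W_x[i][j] * x[j] for j in range(len(x)))            # current input
        total += sum(W_h[i][j] * h_prev[j] for j in range(len(h_prev)))  # past information
        h_new.append(math.tanh(total))
    return h_new

def run_rnn(sequence, W_x, W_h, b):
    """Apply the same cell to every element of a sequence, carrying the hidden state."""
    h = [0.0] * len(b)  # initial hidden state
    states = []
    for x in sequence:
        h = rnn_step(x, h, W_x, W_h, b)
        states.append(h)
    return states

# Toy weights (assumed values): 1 input feature, 2 hidden units.
W_x = [[0.5], [-0.3]]
W_h = [[0.1, 0.2], [0.0, 0.4]]
b = [0.0, 0.0]

# Only the first input is nonzero; later states are still nonzero
# because the hidden state carries the earlier input forward.
states = run_rnn([[1.0], [0.0], [0.0]], W_x, W_h, b)
```

Because the same two weight matrices are reused at every step, the sequence can be any length without changing the number of learned parameters.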

LSTM

In practice, simple RNNs experience a problem with learning longer-term dependencies. RNNs are commonly trained through backpropagation, where they can experience either a "vanishing" or an "exploding" gradient problem. These problems cause the network weights to become either very small or very large, which limits how effectively the network can learn long-term relationships.
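A rough way to see why this happens is to track how a gradient is scaled as it is passed back through many time steps. The sketch below assumes a deliberately simplified scalar, linear recurrence in which each step multiplies the gradient by the same recurrent weight; the function name `gradient_through_time` and the weight values are illustrative assumptions, and a real network also includes activation derivatives and matrix-valued weights.

```python
def gradient_through_time(w_h, steps):
    """Scale factor applied to a gradient after `steps` time steps
    of a scalar linear recurrence h_t = w_h * h_{t-1} (simplifying assumption)."""
    grad = 1.0
    for _ in range(steps):
        grad *= w_h
    return grad

# A recurrent weight below 1 shrinks the gradient toward zero (vanishing);
# a recurrent weight above 1 blows it up (exploding).
small = gradient_through_time(0.5, 50)
large = gradient_through_time(1.5, 50)
```

After 50 steps, `small` is many orders of magnitude below 1 and `large` is many orders of magnitude above it, which is why early inputs in a long sequence are hard for a simple RNN to learn from.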

A special type of recurrent neural network that overcomes this problem is the long short-term memory (LSTM) network. LSTM networks use additional gates to control what information in the hidden cell makes it to the output and the next hidden state. This allows the network to learn long-term relationships in the data more effectively. LSTMs are a commonly implemented type of RNN.

Comparison of RNN (left) and LSTM network (right)
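To make the role of the gates concrete, here is a minimal sketch of one LSTM time step using the commonly described forget, input, and output gates. It is scalar-valued for readability and is not MATLAB's implementation; the function names and the parameter dictionary keys (`wf`, `uf`, `bf`, and so on) are hypothetical names chosen for this example.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x, h_prev, c_prev, p):
    """One scalar LSTM step. `p` is a dict of weights (hypothetical key names)."""
    f = sigmoid(p["wf"] * x + p["uf"] * h_prev + p["bf"])    # forget gate: how much old cell state to keep
    i = sigmoid(p["wi"] * x + p["ui"] * h_prev + p["bi"])    # input gate: how much new information to write
    o = sigmoid(p["wo"] * x + p["uo"] * h_prev + p["bo"])    # output gate: how much cell state to expose
    g = math.tanh(p["wg"] * x + p["ug"] * h_prev + p["bg"])  # candidate cell update
    c = f * c_prev + i * g   # new cell state
    h = o * math.tanh(c)     # new hidden state (also the output here)
    return h, c

# Assumed parameters: forget gate held open, input gate held shut,
# so the cell state passes through the step almost unchanged.
keep = dict(wf=0.0, uf=0.0, bf=20.0,
            wi=0.0, ui=0.0, bi=-20.0,
            wo=0.0, uo=0.0, bo=0.0,
            wg=0.0, ug=0.0, bg=0.0)
h, c = lstm_step(x=1.0, h_prev=0.0, c_prev=0.7, p=keep)
```

The additive cell-state update `c = f * c_prev + i * g` is what lets information survive across many steps when the forget gate stays close to 1.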

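MATLAB® has a full set of features and functionality for training and implementing LSTM networks with text, image, signal, and time series data. The next sections explore applications of RNNs and some examples using MATLAB.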

Applications of RNNs

Natural Language Processing

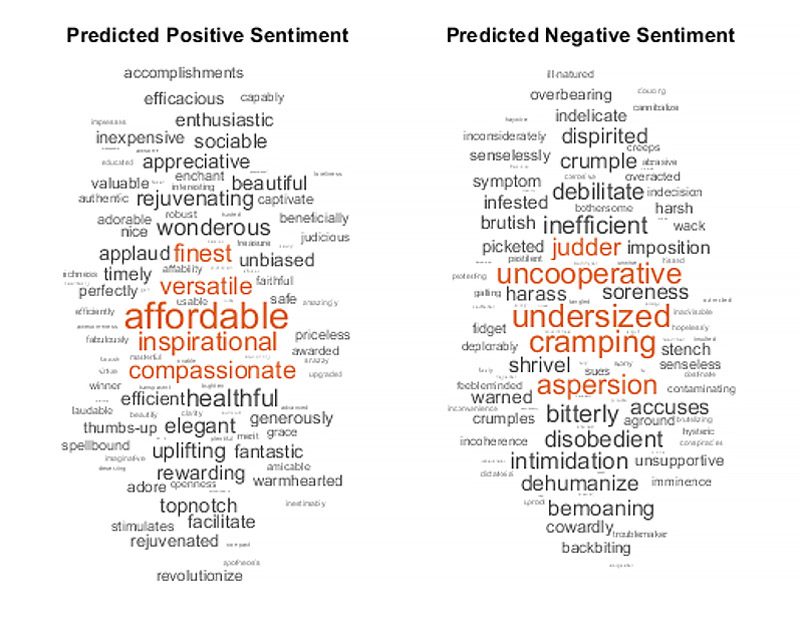

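Language is naturally sequential, and pieces of text vary in length. This makes RNNs a great tool for solving problems in this area, because they can learn to contextualize words in a sentence. One example is sentiment analysis, a method for categorizing the meaning of words and phrases. Machine translation, or the use of an algorithm to translate between languages, is another common application. Words first need to be converted from text data into numeric sequences. An effective way of doing this is a word embedding layer. Word embeddings map words into numeric vectors. The example below uses word embeddings to train a word sentiment classifier, displaying the results with the MATLAB wordcloud function.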

Sentiment analysis results in MATLAB. The word cloud displays the results of the training process so the classifier can determine the sentiment of new groups of text.
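The text-to-numbers step described above can be sketched as a vocabulary lookup followed by an embedding table lookup. This is an illustrative stand-in, not the MATLAB word embedding layer: the helper names, the toy corpus, and the randomly initialized table are assumptions, and in a real network the embedding values would be learned during training or loaded from a pretrained embedding.

```python
import random

def build_vocab(sentences):
    """Assign each distinct word an integer index; index 0 is reserved for unknown words."""
    vocab = {"<unk>": 0}
    for s in sentences:
        for w in s.lower().split():
            vocab.setdefault(w, len(vocab))
    return vocab

def make_embedding(vocab_size, dim, seed=0):
    """Embedding table with small random values (placeholder for learned weights)."""
    rng = random.Random(seed)
    return [[rng.uniform(-0.1, 0.1) for _ in range(dim)] for _ in range(vocab_size)]

def embed(sentence, vocab, table):
    """Convert a sentence into a sequence of numeric vectors, one per word."""
    ids = [vocab.get(w, 0) for w in sentence.lower().split()]
    return [table[i] for i in ids]

corpus = ["the motor failed", "the pump works"]   # toy data, made up for illustration
vocab = build_vocab(corpus)
table = make_embedding(len(vocab), dim=4)

# "leaks" is not in the vocabulary, so it maps to the <unk> vector.
vectors = embed("the motor leaks", vocab, table)
```

The resulting list of vectors has one entry per word, so sentences of different lengths become numeric sequences of different lengths, which is the form an RNN consumes.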

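In another classification example, MATLAB uses RNNs to classify text data to determine the type of manufacturing failure. MATLAB is also used in a machine translation example to train a network to understand Roman numerals.

Signal Classification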

Signals are another example of naturally sequential data, as they are often collected from sensors over time. It is useful to classify signals automatically, as this can decrease the manual time needed for large datasets or allow classification in real time. Raw signal data can be fed into deep networks or preprocessed to focus on other features, such as frequency components. Feature extraction can greatly improve network performance, as in an example with electrical heart signals. Below is an example using raw signal data in an RNN.

Classifying sensor data with an LSTM in MATLAB.
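As one illustration of preprocessing a signal to focus on frequency components, the sketch below splits a signal into fixed-length frames and computes the magnitude of a discrete Fourier transform for each frame. The function names, the frame length, and the synthetic sine wave are assumptions made for this example; it is not the feature extraction used in the MATLAB examples mentioned above.

```python
import cmath
import math

def dft_magnitudes(frame):
    """Magnitude of each non-negative frequency bin of a real-valued frame."""
    n = len(frame)
    mags = []
    for k in range(n // 2 + 1):
        s = sum(frame[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        mags.append(abs(s) / n)
    return mags

def frame_features(signal, frame_len):
    """Split a signal into non-overlapping frames and return one feature vector per frame."""
    return [dft_magnitudes(signal[i:i + frame_len])
            for i in range(0, len(signal) - frame_len + 1, frame_len)]

# Synthetic signal (made up): a sine wave with 4 cycles per 32 samples.
signal = [math.sin(2 * math.pi * 4 * t / 32) for t in range(64)]

# Each frame becomes a 17-value spectrum; the sequence of spectra
# is what would be passed to the RNN instead of the raw samples.
features = frame_features(signal, 32)
```

For this synthetic input, the energy of every frame concentrates in frequency bin 4, so each time step the network sees is a compact description of which frequencies are present.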

Video Analysis

RNNs work well for videos because videos are essentially a sequence of images. Similar to working with signals, it helps to do feature extraction before feeding the sequence into the RNN. In this example, a pretrained GoogLeNet model (a convolutional neural network) is used for feature extraction on each frame. You can see the network architecture below.

Basic architecture for classifying video using an LSTM.