RL Agent

Reinforcement learning agent

- Library:

Reinforcement Learning Toolbox

Description

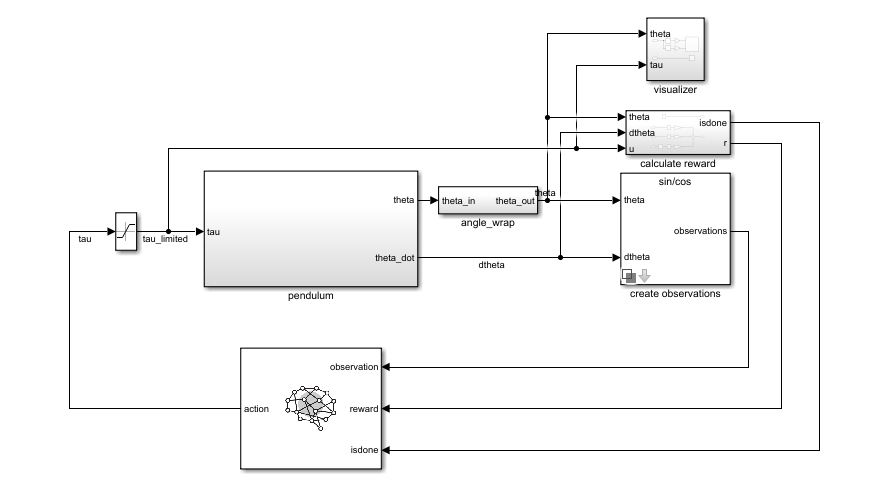

Use the RL Agent block to simulate and train a reinforcement learning agent in Simulink®. You associate the block with an agent stored in the MATLAB® workspace or a data dictionary as an agent object, such as an rlACAgent or rlDDPGAgent object. You connect the block so that it receives an observation and a computed reward. For instance, consider the following block diagram of the rlSimplePendulumModel model.

The observation input port of the RL Agent block receives a signal that is derived from the instantaneous angle and angular velocity of the pendulum. The reward port receives a reward calculated from the same two values and the applied action. You configure the observations and reward computations that are appropriate to your system.
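As an illustration of what "derived from the angle and angular velocity" can mean, here is a minimal MATLAB sketch of an observation vector and a reward for a pendulum. The function handle names (`observationFcn`, `rewardFcn`), the choice of observation components, and the penalty weights are assumptions made for this example. In the model itself, these computations are implemented with Simulink blocks and may differ.

```matlab
% Hypothetical helpers for illustration only; not part of any toolbox.
% theta  : pendulum angle in radians (assumed 0 = upright, pi = hanging down)
% dtheta : angular velocity in rad/s
% u      : applied torque (the action) in N*m

% Observation: encode the angle as sine and cosine so that the signal is
% continuous across the wrap-around, then append the angular velocity.
observationFcn = @(theta, dtheta) [sin(theta); cos(theta); dtheta];

% Reward: a quadratic penalty on angle, angular velocity, and control
% effort. The weights 1, 0.1, and 0.001 are assumed values.
rewardFcn = @(theta, dtheta, u) -(theta^2 + 0.1*dtheta^2 + 0.001*u^2);

% Example: pendulum hanging straight down, at rest, with no torque applied.
theta  = pi;
dtheta = 0;
u      = 0;

obs = observationFcn(theta, dtheta);   % 3-by-1 column vector
r   = rewardFcn(theta, dtheta, u);     % equals -pi^2 for these inputs
```

With a reward of this form, the largest possible value is zero, reached only when the pendulum is upright, at rest, and no torque is applied.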

The block uses the agent to generate an action based on the observation and reward you provide. Connect the action output port to the appropriate input for your system. For instance, in the rlSimplePendulumModel model, the action port is a torque applied to the pendulum system. For more information about this model, see Train DQN Agent to Swing Up and Balance Pendulum.

To train a reinforcement learning agent in Simulink, you generate an environment from the Simulink model. You then create and configure the agent for training against that environment. For more information, see Create Simulink Environments for Reinforcement Learning. When you call train using the environment, train simulates the model and updates the agent associated with the block.
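The workflow in the preceding paragraph might look like the following sketch. It uses the Reinforcement Learning Toolbox functions rlNumericSpec, rlFiniteSetSpec, rlSimulinkEnv, rlDQNAgent, rlTrainingOptions, and train. The block path `rlSimplePendulumModel/RL Agent`, the 3-by-1 observation size, the torque values, and the training limits are assumptions chosen for illustration, so adjust them to match your own model. Running it requires Simulink and Reinforcement Learning Toolbox.

```matlab
% Open the model and identify the RL Agent block (block path is assumed).
mdl = 'rlSimplePendulumModel';
open_system(mdl)
agentBlk = [mdl '/RL Agent'];

% Describe the signals at the block ports (sizes and values are assumed).
obsInfo = rlNumericSpec([3 1]);          % observation signal: 3-by-1 vector
obsInfo.Name = 'observations';
actInfo = rlFiniteSetSpec([-2 0 2]);     % action signal: discrete torques
actInfo.Name = 'torque';

% Generate an environment from the Simulink model.
env = rlSimulinkEnv(mdl, agentBlk, obsInfo, actInfo);

% Create an agent object in the MATLAB workspace. This creates a DQN agent
% with default networks; other agent types are created in a similar way.
agent = rlDQNAgent(obsInfo, actInfo);

% Configure training (placeholder limits, not tuned values).
trainOpts = rlTrainingOptions( ...
    'MaxEpisodes', 500, ...
    'MaxStepsPerEpisode', 500);

% train simulates the model and updates the agent.
trainingStats = train(agent, env, trainOpts);
```

A discrete action specification suits agents such as DQN. For agents that output continuous actions, such as rlDDPGAgent, the action would instead be described with rlNumericSpec.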