Train DQN Agent for Lane Keeping Assist Using Parallel Computing

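This example shows how to train a deep Q-learning network (DQN) agent for lane keeping assist (LKA) in Simulink® using parallel training. For an example that shows how to train the agent without using parallel training, see Train DQN Agent for Lane Keeping Assist.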

For more information on DQN agents, see Deep Q-Network Agents. For an example that trains a DQN agent in MATLAB®, see Train DQN Agent to Balance Cart-Pole System.

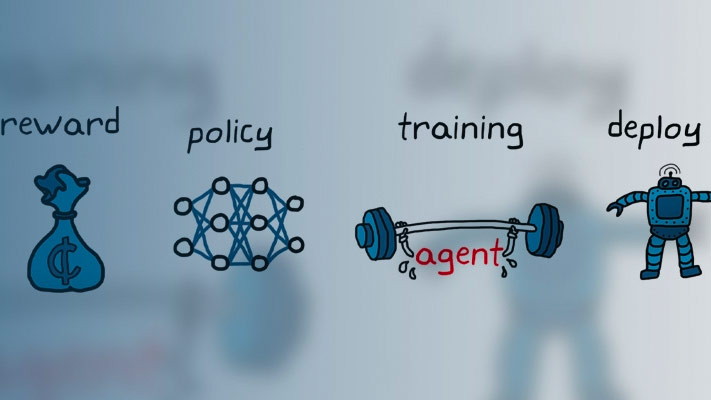

DQN Parallel Training Overview

In a DQN agent, each worker generates new experiences from its copy of the agent and the environment. After every N steps, the worker sends experiences to the host agent. The host agent updates its parameters as follows.

For asynchronous training, the host agent learns from received experiences without waiting for all workers to send experiences, and sends the updated parameters back to the worker that provided the experiences. Then, the worker continues to generate experiences from its environment using the updated parameters.

For synchronous training, the host agent waits to receive experiences from all of the workers and learns from these experiences. The host then sends updated parameters to all the workers at the same time. Then, all workers continue to generate experiences using the updated parameters.

Simulink Model for Ego Car

The reinforcement learning environment for this example is a simple bicycle model for ego vehicle dynamics. The training goal is to keep the ego vehicle traveling along the centerline of the lanes by adjusting the front steering angle. This example uses the same vehicle model as Train DQN Agent for Lane Keeping Assist.

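m = 1575;     % total vehicle mass (kg)
Iz = 2875;    % yaw moment of inertia (mNs^2)
lf = 1.2;     % longitudinal distance from center of gravity to front tires (m)
lr = 1.6;     % longitudinal distance from center of gravity to rear tires (m)
Cf = 19000;   % cornering stiffness of front tires (N/rad)
Cr = 33000;   % cornering stiffness of rear tires (N/rad)
Vx = 15;      % longitudinal velocity (m/s)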

Define the sample time Ts and simulation duration T in seconds.

Ts = 0.1;
T = 15;

The output of the LKA system is the front steering angle of the ego car. To simulate the physical steering limits of the ego car, constrain the steering angle to the range [–0.5,0.5] rad.

u_min = -0.5;
u_max = 0.5;
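As an illustration of what constraining the steering angle means, the following minimal sketch clamps a few commanded angles to the limits above. The command vector `uCmd` is hypothetical and is not part of the example model, which applies its limits inside Simulink.

```matlab
% Steering limits from this example (rad).
u_min = -0.5;
u_max = 0.5;

% Hypothetical commanded steering angles (rad), for illustration only.
uCmd = [-0.8 -0.5 0 0.3 0.7];

% Clamp each command to the interval [u_min, u_max].
uSat = min(max(uCmd, u_min), u_max);

disp(uSat)
```

Commands inside the range pass through unchanged, and commands outside it are replaced by the nearest limit.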

The curvature of the road is defined by a constant 0.001 (1/m). The initial value for the lateral deviation is 0.2 m and the initial value for the relative yaw angle is –0.1 rad.

rho = 0.001;
e1_initial = 0.2;
e2_initial = -0.1;

Open the model.

mdl = 'rlLKAMdl';
open_system(mdl)
agentblk = [mdl '/RL Agent'];

For this model:

The steering-angle action signal from the agent to the environment is from –15 degrees to 15 degrees.

The observations from the environment are the lateral deviation e1, the relative yaw angle e2, their derivatives, and their integrals.

The simulation is terminated when the lateral deviation

The reward, provided at every time step, is

where u is the control input from the previous time step.

Create Environment Interface

Create a reinforcement learning environment interface for the ego vehicle.

Define the observation information.

observationInfo = rlNumericSpec([6 1],'LowerLimit',-inf*ones(6,1),'UpperLimit',inf*ones(6,1));
observationInfo.Name = 'observations';
observationInfo.Description = 'information on lateral deviation and relative yaw angle';

Define the action information.

actionInfo = rlFiniteSetSpec((-15:15)*pi/180);
actionInfo.Name = 'steering';

Create the environment interface.

env = rlSimulinkEnv(mdl,agentblk,observationInfo,actionInfo);

The interface has a discrete action space where the agent can apply one of 31 possible steering angles from –15 degrees to 15 degrees. The observation is the six-dimensional vector containing lateral deviation, relative yaw angle, as well as their derivatives and integrals with respect to time.
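The action set described above can be checked numerically in base MATLAB. The sketch below rebuilds the same vector of angles, counts its elements, and confirms that every angle lies inside the steering limits defined earlier. The variable names `actions`, `numActions`, `stepDeg`, and `withinLimits` are chosen here for illustration only.

```matlab
% Rebuild the discrete steering-angle action set used in this example.
actions = (-15:15)*pi/180;          % candidate steering angles (rad)

numActions = numel(actions);        % number of discrete actions
stepDeg = diff(actions)*180/pi;     % spacing between neighboring angles (deg)

% Steering limits defined earlier in the example (rad).
u_min = -0.5;
u_max = 0.5;
withinLimits = all(actions >= u_min & actions <= u_max);

fprintf('%d actions from %.4f to %.4f rad, within limits: %d\n', ...
    numActions, actions(1), actions(end), double(withinLimits));
```

Because 15 degrees is about 0.26 rad, the whole action set sits well inside the [–0.5,0.5] rad steering range.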

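To define the initial condition for lateral deviation and relative yaw angle, specify an environment reset function using an anonymous function handle. localResetFcn, which is defined at the end of this example, randomizes the initial lateral deviation and relative yaw angle.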

env.ResetFcn = @(in)localResetFcn(in);

Fix the random generator seed for reproducibility.

rng(0)

Create DQN Agent

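DQN agents can use multi-output Q-value critic approximators, which are generally more efficient. A multi-output approximator has observations as inputs and state-action values as outputs. Each output element represents the expected cumulative long-term reward for taking the corresponding discrete action from the state indicated by the observation inputs.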
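To make the role of the output vector concrete, here is a minimal sketch of greedy action selection from a multi-output critic. The Q-values in `q` are made up for illustration and do not come from a trained network. In the example, the critic network would produce this vector from the six observations.

```matlab
% Discrete steering angles, one per critic output (rad).
actions = (-15:15)*pi/180;

% Hypothetical Q-values, one per action (illustration only).
% They are constructed to peak at a steering angle of +2 degrees.
q = -(actions - 2*pi/180).^2;

% Greedy choice: pick the action whose Q-value is largest.
[qBest, idx] = max(q);
greedyAction = actions(idx);

fprintf('Greedy steering angle: %.1f deg\n', greedyAction*180/pi);
```

A single forward pass yields the values for all 31 actions at once, which is why this form can be cheaper than evaluating a single-output critic once per action.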

To create the critic, first create a deep neural network with one input (the six-dimensional observed state) and one output vector with 31 elements (evenly spaced steering angles from –15 to 15 degrees). For more information on creating a deep neural network value function representation, see Create Policy and Value Function Representations.

nI = observationInfo.Dimension(1);  % number of inputs (6)
nL = 120;                           % number of neurons
nO = numel(actionInfo.Elements);    % number of outputs (31)

dnn = [
    featureInputLayer(nI,'Normalization','none','Name','state')
    fullyConnectedLayer(nL,'Name','fc1')
    reluLayer('Name','relu1')
    fullyConnectedLayer(nL,'Name','fc2')
    reluLayer('Name','relu2')
    fullyConnectedLayer(nO,'Name','fc3')];

View the network configuration.

figure
plot(layerGraph(dnn))

Specify options for the critic representation using rlRepresentationOptions.

criticOptions = rlRepresentationOptions('LearnRate',1e-4,'GradientThreshold',1,'L2RegularizationFactor',1e-4);

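Create the critic representation using the specified deep neural network and options. You must also specify the action and observation information for the critic, which you obtain from the environment interface. For more information, see rlQValueRepresentation.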

critic = rlQValueRepresentation(dnn,observationInfo,actionInfo,'Observation',{'state'},criticOptions);

To create the DQN agent, first specify the DQN agent options using rlDQNAgentOptions.

agentOpts = rlDQNAgentOptions(...
    'SampleTime',Ts,...
    'UseDoubleDQN',true,...
    'TargetSmoothFactor',1e-3,...
    'DiscountFactor',0.99,...
    'ExperienceBufferLength',1e6,...
    'MiniBatchSize',256);

agentOpts.EpsilonGreedyExploration.EpsilonDecay = 1e-4;
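To get a feel for the epsilon decay rate chosen above, the following sketch counts how many steps a multiplicative decay takes to reach its floor. Only the decay rate of 1e-4 comes from this example. The starting value of 1, the floor of 0.01, and the update rule epsilon = epsilon*(1 - decay) are assumptions made for illustration, so check them against the agent options in your release.

```matlab
% Assumed exploration settings (only epsilonDecay comes from this example).
epsilon = 1;            % assumed initial exploration probability
epsilonMin = 0.01;      % assumed lower bound on epsilon
epsilonDecay = 1e-4;    % decay rate set in the agent options above

% Assumed update rule: multiply by (1 - decay) each step until the floor.
numSteps = 0;
while epsilon > epsilonMin
    epsilon = epsilon*(1 - epsilonDecay);
    numSteps = numSteps + 1;
end

% Closed-form estimate of the same step count.
estimate = log(epsilonMin)/log(1 - epsilonDecay);
fprintf('Steps to reach the floor: %d (estimate %.0f)\n', numSteps, estimate);
```

Under these assumptions the agent keeps exploring for tens of thousands of steps, which is many full-length episodes at the episode length used in this example.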

Then create the DQN agent using the specified critic representation and agent options. For more information, see rlDQNAgent.

agent = rlDQNAgent(critic,agentOpts);

Training Options

To train the agent, first specify the training options. For this example, use the following options.

Run each training for at most 10000 episodes, with each episode lasting at most ceil(T/Ts) time steps.

Display the training progress in the Episode Manager dialog box only (set the Plots and Verbose options accordingly).

Stop training when the episode reward reaches -1.

Save a copy of the agent for each episode where the cumulative reward is greater than 100.

For more information, see rlTrainingOptions.

maxepisodes = 10000;
maxsteps = ceil(T/Ts);
trainOpts = rlTrainingOptions(...
    'MaxEpisodes',maxepisodes,...
    'MaxStepsPerEpisode',maxsteps,...
    'Verbose',false,...
    'Plots','training-progress',...
    'StopTrainingCriteria','EpisodeReward',...
    'StopTrainingValue',-1,...
    'SaveAgentCriteria','EpisodeReward',...
    'SaveAgentValue',100);
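As a rough sanity check on these limits, the sketch below computes the per-episode step cap and the resulting upper bound on total training steps from the values of T and Ts set in this example. The comparison with the experience buffer length (1e6, from the agent options) is only illustrative, and the names `maxTotalSteps` and `bufferLength` are introduced here.

```matlab
% Values taken from this example.
Ts = 0.1;                 % sample time (s)
T = 15;                   % simulation duration (s)
maxepisodes = 10000;      % episode cap
bufferLength = 1e6;       % experience buffer length from the agent options

% Step cap per episode and upper bound on total steps.
maxsteps = ceil(T/Ts);
maxTotalSteps = maxepisodes*maxsteps;

fprintf('Steps per episode: %d\n', maxsteps);
fprintf('Upper bound on total steps: %d\n', maxTotalSteps);
fprintf('Bound exceeds buffer length: %d\n', double(maxTotalSteps > bufferLength));
```

Training normally stops earlier, once the stop criterion is met, so this bound is a worst case and not an expected run length.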

Parallel Training Options

To train the agent in parallel, specify the following training options.

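Set the UseParallel option to true.

Train the agent in parallel asynchronously by setting the ParallelizationOptions.Mode option to "async".

After every 32 steps, each worker sends experiences to the host.

The DQN agent requires workers to send "experiences" to the host.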

trainOpts.UseParallel = true;
trainOpts.ParallelizationOptions.Mode = "async";
trainOpts.ParallelizationOptions.DataToSendFromWorkers = "experiences";
trainOpts.ParallelizationOptions.StepsUntilDataIsSent = 32;

For more information, see rlTrainingOptions.

Train Agent

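Train the agent using the train function. Training the agent is a computationally intensive process that takes several minutes to complete. To save time while running this example, load a pretrained agent by setting doTraining to false. To train the agent yourself, set doTraining to true. Due to the randomness of parallel training, you can expect training results that differ from the plot below. The plot shows the result of training with four workers.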

doTraining = false;

if doTraining
    % Train the agent.
    trainingStats = train(agent,env,trainOpts);
else
    % Load the pretrained agent for the example.
    load('SimulinkLKADQNParallel.mat','agent')
end

Simulate DQN Agent

To validate the performance of the trained agent, uncomment the following two lines and simulate the agent within the environment. For more information on agent simulation, see rlSimulationOptions and sim.

% simOptions = rlSimulationOptions('MaxSteps',maxsteps);
% experience = sim(env,agent,simOptions);

To demonstrate the trained agent using deterministic initial conditions, simulate the model in Simulink.

e1_initial = -0.4;
e2_initial = 0.2;
sim(mdl)

As shown below, the lateral error (middle plot) and relative yaw angle (bottom plot) are both driven to zero. The vehicle starts from off centerline (–0.4 m) and nonzero yaw angle error (0.2 rad). The LKA enables the ego car to travel along the centerline after 2.5 seconds. The steering angle (top plot) shows that the controller reaches steady state after 2 seconds.

Local Function

function in = localResetFcn(in)
% reset
in = setVariable(in,'e1_initial', 0.5*(-1+2*rand)); % random value for lateral deviation
in = setVariable(in,'e2_initial', 0.1*(-1+2*rand)); % random value for relative yaw angle
end
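The two random expressions in the reset function draw uniformly from symmetric intervals: the lateral deviation from [–0.5,0.5] m and the relative yaw angle from [–0.1,0.1] rad. The following sketch samples the same expressions many times in base MATLAB to confirm those ranges. The sample count `n` and the variable names are chosen here for illustration.

```matlab
% Sample the two expressions used in the reset function many times.
rng(0)                                  % fixed seed for repeatability
n = 1e4;                                % number of samples (arbitrary)
e1 = 0.5*(-1 + 2*rand(n,1));            % lateral deviation samples (m)
e2 = 0.1*(-1 + 2*rand(n,1));            % relative yaw angle samples (rad)

fprintf('e1 range: [%.3f, %.3f] m\n', min(e1), max(e1));
fprintf('e2 range: [%.3f, %.3f] rad\n', min(e2), max(e2));
```

The deterministic initial conditions used in the simulation above (–0.4 m and 0.2 rad) show that the yaw angle test case lies outside the range sampled during training, while the lateral deviation lies inside it.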