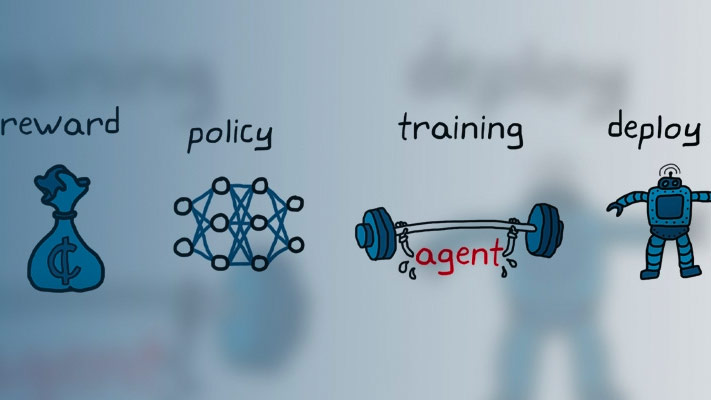

Deploy Trained Reinforcement Learning Policies

Once you train a reinforcement learning agent, you can generate code to deploy the optimal policy. You can generate:

CUDA® code for deep neural network policies using GPU Coder™

C/C++ code for table, deep neural network, or linear basis function policies using MATLAB® Coder™

Code generation is supported for agents that use feedforward neural networks in any of the input paths, provided that all the layers used are supported. Code generation is not supported for continuous-action PG, AC, PPO, and SAC agents that use a recurrent neural network (RNN).

For more information on training reinforcement learning agents, see Train Reinforcement Learning Agents.

To create a policy evaluation function that selects an action based on a given observation, use the generatePolicyFunction command. This command generates a MATLAB script, which contains the policy evaluation function, and a MAT-file, which contains the optimal policy data.

You can generate code to deploy this policy function using GPU Coder or MATLAB Coder.

Generate Code Using GPU Coder

If your trained optimal policy uses a deep neural network, you can generate CUDA code for the policy using GPU Coder. For more information on supported GPUs, see GPU Support by Release (Parallel Computing Toolbox). There are several required and recommended prerequisite products for generating CUDA code for deep neural networks. For more information, see Installing Prerequisite Products (GPU Coder) and Setting Up the Prerequisite Products (GPU Coder).

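Not all deep neural network layers support GPU code generation. For a list of supported layers, see Supported Networks, Layers, and Classes (GPU Coder). For more information and examples on GPU code generation, see Deep Learning with GPU Coder (GPU Coder).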

Generate CUDA Code for Deep Neural Network Policy

As an example, generate GPU code for the policy gradient agent trained in Train PG Agent to Balance Cart-Pole System.

Load the trained agent.

load('MATLABCartpolePG.mat','agent')

Create a policy evaluation function for this agent.

generatePolicyFunction(agent)

This command creates the evaluatePolicy.m file, which contains the policy function, and the agentData.mat file, which contains the trained deep neural network actor. For a given observation, the policy function evaluates a probability for each potential action using the actor network. Then, the policy function randomly selects an action based on these probabilities.
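The random selection step can be sketched in C as inverse-CDF sampling over the action probabilities. This is a minimal illustration under stated assumptions, not the code that generatePolicyFunction or the coder products actually produce: the function name sample_action is hypothetical, the probabilities are assumed to sum to 1, and the caller is assumed to supply a uniform random number u in [0, 1).

```c
#include <assert.h>
#include <stddef.h>

/*
 * Hypothetical sketch of stochastic action selection for a
 * discrete-action policy gradient actor.
 *   probs: action probabilities from the actor network (assumed to sum to 1)
 *   n:     number of possible actions
 *   u:     uniform random number in [0, 1), supplied by the caller
 * Returns the 0-based index of the selected action.
 */
size_t sample_action(const double *probs, size_t n, double u)
{
    assert(n > 0);
    double cumulative = 0.0;
    for (size_t i = 0; i < n; ++i) {
        cumulative += probs[i];
        if (u < cumulative) {
            return i; /* first action whose cumulative probability exceeds u */
        }
    }
    return n - 1; /* guard against rounding when the sum is slightly below 1 */
}
```

In a standalone program, one way to obtain u is rand() / ((double)RAND_MAX + 1.0) from <stdlib.h>. With probabilities {0.3, 0.7}, any u below 0.3 selects the first action and any larger u selects the second, so each action is chosen in proportion to its probability.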

You can generate code for this network using GPU Coder. For example, you can generate a CUDA-compatible MEX function.

Configure the codegen function to create a CUDA-compatible C++ MEX function.

cfg = coder.gpuConfig('mex');
cfg.TargetLang = 'C++';
cfg.DeepLearningConfig = coder.DeepLearningConfig('cudnn');

Set an example input value for the policy evaluation function. To find the observation dimension, use the getObservationInfo function. In this case, the observations are in a four-element vector.

argstr = '{ones(4,1)}';

Generate code using the codegen function.

codegen('-config','cfg','evaluatePolicy','-args',argstr,'-report');

This command generates the MEX function evaluatePolicy_mex.

Generate Code Using MATLAB Coder

You can generate C/C++ code for table, deep neural network, or linear basis function policies using MATLAB Coder.

Using MATLAB Coder, you can generate:

C/C++ code for policies that use Q tables, value tables, or linear basis functions. For more information on general C/C++ code generation, see Generating Code (MATLAB Coder).

C/C++ code for policies that use deep neural networks. Note that code generation is not supported for continuous-action PG, AC, PPO, and SAC agents that use a recurrent neural network (RNN). For a list of supported layers, see Networks and Layers Supported for Code Generation (MATLAB Coder). For more information, see Prerequisites for Deep Learning with MATLAB Coder (MATLAB Coder) and Deep Learning with MATLAB Coder (MATLAB Coder).

Generate C Code for Deep Neural Network Policy without using any Third-Party Library

As an example, generate C code without dependencies on third-party libraries for the policy gradient agent trained in Train PG Agent to Balance Cart-Pole System.

Load the trained agent.

load('MATLABCartpolePG.mat','agent')

Create a policy evaluation function for this agent.

generatePolicyFunction(agent)

This command creates the evaluatePolicy.m file, which contains the policy function, and the agentData.mat file, which contains the trained deep neural network actor. For a given observation, the policy function evaluates a probability for each potential action using the actor network. Then, the policy function randomly selects an action based on these probabilities.

Configure the codegen function to generate code suitable for building a MEX file.

cfg = coder.config('mex');

On the configuration object, set the target language to C, and set DeepLearningConfig to 'none'. This option generates code without using any third-party library.

cfg.TargetLang = 'C';
cfg.DeepLearningConfig = coder.DeepLearningConfig('none');
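Setting DeepLearningConfig to 'none' means the network arithmetic is expressed directly in the generated code instead of being delegated to a library such as MKL-DNN. As a rough, hypothetical illustration of that style of code (not actual MATLAB Coder output), a fully connected layer with a ReLU activation reduces to nested loops in plain C. The function name fully_connected_relu, the row-major weight layout, and the choice of ReLU are all assumptions made for this sketch.

```c
#include <assert.h>
#include <stddef.h>

/*
 * Hypothetical plain-C fully connected layer followed by ReLU,
 * written without any deep learning library.
 *   W: weights, row-major, out_size rows by in_size columns (W[o * in_size + i])
 *   b: bias vector of length out_size
 *   x: input vector of length in_size
 *   y: output vector of length out_size (written by this function)
 */
void fully_connected_relu(const double *W, const double *b,
                          const double *x, size_t in_size,
                          size_t out_size, double *y)
{
    assert(in_size > 0 && out_size > 0);
    for (size_t o = 0; o < out_size; ++o) {
        double acc = b[o];                    /* start from the bias */
        for (size_t i = 0; i < in_size; ++i) {
            acc += W[o * in_size + i] * x[i]; /* dot product with row o */
        }
        y[o] = acc > 0.0 ? acc : 0.0;         /* ReLU activation */
    }
}
```

For a four-element observation like the ones(4,1) example input, in_size is 4; out_size depends on the layer width in your actor network.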

Set an example input value for the policy evaluation function. To find the observation dimension, use the getObservationInfo function. In this case, the observations are in a four-element vector.

argstr = '{ones(4,1)}';

Generate code using the codegen function.

codegen('-config','cfg','evaluatePolicy','-args',argstr,'-report');

This command generates the C code for the policy gradient agent containing the deep neural network actor.

Generate C++ Code for Deep Neural Network Policy Using Third-Party Libraries

As an example, generate C++ code for the policy gradient agent trained in Train PG Agent to Balance Cart-Pole System using the Intel Math Kernel Library for Deep Neural Networks (MKL-DNN).

Load the trained agent.

load('MATLABCartpolePG.mat','agent')

Create a policy evaluation function for this agent.

generatePolicyFunction(agent)

This command creates the evaluatePolicy.m file, which contains the policy function, and the agentData.mat file, which contains the trained deep neural network actor. For a given observation, the policy function evaluates a probability for each potential action using the actor network. Then, the policy function randomly selects an action based on these probabilities.

Configure the codegen function to generate code suitable for building a MEX file.

cfg = coder.config('mex');

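On the configuration object, set the target language to C++, and set DeepLearningConfig to the target library 'mkldnn'. This option generates code using the Intel Math Kernel Library for Deep Neural Networks (Intel MKL-DNN).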

cfg.TargetLang = 'C++';
cfg.DeepLearningConfig = coder.DeepLearningConfig('mkldnn');

Set an example input value for the policy evaluation function. To find the observation dimension, use the getObservationInfo function. In this case, the observations are in a four-element vector.

argstr = '{ones(4,1)}';

Generate code using the codegen function.

codegen('-config','cfg','evaluatePolicy','-args',argstr,'-report');

This command generates the C++ code for the policy gradient agent containing the deep neural network actor.

Generate C Code for Q Table Policy

As an example, generate C code for the Q-learning agent trained in Train Reinforcement Learning Agent in Basic Grid World.

Load the trained agent.

load('basicGWQAgent.mat','qAgent')

Create a policy evaluation function for this agent.

generatePolicyFunction(qAgent)

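This command creates the evaluatePolicy.m file, which contains the policy function, and the agentData.mat file, which contains the trained Q table value function. For a given observation, the policy function looks up the value function for each potential action using the Q table. Then, the policy function selects the action for which the value function is greatest.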
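The lookup-and-maximize step can be sketched in C as an argmax over one row of the Q table. This is a minimal sketch, not the generated code: it assumes a row-major table with one row per discrete observation and one column per action, and it assumes 1-based observation and action indices to mirror MATLAB indexing. The function name greedy_action is hypothetical.

```c
#include <assert.h>
#include <stddef.h>

/*
 * Hypothetical greedy action selection from a Q table.
 *   q:           row-major table, q[(obs - 1) * num_actions + a]
 *   num_obs:     number of discrete observations (rows)
 *   num_actions: number of discrete actions (columns)
 *   obs:         1-based observation index (assumption)
 * Returns the 1-based index of the action with the largest value;
 * ties resolve to the lowest index.
 */
size_t greedy_action(const double *q, size_t num_obs,
                     size_t num_actions, size_t obs)
{
    assert(obs >= 1 && obs <= num_obs);
    assert(num_actions > 0);
    const double *row = q + (obs - 1) * num_actions; /* values for this observation */
    size_t best = 0;
    for (size_t a = 1; a < num_actions; ++a) {
        if (row[a] > row[best]) { /* strict > keeps the first maximum */
            best = a;
        }
    }
    return best + 1; /* convert to 1-based action index */
}
```

Ties resolve to the lowest action index, which matches the MATLAB max function, since max returns the index of the first occurrence when several elements share the maximum value.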

Set an example input value for the policy evaluation function. To find the observation dimension, use the getObservationInfo function. In this case, there is a single one-dimensional observation (belonging to a discrete set of possible values).

argstr ='{[1]}';

Configure the codegen function to generate embeddable C code suitable for targeting a static library, and set the output folder to buildFolder.

cfg = coder.config('lib');
outFolder = 'buildFolder';

Generate C code using the codegen function.

codegen('-c','-d',outFolder,'-config','cfg',...
    'evaluatePolicy','-args',argstr,'-report');