sim

Simulate trained reinforcement learning agents within specified environment

Description

Examples

Simulate Reinforcement Learning Environment

Simulate a reinforcement learning environment with an agent configured for that environment. For this example, load an environment and agent that are already configured. The environment is a discrete cart-pole environment created with rlPredefinedEnv. The agent is a policy gradient (rlPGAgent) agent. For more information about the environment and agent used in this example, see Train PG Agent to Balance Cart-Pole System.

rng(0) % for reproducibility
load RLSimExample.mat
env

env = 
  CartPoleDiscreteAction with properties:
                  Gravity: 9.8000
                 MassCart: 1
                 MassPole: 0.1000
                   Length: 0.5000
                 MaxForce: 10
                       Ts: 0.0200
    ThetaThresholdRadians: 0.2094
               XThreshold: 2.4000
      RewardForNotFalling: 1
        PenaltyForFalling: -5
                    State: [4×1 double]

agent

agent = 
  rlPGAgent with properties:
            AgentOptions: [1×1 rl.option.rlPGAgentOptions]
    UseExplorationPolicy: 1
         ObservationInfo: [1×1 rl.util.rlNumericSpec]
              ActionInfo: [1×1 rl.util.rlFiniteSetSpec]
              SampleTime: 0.1000

Typically, you train the agent using train and then simulate the environment to test the performance of the trained agent. For this example, simulate the environment using the agent you loaded. Configure simulation options, specifying that the simulation run for 100 steps.

simOpts = rlSimulationOptions('MaxSteps',100);

For the predefined cart-pole environment used in this example, you can use plot to generate a visualization of the cart-pole system. When you simulate the environment, this plot updates automatically so that you can watch the system evolve during the simulation.

plot(env)

Simulate the environment.

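experience = sim(env,agent,simOpts)

experience = struct with fields:
       Observation: [1×1 struct]
            Action: [1×1 struct]
            Reward: [1×1 timeseries]
            IsDone: [1×1 timeseries]
    SimulationInfo: [1×1 struct]

The output structure experience records the observations collected from the environment, the actions and rewards, and other data collected during the simulation. Each field contains a timeseries object or a structure of timeseries data objects. For instance, experience.Action is a structure whose CartPoleAction field is a timeseries containing the action that the agent applies to the cart-pole system at each step of the simulation.

experience.Action

ans = struct with fields:
    CartPoleAction: [1×1 timeseries]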
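As a follow-up, the logged reward can be summarized directly from the output structure. The sketch below uses a small synthetic stand-in for experience (five made-up steps, not results from the cart-pole run above) so that it runs without the toolbox. With a real simulation result, only the last two statements would be needed.

```matlab
% Synthetic stand-in for the structure returned by sim (assumption:
% five steps sampled every 0.02 s, with a penalty on the last step).
t = (0:4)' * 0.02;
experience.Reward = timeseries([1; 1; 1; 1; -5], t);

% Total reward and number of logged steps for the episode.
% Data(:) flattens the samples regardless of their array layout.
totalReward = sum(experience.Reward.Data(:));
numSteps = experience.Reward.Length;
```

The total reward is a simple single-number check of how an agent performed in one simulated episode.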

Simulate Simulink Environment with Multiple Agents

Simulate an environment created for the Simulink® model used in the example Train Multiple Agents to Perform Collaborative Task, using the agents trained in that example.

Load the agents in the MATLAB® workspace.

load rlCollaborativeTaskAgents

Create an environment for the rlCollaborativeTask Simulink® model, which has two agent blocks. Because the agents used by the two blocks (agentA and agentB) are already in the workspace, you do not need to pass their observation and action specifications to create the environment.

env = rlSimulinkEnv('rlCollaborativeTask',["rlCollaborativeTask/Agent A","rlCollaborativeTask/Agent B"]);

Load the parameters that the rlCollaborativeTask Simulink® model needs in order to run.

rlCollaborativeTaskParams

Simulate the agents against the environment, saving the experiences in xpr.

xpr = sim(env,[agentA agentB]);

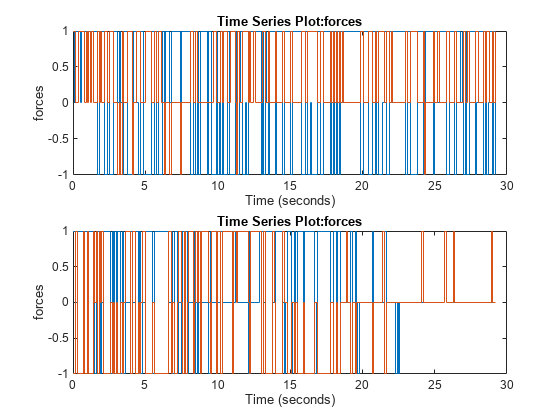

Plot actions of both agents.

subplot(2,1,1); plot(xpr(1).Action.forces)
subplot(2,1,2); plot(xpr(2).Action.forces)

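Input Arguments

env — Environment

reinforcement learning environment object

Environment in which the agents act, specified as one of the following kinds of reinforcement learning environment object: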

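- A predefined MATLAB® or Simulink® environment created using rlPredefinedEnv. This kind of environment does not support training multiple agents at the same time.
- A custom MATLAB environment you create with functions such as rlFunctionEnv or rlCreateEnvTemplate. This kind of environment does not support training multiple agents at the same time.
- A custom Simulink environment you create using rlSimulinkEnv. This kind of environment supports training multiple agents at the same time.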

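For more information about creating and configuring environments, see:

When env is a Simulink environment, calling sim compiles and simulates the model associated with the environment.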

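agents — Agents

reinforcement learning agent object | array of agent objects

Agents to simulate, specified as a reinforcement learning agent object, such as rlACAgent or rlDDPGAgent, or as an array of such objects.

If env is a multi-agent environment created with rlSimulinkEnv, specify agents as an array. The order of the agents in the array must match the agent order used to create env. Multi-agent simulation is not supported for MATLAB environments.

For more information about how to create and configure agents for reinforcement learning, see Reinforcement Learning Agents.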

simOpts — Simulation options

rlSimulationOptions object

Simulation options, specified as an rlSimulationOptions object. Use this argument to specify options such as:

- Number of steps per simulation
- Number of simulations to run

For details, see rlSimulationOptions.
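As an illustration of the two options listed above, the following sketch sets both through name-value arguments and then changes one of them with dot notation. It assumes Reinforcement Learning Toolbox is installed; the specific values (100, 5, 200) are arbitrary choices for the example.

```matlab
% Limit each simulation to 100 steps and request 5 simulations
simOpts = rlSimulationOptions('MaxSteps',100,'NumSimulations',5);

% Options can also be modified after creation using dot notation
simOpts.MaxSteps = 200;
```

Passing this object as the third argument to sim would then run five simulations of at most 200 steps each.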

Output Arguments

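experience — Simulation results

structure | structure array

Simulation results, returned as a structure or structure array. The number of rows in the array is equal to the number of simulations specified by the NumSimulations option of rlSimulationOptions. The number of columns in the array is the number of agents. The fields of each experience structure are as follows.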

Observation— Observations

structure

Observations collected from the environment, returned as a structure with fields corresponding to the observations specified in the environment. Each field contains a timeseries of length N + 1, where N is the number of simulation steps.

To obtain the current observation and the next observation for a given simulation step, use code such as the following, assuming one of the fields of Observation is obs1.

Obs = getSamples(experience.Observation.obs1,1:N);
NextObs = getSamples(experience.Observation.obs1,2:N+1);

These values can be useful if you are writing your own training algorithm that uses sim to generate experiences for training.
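To make the N versus N + 1 alignment concrete, the sketch below pairs each observation with its reward and next observation by indexing the timeseries Data arrays directly. The experience structure here is a synthetic stand-in built for illustration (the field name obs1, the 2-by-1 observation size, and all values are assumptions), with time stored along the last dimension of the observation data.

```matlab
% Synthetic stand-in for a simulation result with N = 3 steps
N = 3;
obsData = reshape(1:2*(N+1), 2, 1, N+1);      % 2x1 observation, N+1 samples
experience.Observation.obs1 = timeseries(obsData, (0:N)');
experience.Reward = timeseries([1; 1; -5], (0:N-1)');

% Split the N+1 observations into current and next observations
allObs  = experience.Observation.obs1.Data;   % time along last dimension
obs     = allObs(:,:,1:N);
nextObs = allObs(:,:,2:N+1);
reward  = experience.Reward.Data(:);

% Assemble the transition for step k
k = 2;
transition = struct('Obs',obs(:,:,k),'Reward',reward(k),'NextObs',nextObs(:,:,k));
```

The next observation at step k is the same sample as the current observation at step k + 1, which is why the observation series has one more sample than the action and reward series.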

Action — Actions

structure

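Actions computed by the agent, returned as a structure with fields corresponding to the action signals specified in the environment. Each field contains a timeseries of length N, where N is the number of simulation steps.

Reward — Rewards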

timeseries

Reward at each step in the simulation, returned as a timeseries of length N, where N is the number of simulation steps.

IsDone— Flag indicating termination of episode

timeseries

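Flag indicating termination of the episode, returned as a timeseries of a scalar logical signal. The environment sets this flag at each step, according to the episode termination conditions you specify when you configure the environment. When the environment sets this flag to 1, the simulation terminates.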
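To locate the step at which an episode ended, the IsDone signal can be searched for its first true sample. This is a minimal sketch using a hand-made IsDone timeseries (an assumption for illustration; the 0.02 s spacing mirrors the Ts value of the cart-pole example).

```matlab
% Synthetic IsDone signal: the episode terminates on the fourth step
isDone = timeseries(logical([0; 0; 0; 1]), (0:3)' * 0.02);

% Index and time of the first step at which the flag is set
stepIdx = find(isDone.Data(:), 1, 'first');
endTime = isDone.Time(stepIdx);
```

If the flag is never set, for example because the simulation stopped at the maximum number of steps, find returns an empty array and endTime is empty as well.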

SimulationInfo — Information collected during simulation

structure | vector of Simulink.SimulationOutput objects

Information collected during simulation, returned as one of the following:

- For MATLAB environments, a structure containing the field SimulationError. This structure contains any errors that occurred during simulation.
- For Simulink environments, a Simulink.SimulationOutput object containing simulation data. Recorded data includes any signals and states that the model is configured to log, simulation metadata, and any errors that occurred.
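When several simulations or several agents are involved, the experience output is a structure array with one row per simulation and one column per agent. The sketch below shows one way to index such an array and compute a total reward per entry. The array is a synthetic stand-in (3 simulations, 2 agents, made-up rewards) so that the code runs without the toolbox.

```matlab
% Synthetic stand-in: 3 simulations (rows) by 2 agents (columns)
numSims = 3;
numAgents = 2;
experience = repmat(struct('Reward',[]), numSims, numAgents);
for i = 1:numSims
    for j = 1:numAgents
        % Agent j receives a constant reward of j on each of 4 steps
        experience(i,j).Reward = timeseries(j * ones(4,1), (0:3)');
    end
end

% Total reward for every simulation and agent, as a 3x2 matrix
totalReward = arrayfun(@(e) sum(e.Reward.Data(:)), experience);

% Total reward of agent 2 in simulation 1
r12 = totalReward(1,2);
```

Averaging totalReward down its rows, for example with mean(totalReward,1), would give a per-agent summary across simulations.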

Version History
