Deep Learning HDL Toolbox

Prototype and deploy deep learning networks on FPGAs and SoCs

Deep Learning HDL Toolbox™ provides functions and tools to prototype and implement deep learning networks on FPGAs and SoCs. It provides prebuilt bitstreams for running a variety of deep learning networks on supported Xilinx® and Intel® FPGA and SoC devices. Profiling and estimation tools let you customize a deep learning network by exploring design, performance, and resource utilization tradeoffs.

Deep Learning HDL Toolbox enables you to customize the hardware implementation of your deep learning network and generate portable, synthesizable Verilog® and VHDL® code for deployment on FPGAs (with HDL Coder™ and Simulink®).


Programmable Deep Learning Processor

The toolbox includes a deep learning processor that features generic convolution and fully connected layers controlled by scheduling logic. This deep learning processor performs FPGA-based inferencing of networks developed using Deep Learning Toolbox™. High-bandwidth memory interfaces speed up memory transfers of layer and weight data.

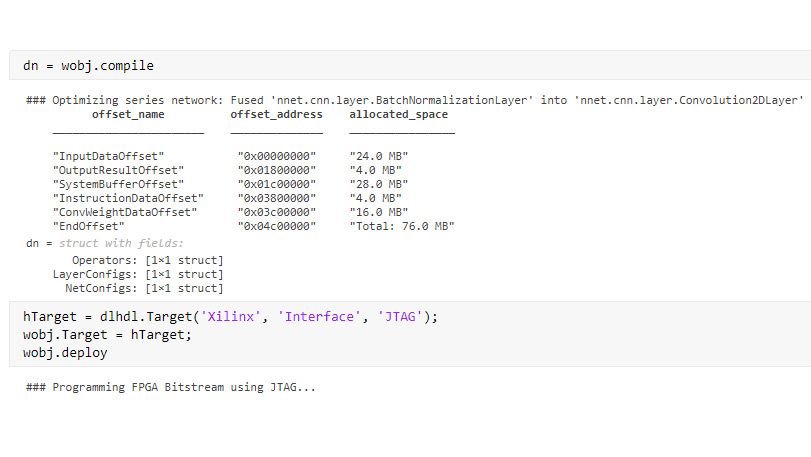

Compile and Deploy

Compile your deep learning network into a set of instructions to be run by the deep learning processor. Deploy to the FPGA and run prediction while capturing actual on-device performance metrics.
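To make the idea of compilation concrete, here is a minimal Python sketch of what it means conceptually: walk the layers in order and emit one instruction per layer for the generic module that should execute it. The layer-to-module mapping, the `Instruction` type, and `compile_network` are hypothetical illustrations; this is not the toolbox's compiler, its supported-layer list, or its instruction format.

```python
from dataclasses import dataclass

# Hypothetical assignment of layer types to the processor's generic modules.
# The real toolbox defines its own supported layers and instruction format.
MODULE_FOR_LAYER = {
    "conv": "CONV",
    "relu": "CONV",
    "maxpool": "CONV",
    "fc": "FC",
}


@dataclass(frozen=True)
class Instruction:
    module: str  # generic processor module that executes this step
    layer: str   # name of the layer this step came from
    op: str      # layer type


def compile_network(layers):
    """Turn an ordered list of (name, layer_type) pairs into an instruction list."""
    program = []
    for name, layer_type in layers:
        module = MODULE_FOR_LAYER.get(layer_type)
        if module is None:
            # Unsupported layers are rejected at compile time, before anything runs.
            raise ValueError(f"layer {name!r}: unsupported type {layer_type!r}")
        program.append(Instruction(module, name, layer_type))
    return program


net = [("conv1", "conv"), ("relu1", "relu"), ("pool1", "maxpool"), ("fc1", "fc")]
for step in compile_network(net):
    print(f"{step.module:4s} {step.op:8s} {step.layer}")
```

Because the output is data rather than hardware, a different network can be compiled into a new instruction list while the processor itself stays the same.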

Get Started with Prebuilt Bitstreams

Prototype your network without FPGA programming by using available bitstreams for popular FPGA development kits.

Creating a Network for Deployment

Begin by using Deep Learning Toolbox to design, train, and analyze your deep learning network for tasks such as object detection or classification. You can also start by importing a trained network or layers from other frameworks.

Deploying Your Network to the FPGA

Once you have a trained network, use the deploy command to program the FPGA with the deep learning processor along with the Ethernet or JTAG interface. Then use the compile command to generate a set of instructions for your trained network without reprogramming the FPGA.

Run FPGA-Based Inferencing as Part of Your MATLAB Application

Run your entire application in MATLAB®, including your test bench, preprocessing and post-processing algorithms, and the FPGA-based deep learning inferencing. A single MATLAB command, predict, executes the inference on the FPGA and returns the results to the MATLAB workspace.

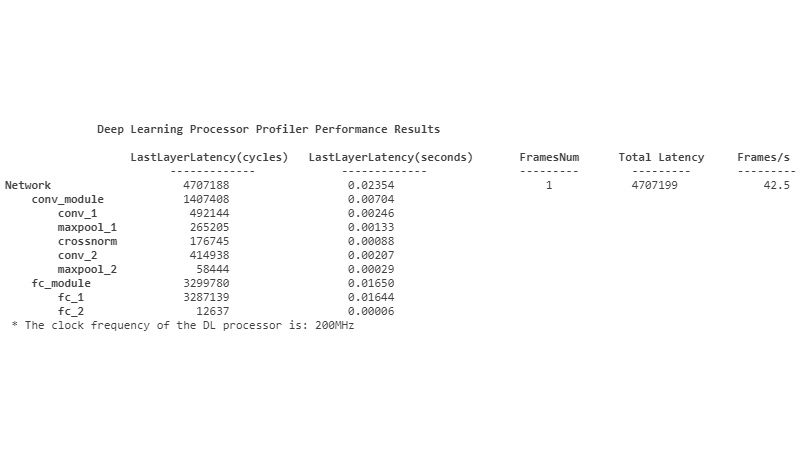

Profile FPGA Inferencing

Measure layer-level latency as you run predictions on the FPGA to find performance bottlenecks.
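Once you have per-layer cycle counts, finding the bottleneck is simple arithmetic. The Python sketch below assumes hypothetical cycle counts and an assumed 200 MHz processor clock; it converts cycles to milliseconds, reports each layer's share of the total latency, and flags the slowest layer. The numbers are illustrative placeholders, not measurements from any device.

```python
# Hypothetical per-layer cycle counts standing in for on-device profiler output.
layer_cycles = {
    "conv1": 1_200_000,
    "conv2": 3_400_000,
    "conv3": 2_100_000,
    "fc1": 450_000,
}

CLOCK_HZ = 200e6  # assumed processor clock frequency (200 MHz)


def latency_ms(cycles, clock_hz=CLOCK_HZ):
    """Convert a cycle count to milliseconds at the given clock frequency."""
    return cycles / clock_hz * 1e3


def profile_report(cycles_by_layer, clock_hz=CLOCK_HZ):
    """Return per-layer latency (ms), total latency (ms), and the slowest layer."""
    per_layer = {name: latency_ms(c, clock_hz) for name, c in cycles_by_layer.items()}
    total = sum(per_layer.values())
    bottleneck = max(per_layer, key=per_layer.get)
    return per_layer, total, bottleneck


per_layer, total, bottleneck = profile_report(layer_cycles)
for name, ms in per_layer.items():
    print(f"{name:6s} {ms:7.3f} ms  ({ms / total:5.1%})")
print(f"total  {total:7.3f} ms ({1e3 / total:.1f} inferences/s), bottleneck: {bottleneck}")
```

The layer with the largest share is the first candidate for the network changes described next.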

Tune the Network Design

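Using the profile metrics, tune your network configuration with Deep Learning Toolbox. For example, use Deep Network Designer to add layers, remove layers, or create new connections.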

Deep Learning Quantization

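Reduce resource utilization by quantizing your deep learning network to a fixed-point representation. Analyze tradeoffs between accuracy and resource utilization using the Model Quantization Library support package.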
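As a minimal sketch of what a fixed-point representation means for a weight tensor, the Python code below assumes signed 8-bit words with power-of-two scaling (a fraction length). It picks the fraction length from the largest magnitude, rounds and saturates, and measures the resulting error. It only illustrates the arithmetic and is not the Model Quantization Library's implementation; real workflows typically derive value ranges from calibration data rather than from a single tensor.

```python
import math


def fraction_length(values, word_length=8):
    """Largest number of fractional bits such that every value fits in a signed word."""
    max_abs = max(abs(v) for v in values)
    q_max = 2 ** (word_length - 1) - 1  # 127 for an 8-bit signed word
    if max_abs == 0:
        return word_length - 1  # any scale works for an all-zero tensor
    return math.floor(math.log2(q_max / max_abs))


def quantize(values, frac_bits, word_length=8):
    """q = round(v * 2**frac_bits) (ties to even), saturated to the signed word range."""
    q_min, q_max = -(2 ** (word_length - 1)), 2 ** (word_length - 1) - 1
    return [min(q_max, max(q_min, round(v * 2 ** frac_bits))) for v in values]


def dequantize(q_values, frac_bits):
    """Recover approximate real values: v is approximately q * 2**-frac_bits."""
    return [q * 2.0 ** -frac_bits for q in q_values]


weights = [0.5, -1.25, 0.03, 0.9]
f = fraction_length(weights)
q = quantize(weights, f)
approx = dequantize(q, f)
max_err = max(abs(a - w) for a, w in zip(approx, weights))

print("fraction length:", f)
print("int8 values:", q)
print("max abs error:", max_err)
# Storage per weight drops from 4 bytes (single precision) to 1 byte (int8).
print("bytes:", 4 * len(weights), "->", len(q))
```

Each extra fractional bit halves the step size but removes one bit of range, so the fraction length trades range against precision; that is the accuracy side of the tradeoff, while the smaller word is the resource side.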

Customize the Deep Learning Processor Configuration

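Specify hardware architecture options for implementing the deep learning processor, such as the number of parallel threads or the maximum layer size.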
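To see why thread count and maximum layer size are tradeoffs, the Python sketch below uses a deliberately idealized model: each convolution thread retires one multiply-accumulate per cycle with no memory stalls, and each layer's larger activation tensor must fit in an on-chip buffer. The option names `conv_threads` and `max_layer_elems` are hypothetical stand-ins, not the toolbox's configuration properties, and the model ignores the extra FPGA resources that more threads and larger buffers consume.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class ProcessorOptions:
    """Hypothetical architecture knobs, loosely modeled on the options described above."""
    conv_threads: int     # parallel multiply-accumulate lanes in the conv module
    max_layer_elems: int  # largest activation tensor (H*W*C) the buffer can hold


def conv_macs(h, w, c_in, c_out, k):
    """Multiply-accumulates for a 'same'-padded, stride-1, k x k convolution."""
    return h * w * c_out * c_in * k * k


def estimate(layers, opts):
    """Idealized total cycle count; raises if any layer exceeds the buffer size."""
    total = 0
    for name, (h, w, c_in, c_out, k) in layers.items():
        if h * w * max(c_in, c_out) > opts.max_layer_elems:
            raise ValueError(f"{name} does not fit in a buffer of {opts.max_layer_elems} elements")
        # Ideal model: every thread completes one MAC per cycle.
        total += math.ceil(conv_macs(h, w, c_in, c_out, k) / opts.conv_threads)
    return total


example = {
    "conv1": (32, 32, 3, 16, 3),  # (height, width, in_channels, out_channels, kernel)
    "conv2": (16, 16, 16, 32, 3),
}
for threads in (16, 64):
    cycles = estimate(example, ProcessorOptions(conv_threads=threads, max_layer_elems=65536))
    print(f"{threads:3d} threads: {cycles} cycles")

try:
    estimate(example, ProcessorOptions(conv_threads=64, max_layer_elems=8192))
except ValueError as err:
    print("config rejected:", err)
```

In this model, quadrupling the threads divides the cycle count by four, while shrinking the buffer rejects layers outright; on real hardware both choices also change the resource footprint, which is why they are exposed as options.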

Generate Synthesizable RTL

Use HDL Coder to generate synthesizable RTL from the deep learning processor for use in a variety of implementation workflows and devices. Reuse the same deep learning processor for prototype and production deployment.

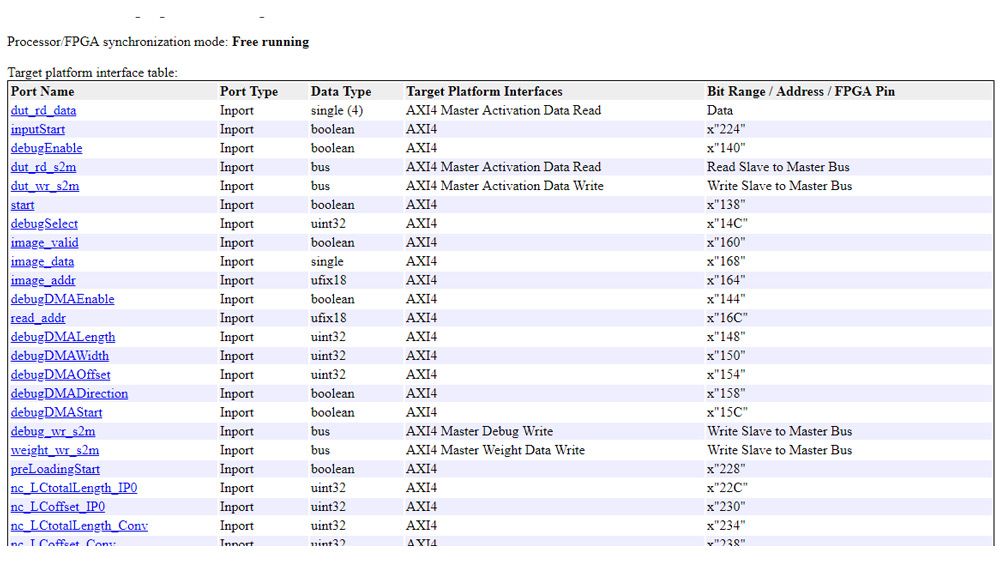

Generate IP Cores for Integration

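When HDL Coder generates RTL from the deep learning processor, it also generates an IP core with standard AXI interfaces for integration into your SoC reference design.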