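Activity Recognition from Video and Optical Flow Data Using Deep Learning

This example shows how to train an Inflated 3-D (I3D) two-stream convolutional neural network for activity recognition using RGB and optical flow data from videos [1].

Vision-based activity recognition involves predicting the action of an object, such as walking, swimming, or sitting, using a set of video frames. Activity recognition from video has many applications, such as human-computer interaction, robot learning, anomaly detection, surveillance, and object detection. For example, online prediction of multiple actions for incoming videos from multiple cameras can be important for robot learning. Compared to image classification, action recognition using videos is challenging because of the noisy labels in video data sets, the heavy class imbalance among the many actions that actors in a video can perform, and the computational cost of pretraining on large video data sets. Some deep learning techniques, such as I3D two-stream convolutional networks [1], have shown improved performance by leveraging pretraining on large image classification data sets.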

Load Data

This example uses the HMDB51 data set. Use the downloadHMDB51 supporting function to download the HMDB51 data set to a folder named hmdb51.

downloadFolder = fullfile(tempdir,"hmdb51");
downloadHMDB51(downloadFolder);

After the download is complete, extract the RAR file hmdb51_org.rar to the hmdb51 folder. Next, use the checkForHMDB51Folder supporting function, listed at the end of this example, to confirm that the downloaded and extracted files are in place.

allClasses = checkForHMDB51Folder(downloadFolder);

The data set contains about 2 GB of video data: 7000 clips across 51 classes, such as drink, run, and shake_hands. Each video frame has a height of 240 pixels and a minimum width of 176 pixels. The number of frames ranges from 18 to approximately 1000.

To reduce training time, this example trains an activity recognition network to classify 5 action classes instead of all 51 classes in the data set. Set useAllData to true to train with all 51 classes.

useAllData = false;
if useAllData
    classes = allClasses;
else
    classes = ["kiss","laugh","pick","pour","pushup"];
end
dataFolder = fullfile(downloadFolder,"hmdb51_org");

Split the data set into a training set for training the network and a test set for evaluating the network. Use 80% of the data for the training set and the rest for the test set. Use imageDatastore to split the data by label, randomly selecting the given proportion of files from each label.

imds = imageDatastore(fullfile(dataFolder,classes), ...
    "IncludeSubfolders",true, ...
    "LabelSource","foldernames", ...
    "FileExtensions",".avi");
[trainImds,testImds] = splitEachLabel(imds,0.8,"randomized");
trainFilenames = trainImds.Files;
testFilenames = testImds.Files;

To normalize the input data for the network, the minimum and maximum values for the data set are provided in the MAT file inputStatistics.mat, attached to this example. To find the minimum and maximum values for a different data set, use the inputStatistics supporting function, listed at the end of this example.

inputStatsFilename = "inputStatistics.mat";
if ~exist(inputStatsFilename,"file")
    disp("Reading all the training data for input statistics...")
    inputStats = inputStatistics(dataFolder);
else
    d = load(inputStatsFilename);
    inputStats = d.inputStats;
end

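Create Datastores for Training Network

Create two FileDatastore objects for training and validation by using the createFileDatastore supporting function, defined at the end of this example. Each datastore reads a video file to provide RGB data, optical flow data, and the corresponding label information.

Specify the number of frames for each read by the datastore. Typical values are 16, 32, 64, or 128. Using more frames helps capture more temporal information, but requires more memory for training and prediction. Set the number of frames to 64 to balance memory usage against performance. You might need to lower this value depending on your system resources.

numFrames = 64;

Specify the height and width of the frames for the datastore to read. Fixing the height and width to the same value makes batching data for the network easier. Typical values are [112, 112], [224, 224], and [256, 256]. The minimum height and width of the video frames in the HMDB51 data set are 240 and 176, respectively. Specify [112, 112] to capture a longer sequence of frames at the cost of spatial information. If you want to specify a frame size for the datastore that is larger than the minimum values, such as [256, 256], first resize the frames using imresize.

frameSize = [112,112];

Set inputSize in the inputStats structure so that the read function of fileDatastore can read the specified input size.

inputSize = [frameSize,numFrames];
inputStats.inputSize = inputSize;
inputStats.Classes = classes;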
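As a rough aid for choosing numFrames and frameSize, the following sketch estimates the memory needed just to hold one mini-batch of network inputs in single precision. It assumes 3 RGB channels, 2 optical flow channels, and a mini-batch size of 20; activations, gradients, and optimizer state are not counted, so actual training memory is considerably higher.

```matlab
% Back-of-the-envelope input memory estimate (illustrative only).
frameSize = [112,112];
numFrames = 64;
rgbChannels = 3;        % assumed: RGB stream
flowChannels = 2;       % assumed: optical flow stream
miniBatchSize = 20;     % assumed mini-batch size
bytesPerSingle = 4;

% Number of values in one clip across both streams.
elementsPerClip = prod(frameSize)*numFrames*(rgbChannels + flowChannels);
bytesPerClip = elementsPerClip*bytesPerSingle;
bytesPerBatch = bytesPerClip*miniBatchSize;

fprintf('Per clip:  %.2f MiB\n',bytesPerClip/2^20);
fprintf('Per batch: %.2f MiB\n',bytesPerBatch/2^20);
```

Because the estimate is linear in each factor, halving numFrames or the frame area halves the input memory.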

Create two FileDatastore objects, one for training and another for validation.

isDataForValidation = false;
dsTrain = createFileDatastore(trainFilenames,inputStats,isDataForValidation);
isDataForValidation = true;
dsVal = createFileDatastore(testFilenames,inputStats,isDataForValidation);
disp("Training data size: " + string(numel(dsTrain.Files)))

Training data size: 436

disp("Validation data size: " + string(numel(dsVal.Files)))

Validation data size: 109

Define Network Architecture

I3D Network

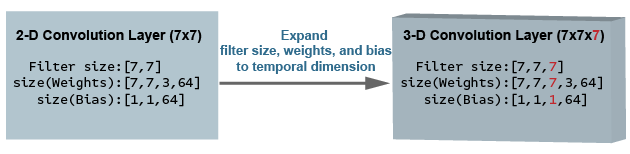

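Using a 3-D CNN is a natural approach to extracting spatio-temporal features from videos. You can create an I3D network from a pretrained 2-D image classification network, such as Inception v1 or ResNet-50, by expanding the 2-D filters and pooling kernels into 3-D. This procedure reuses the weights learned from the image classification task to bootstrap the video recognition task.

The accompanying figure shows a sample of how to inflate a 2-D convolution layer into a 3-D convolution layer. Inflation involves expanding the filter size, weights, and bias by adding a third dimension (the temporal dimension).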
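The inflation step can be sketched on plain arrays. The sketch below assumes the scheme commonly used for I3D, in which the 2-D weights are repeated along the new temporal dimension and divided by the temporal depth; whether the Inflated3D supporting function does exactly this is not shown in this example, and the filter sizes and variable names here are hypothetical.

```matlab
% Illustrative inflation of 2-D convolution weights into 3-D weights.
% Assumption: repeat along time and divide by the temporal depth.
filterHeight = 3;
filterWidth = 3;
numChannels = 3;
numFilters = 8;
temporalDepth = 3;      % hypothetical temporal filter size

weights2d = rand(filterHeight,filterWidth,numChannels,numFilters,'single');
bias2d = rand(1,1,numFilters,'single');

% Insert a singleton temporal dimension, then replicate along it.
weights3d = reshape(weights2d,[filterHeight,filterWidth,1,numChannels,numFilters]);
weights3d = repmat(weights3d,[1,1,temporalDepth,1,1])/temporalDepth;

% The bias gains a singleton temporal dimension but keeps its values.
bias3d = reshape(bias2d,[1,1,1,numFilters]);

% Summing the inflated weights over time recovers the 2-D filter.
recovered = squeeze(sum(weights3d,3));
disp(max(abs(recovered - weights2d),[],'all'))
```

Dividing by the temporal depth keeps the filter response on a clip of identical frames close to the response of the original 2-D filter on a single frame, which is what lets the pretrained image weights serve as a starting point.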

Two-Stream I3D Network

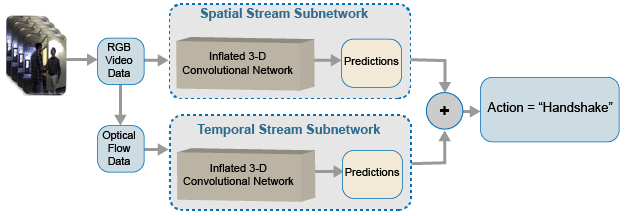

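Video data can be considered to have two parts: a spatial component and a temporal component.

The spatial component comprises information about the shape, texture, and color of objects in the video. RGB data contains this information.

The temporal component comprises information about the motion of objects across frames and depicts important movements between the camera and the objects in a scene. Computing optical flow is a common technique for extracting temporal information from video.

A two-stream CNN incorporates a spatial subnetwork and a temporal subnetwork [2]. A convolutional neural network trained on dense optical flow and a video data stream can achieve better performance with limited training data than one trained on raw stacked RGB frames. The accompanying illustration shows a typical two-stream I3D network.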

Create Two-Stream I3D Network

In this example, you create an I3D network using GoogLeNet, a network pretrained on the ImageNet database.

Specify the number of channels as 3 for the RGB subnetwork and 2 for the optical flow subnetwork. The two channels of the optical flow data are the x and y components of velocity, Vx and Vy, respectively.

rgbChannels = 3;
flowChannels = 2;

Obtain the minimum and maximum values for the RGB and optical flow data from the inputStats structure loaded from the inputStatistics.mat file. The image3dInputLayer of each I3D network needs these values to normalize the input data.

rgbInputSize = [frameSize,numFrames,rgbChannels];
flowInputSize = [frameSize,numFrames,flowChannels];
rgbMin = inputStats.rgbMin;
rgbMax = inputStats.rgbMax;
oflowMin = inputStats.oflowMin(:,:,1:2);
oflowMax = inputStats.oflowMax(:,:,1:2);
rgbMin = reshape(rgbMin,[1,size(rgbMin)]);
rgbMax = reshape(rgbMax,[1,size(rgbMax)]);
oflowMin = reshape(oflowMin,[1,size(oflowMin)]);
oflowMax = reshape(oflowMax,[1,size(oflowMax)]);

Specify the number of classes for training the network.

numClasses = numel(classes);

Create the I3D RGB and optical flow subnetworks by using the Inflated3D supporting function, which is attached to this example. The subnetworks are created from GoogLeNet.

cnnNet = googlenet;
netRGB = Inflated3D(numClasses,rgbInputSize,rgbMin,rgbMax,cnnNet);
netFlow = Inflated3D(numClasses,flowInputSize,oflowMin,oflowMax,cnnNet);

Create a dlnetwork object from the layer graph of each of the I3D networks.

dlnetRGB = dlnetwork(netRGB);
dlnetFlow = dlnetwork(netFlow);

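Define Model Gradients Function

Create the supporting function modelGradients, listed at the end of this example. The modelGradients function takes as input the RGB subnetwork dlnetRGB, the optical flow subnetwork dlnetFlow, mini-batches of input data dlRGB and dlFlow, and a mini-batch of ground truth label data dlY. The function returns the training loss value, the gradients of the loss with respect to the learnable parameters of the respective subnetworks, and the mini-batch accuracy of the subnetworks.

The loss is calculated by computing the average of the cross-entropy losses of the predictions from each of the subnetworks. The output predictions of the network are probabilities between 0 and 1 for each of the classes.

The fused accuracy is calculated by taking the average of the RGB and optical flow predictions and comparing it to the ground truth labels of the inputs.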
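The loss and accuracy computations can be illustrated on plain numeric arrays. The following is a minimal sketch with made-up class probabilities for three classes and two observations; it assumes the cross-entropy is averaged over the observations in the mini-batch and does not use dlarray or the networks themselves.

```matlab
% Toy class probabilities (classes x batch) from the two streams.
probRGB  = [0.7 0.2; 0.2 0.5; 0.1 0.3];
probFlow = [0.5 0.1; 0.3 0.3; 0.2 0.6];
targets  = [1 0; 0 0; 0 1];      % one-hot ground truth

% Cross-entropy per stream, averaged over the mini-batch (assumption).
numObservations = size(targets,2);
lossRGB  = -sum(targets.*log(probRGB),'all')/numObservations;
lossFlow = -sum(targets.*log(probFlow),'all')/numObservations;

% Fused loss: mean of the two stream losses.
loss = mean([lossRGB,lossFlow]);

% Fused prediction: average the probabilities, then take the arg max.
probFused = (probRGB + probFlow)/2;
[~,trueIdx]  = max(targets,[],1);
[~,fusedIdx] = max(probFused,[],1);
[~,rgbIdx]   = max(probRGB,[],1);

accFused = mean(fusedIdx == trueIdx);
accRGB   = mean(rgbIdx == trueIdx);
```

In this toy case the RGB stream alone misclassifies the second observation, while the averaged prediction classifies both correctly, which is the motivation for fusing the two streams.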

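Specify Training Options

Train with a mini-batch size of 20 for 1500 iterations. Specify the iteration after which to save the model with the best validation accuracy by using the SaveBestAfterIteration parameter.

Specify the cosine-annealing learning rate schedule [3] parameters. For both networks, use:

A minimum learning rate of 1e-4.

A maximum learning rate of 1e-3.

Cosine numbers of iterations of 300, 500, and 700, after which the learning rate schedule cycle restarts. The option CosineNumIterations defines the width of each cosine cycle.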
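To make the schedule concrete, the following sketch computes the learning rate for every iteration using the values above. It follows the same arithmetic as the cosineAnnealingLearnRate supporting function listed later in this example, but the variable names are chosen here for illustration.

```matlab
% Sketch of the warm-restart cosine schedule (values from this example).
minLearnRate = 1e-4;
maxLearnRate = 1e-3;
cycleLengths = [300,500,700];           % width of each cosine cycle
numIterations = sum(cycleLengths);      % 1500 iterations in total

cycleEnds = cumsum([0,cycleLengths]);   % [0 300 800 1500]
learnRate = zeros(1,numIterations);
for iteration = 1:numIterations
    % Locate the cycle that contains this iteration.
    block = find(cycleEnds >= iteration,1,'first');
    cycleLength = cycleLengths(block - 1);
    position = mod(iteration - cycleEnds(block - 1),cycleLength);

    % Half-cosine decay from the maximum toward the minimum.
    learnRate(iteration) = minLearnRate + ...
        (maxLearnRate - minLearnRate)*(1 + cos(pi*position/cycleLength))/2;
end

% The final iteration is pinned to the minimum learning rate.
learnRate(end) = minLearnRate;
```

Calling plot(learnRate) on the result shows three progressively wider cosine decays, each restarting near the maximum learning rate.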

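Specify the parameters for SGDM optimization. Initialize the SGDM optimization parameters at the beginning of training for each of the RGB and optical flow networks. For both networks, use:

A momentum of 0.9.

An initial velocity parameter initialized as [].

An L2 regularization factor of 0.0005.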
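The effect of these settings can be sketched with a single update step on a plain weight vector. This is a simplified illustration of SGD with momentum and L2 regularization, not the sgdmupdate call that the example uses on dlnetwork objects; the weights and gradient values are made up, and a zero vector stands in for the empty initial velocity.

```matlab
% One SGDM step with L2 regularization on toy values (illustrative).
learnRate = 1e-3;
momentum = 0.9;
l2Factor = 0.0005;

weights = [1; -2];                  % hypothetical weights
gradient = [0.5; 0.5];              % hypothetical loss gradient
velocity = zeros(size(weights));    % stands in for the [] initial velocity

% L2 regularization adds a multiple of the weights to the gradient.
regularizedGradient = gradient + l2Factor*weights;

% Momentum update: blend the previous velocity with the new step.
velocity = momentum*velocity - learnRate*regularizedGradient;
weights = weights + velocity;
```

On later steps the velocity carries 90% of the previous update forward, which smooths the trajectory across noisy mini-batch gradients.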

Specify whether to dispatch the data in the background using a parallel pool. If DispatchInBackground is set to true, the example opens a parallel pool with the specified number of parallel workers and creates a DispatchInBackgroundDatastore, which dispatches the data in the background to speed up training through asynchronous data loading and preprocessing. By default, this example uses a GPU if one is available. Otherwise, it uses a CPU. Using a GPU requires Parallel Computing Toolbox™ and a CUDA® enabled NVIDIA® GPU. For information about the supported compute capabilities, see GPU Support by Release (Parallel Computing Toolbox).

params.Classes = classes;
params.MiniBatchSize = 20;
params.NumIterations = 1500;
params.SaveBestAfterIteration = 900;
params.CosineNumIterations = [300, 500, 700];
params.MinLearningRate = 1e-4;
params.MaxLearningRate = 1e-3;
params.Momentum = 0.9;
params.VelocityRGB = [];
params.VelocityFlow = [];
params.L2Regularization = 0.0005;
params.ProgressPlot = false;
params.Verbose = true;
params.ValidationData = dsVal;
params.DispatchInBackground = false;
params.NumWorkers = 4;

Train Network

Train the subnetworks using the RGB data and optical flow data. Set the doTraining variable to false to download the pretrained subnetworks without having to wait for training to complete. Alternatively, if you want to train the subnetworks, set the doTraining variable to true.

doTraining = false;

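For each epoch:

Shuffle the data before looping over mini-batches of data.

Use minibatchqueue to loop over the mini-batches. The supporting function createMiniBatchQueue, listed at the end of this example, uses the given training datastore to create a minibatchqueue.

Use the validation data dsVal to validate the networks.

Display the loss and accuracy results for each epoch using the supporting function displayVerboseOutputEveryEpoch, listed at the end of this example.

For each mini-batch:

Convert the image data or optical flow data and the labels to dlarray objects with the underlying type single.

Treat the temporal dimension of the video and optical flow data as one of the spatial dimensions to enable processing using a 3-D CNN. Specify the dimension labels "SSSCB" (spatial, spatial, spatial, channel, batch) for the RGB or optical flow data, and "CB" for the label data.

The minibatchqueue object uses the supporting function batchRGBAndFlow, listed at the end of this example, to batch the RGB and optical flow data.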
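The data layout described above can be checked on small numeric arrays before any dlarray is involved. The sketch below uses toy sizes and hypothetical label indices; it only demonstrates the dimension ordering, not the actual batching function.

```matlab
% Illustrative batching of clips into an "SSSCB"-shaped numeric array.
frameHeight = 4;
frameWidth = 4;
numFrames = 6;
numChannels = 3;        % toy sizes, much smaller than real clips

% Clips as read from video: height x width x channels x frames.
clips = {rand(frameHeight,frameWidth,numChannels,numFrames), ...
         rand(frameHeight,frameWidth,numChannels,numFrames)};

% Move frames ahead of channels: height x width x frames x channels.
clips = cellfun(@(c) permute(c,[1,2,4,3]),clips,'UniformOutput',false);

% Concatenate observations along dimension 5 (the batch dimension).
X = single(cat(5,clips{:}));
disp(size(X))

% One-hot labels are classes x batch, matching the "CB" layout.
labels = [2,1];         % hypothetical class indices
numClasses = 5;
Y = zeros(numClasses,numel(labels),'single');
Y(sub2ind(size(Y),labels,1:numel(labels))) = 1;
```

With Deep Learning Toolbox available, dlarray(X,"SSSCB") and dlarray(Y,"CB") attach the dimension labels to arrays laid out this way.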

modelFilename = "I3D-RGBFlow-" + numClasses + "Classes-hmdb51.mat";
if doTraining
    epoch = 1;
    bestValAccuracy = 0;
    accTrain = [];
    accTrainRGB = [];
    accTrainFlow = [];
    lossTrain = [];

    iteration = 1;
    shuffled = shuffleTrainDs(dsTrain);

    % Number of outputs is three: one for RGB frames, one for optical flow
    % data, and one for ground truth labels.
    numOutputs = 3;
    mbq = createMiniBatchQueue(shuffled,numOutputs,params);
    start = tic;
    trainTime = start;

    % Use the initializeTrainingProgressPlot and initializeVerboseOutput
    % supporting functions, listed at the end of the example, to initialize
    % the training progress plot and verbose output to display the training
    % loss, training accuracy, and validation accuracy.
    plotters = initializeTrainingProgressPlot(params);
    initializeVerboseOutput(params);

    while iteration <= params.NumIterations

        % Iterate through the data set.
        [dlX1,dlX2,dlY] = next(mbq);

        % Evaluate the model gradients and loss using dlfeval.
        [gradRGB,gradFlow,loss,acc,accRGB,accFlow,stateRGB,stateFlow] = ...
            dlfeval(@modelGradients,dlnetRGB,dlnetFlow,dlX1,dlX2,dlY);

        % Accumulate the loss and accuracies.
        lossTrain = [lossTrain,loss];
        accTrain = [accTrain,acc];
        accTrainRGB = [accTrainRGB,accRGB];
        accTrainFlow = [accTrainFlow,accFlow];

        % Update the network state.
        dlnetRGB.State = stateRGB;
        dlnetFlow.State = stateFlow;

        % Update the gradients and parameters for the RGB and optical flow
        % subnetworks using the SGDM optimizer.
        [dlnetRGB,gradRGB,params.VelocityRGB,learnRate] = ...
            updateDlNetwork(dlnetRGB,gradRGB,params,params.VelocityRGB,iteration);
        [dlnetFlow,gradFlow,params.VelocityFlow] = ...
            updateDlNetwork(dlnetFlow,gradFlow,params,params.VelocityFlow,iteration);

        if ~hasdata(mbq) || iteration == params.NumIterations
            % Current epoch is complete. Do validation and update progress.
            trainTime = toc(trainTime);

            [validationTime,cmat,lossValidation,accValidation,accValidationRGB,accValidationFlow] = ...
                doValidation(params,dlnetRGB,dlnetFlow);

            % Update the training progress.
            displayVerboseOutputEveryEpoch(params,start,learnRate,epoch,iteration, ...
                mean(accTrain),mean(accTrainRGB),mean(accTrainFlow), ...
                accValidation,accValidationRGB,accValidationFlow, ...
                mean(lossTrain),lossValidation,trainTime,validationTime);
            updateProgressPlot(params,plotters,epoch,iteration,start,mean(lossTrain),mean(accTrain),accValidation);

            % Save the model with the trained dlnetwork and accuracy values.
            % Use the saveData supporting function, listed at the
            % end of this example.
            if iteration >= params.SaveBestAfterIteration
                if accValidation > bestValAccuracy
                    bestValAccuracy = accValidation;
                    saveData(modelFilename,dlnetRGB,dlnetFlow,cmat,accValidation);
                end
            end
        end

        if ~hasdata(mbq) && iteration < params.NumIterations
            % Current epoch is complete. Initialize the training loss, accuracy
            % values, and minibatchqueue for the next epoch.
            accTrain = [];
            accTrainRGB = [];
            accTrainFlow = [];
            lossTrain = [];

            trainTime = tic;
            epoch = epoch + 1;
            shuffled = shuffleTrainDs(dsTrain);
            numOutputs = 3;
            mbq = createMiniBatchQueue(shuffled,numOutputs,params);
        end

        iteration = iteration + 1;
    end

    % Display a message when training is complete.
    endVerboseOutput(params);

    disp("Model saved to: " + modelFilename);
end

% Download the pretrained model and video file for prediction.
filename = "activityRecognition-I3D-HMDB51.zip";
downloadURL = "https://ssd.mathworks.com/supportfiles/vision/data/" + filename;

filename = fullfile(downloadFolder,filename);
if ~exist(filename,"file")
    disp("Downloading the pretrained network...");
    websave(filename,downloadURL);
end

% Unzip the contents to the download folder.
unzip(filename,downloadFolder);
if ~doTraining
    modelFilename = fullfile(downloadFolder,modelFilename);
end

Evaluate Trained Network

Use the test data set to evaluate the accuracy of the trained subnetworks.

Load the best model saved during training.

d = load(modelFilename);
dlnetRGB = d.data.dlnetRGB;
dlnetFlow = d.data.dlnetFlow;

Create a minibatchqueue object to load batches of the test data.

numOutputs = 3;
mbq = createMiniBatchQueue(params.ValidationData,numOutputs,params);

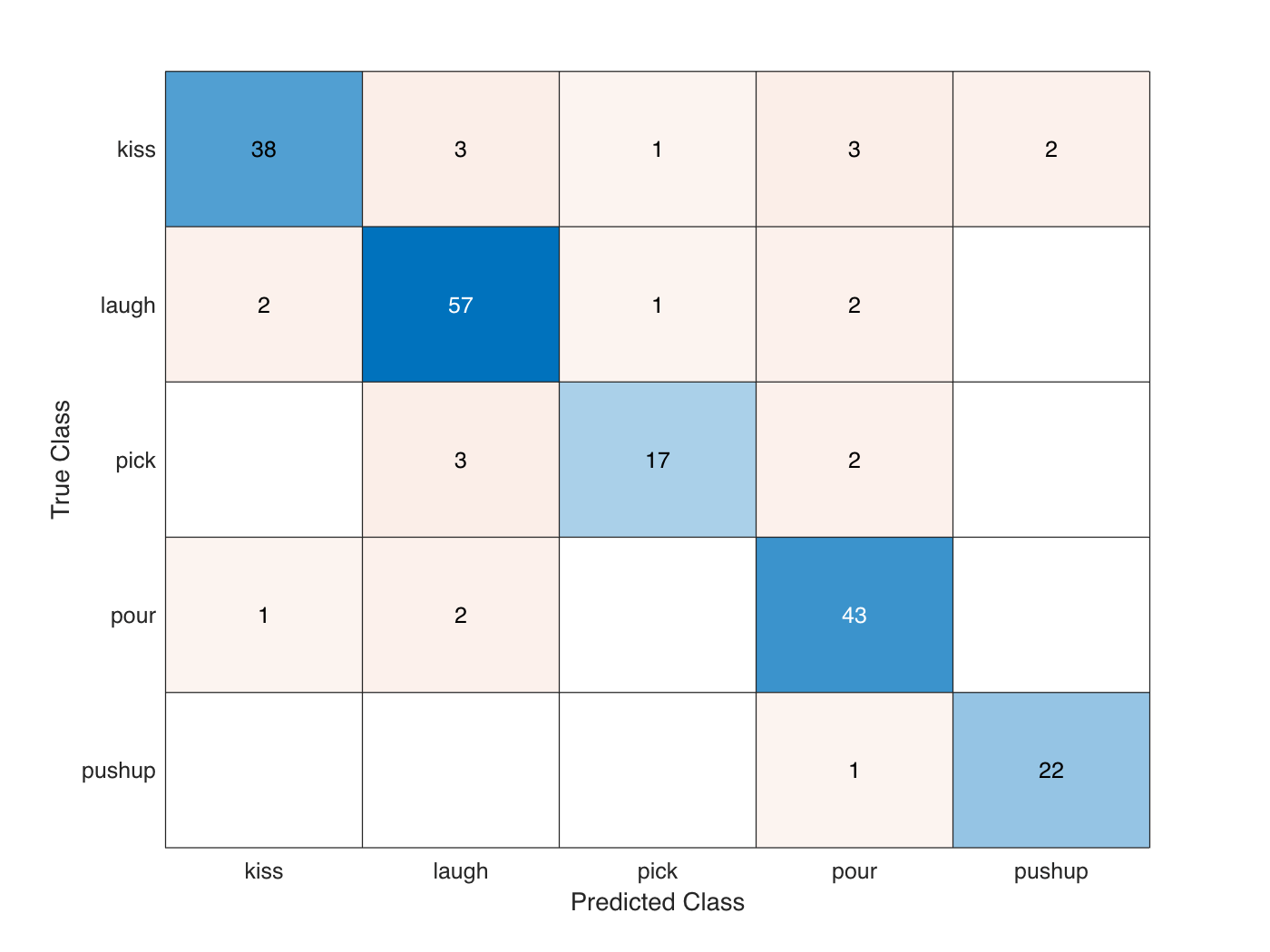

For each batch of test data, make predictions using the RGB and optical flow networks, take the average of the predictions, and compute the prediction accuracy using a confusion matrix.

cmat = sparse(numClasses,numClasses);
while hasdata(mbq)
    [dlRGB,dlFlow,dlY] = next(mbq);

    % Pass the video input as RGB and optical flow data through the
    % two-stream subnetworks to get the separate predictions.
    dlYPredRGB = predict(dlnetRGB,dlRGB);
    dlYPredFlow = predict(dlnetFlow,dlFlow);

    % Fuse the predictions by calculating the average of the predictions.
    dlYPred = (dlYPredRGB + dlYPredFlow)/2;

    % Calculate the accuracy of the predictions.
    [~,YTest] = max(dlY,[],1);
    [~,YPred] = max(dlYPred,[],1);
    cmat = aggregateConfusionMetric(cmat,YTest,YPred);
end

Compute the average classification accuracy for the trained networks.

accuracyEval = sum(diag(cmat))./sum(cmat,"all")

accuracyEval = 0.60909
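The way the confusion matrix accumulates across batches, and how the accuracy falls out of it, can be seen with a small two-class example. The labels and predictions below are made up, and the accumulation mirrors the sparse-matrix trick used by the aggregateConfusionMetric supporting function.

```matlab
% Accumulate a confusion matrix over two toy batches (illustrative).
numClasses = 2;
confusion = zeros(numClasses);

trueBatches = {[1 1 2],[2 2]};      % hypothetical ground truth indices
predBatches = {[1 2 2],[2 1]};      % hypothetical predicted indices

for k = 1:numel(trueBatches)
    % sparse sums the ones that share a (true,predicted) index pair.
    batchCounts = sparse(trueBatches{k},predBatches{k},1,numClasses,numClasses);
    confusion = confusion + full(batchCounts);
end

% Correct predictions lie on the diagonal.
accuracy = sum(diag(confusion))/sum(confusion,'all');
```

Rows index the true class and columns the predicted class, so off-diagonal entries show which classes are confused with each other.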

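Display the confusion matrix.

figure
chart = confusionchart(cmat,classes);

Due to the limited number of training samples, increasing the accuracy beyond 61% is challenging. To improve the robustness of the network, additional training with a large data set is required. In addition, pretraining on a larger data set, such as Kinetics [1], can help improve results.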

Predict Using New Video

You can now use the trained networks to predict actions in new videos. Read and display the video pour.avi using VideoReader and vision.VideoPlayer.

videoFilename = fullfile(downloadFolder,"pour.avi");
videoReader = VideoReader(videoFilename);
videoPlayer = vision.VideoPlayer;
videoPlayer.Name = "pour";
while hasFrame(videoReader)
    frame = readFrame(videoReader);
    step(videoPlayer,frame);
end
release(videoPlayer);

Use the readRGBAndFlow supporting function, listed at the end of this example, to read the RGB and optical flow data.

isDataForValidation = true;
readFcn = @(f,u)readRGBAndFlow(f,u,inputStats,isDataForValidation);

The read function returns a logical isDone value that indicates whether there is more data to read from the file. Use the batchRGBAndFlow supporting function, defined at the end of this example, to batch the data and pass it through the two-stream subnetworks to get the predictions.

hasdata = true;
userdata = [];
YPred = [];
while hasdata
    [data,userdata,isDone] = readFcn(videoFilename,userdata);

    [dlRGB,dlFlow] = batchRGBAndFlow(data(:,1),data(:,2),data(:,3));

    % Pass video input as RGB and optical flow data through the two-stream
    % subnetworks to get the separate predictions.
    dlYPredRGB = predict(dlnetRGB,dlRGB);
    dlYPredFlow = predict(dlnetFlow,dlFlow);

    % Fuse the predictions by calculating the average of the predictions.
    dlYPred = (dlYPredRGB + dlYPredFlow)/2;
    [~,YPredCurr] = max(dlYPred,[],1);
    YPred = horzcat(YPred,YPredCurr);
    hasdata = ~isDone;
end
YPred = extractdata(YPred);

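Count the predictions for each class using histcounts, and take the class with the largest number of predictions as the predicted action.

classes = params.Classes;
% Use numel(classes)+1 edges so that every class index gets its own bin.
counts = histcounts(YPred,1:numel(classes)+1);
[~,clsIdx] = max(counts);
action = classes(clsIdx)

action = "pour"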
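The voting step can be tried on its own with made-up per-clip predictions. This sketch uses half-integer bin edges, a choice made here for illustration, so that each class index falls in the middle of its own bin; histcounts includes the right edge only in the last bin, so edges placed exactly on the class indices need one more edge than there are classes.

```matlab
% Majority vote over per-clip predictions (illustrative values).
classes = ["kiss","laugh","pick","pour","pushup"];
clipPredictions = [4 4 5 4 2];      % hypothetical predicted class indices

% One bin per class, centered on the integer class index.
edges = 0.5:1:(numel(classes) + 0.5);
votes = histcounts(clipPredictions,edges);

% The class with the most votes is the predicted action.
[~,winner] = max(votes);
action = classes(winner);
disp(action)
```

Ties are resolved in favor of the lowest class index, because max returns the first maximum it finds.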

Supporting Functions

inputStatistics

The inputStatistics function takes as input the name of the folder containing the HMDB51 data, and calculates the minimum and maximum values for the RGB data and the optical flow data. The minimum and maximum values are used as normalization inputs to the input layer of the networks. This function also obtains the number of frames in each of the video files to use later when training and testing the network. To find the minimum and maximum values for a different data set, call this function with the name of the folder containing that data set.

function inputStats = inputStatistics(dataFolder)
    ds = createDatastore(dataFolder);
    ds.ReadFcn = @getMinMax;

    tic;
    tt = tall(ds);
    varnames = {'rgbMax','rgbMin','oflowMax','oflowMin'};
    stats = gather(groupsummary(tt,[],{'max','min'},varnames));
    inputStats.Filename = gather(tt.Filename);
    inputStats.NumFrames = gather(tt.NumFrames);
    inputStats.rgbMax = stats.max_rgbMax;
    inputStats.rgbMin = stats.min_rgbMin;
    inputStats.oflowMax = stats.max_oflowMax;
    inputStats.oflowMin = stats.min_oflowMin;
    save('inputStatistics.mat','inputStats');
    toc;
end

function data = getMinMax(filename)
    reader = VideoReader(filename);
    opticFlow = opticalFlowFarneback;
    data = [];
    while hasFrame(reader)
        frame = readFrame(reader);
        [rgb,oflow] = findMinMax(frame,opticFlow);
        data = assignMinMax(data,rgb,oflow);
    end

    totalFrames = floor(reader.Duration * reader.FrameRate);
    totalFrames = min(totalFrames,reader.NumFrames);

    [labelName,filename] = getLabelFilename(filename);
    data.Filename = fullfile(labelName,filename);
    data.NumFrames = totalFrames;

    data = struct2table(data,'AsArray',true);
end

function data = assignMinMax(data,rgb,oflow)
    if isempty(data)
        data.rgbMax = rgb.Max;
        data.rgbMin = rgb.Min;
        data.oflowMax = oflow.Max;
        data.oflowMin = oflow.Min;
        return;
    end
    data.rgbMax = max(data.rgbMax,rgb.Max);
    data.rgbMin = min(data.rgbMin,rgb.Min);

    data.oflowMax = max(data.oflowMax,oflow.Max);
    data.oflowMin = min(data.oflowMin,oflow.Min);
end

function [rgbMinMax,oflowMinMax] = findMinMax(rgb,opticFlow)
    rgbMinMax.Max = max(rgb,[],[1,2]);
    rgbMinMax.Min = min(rgb,[],[1,2]);

    gray = rgb2gray(rgb);
    flow = estimateFlow(opticFlow,gray);
    oflow = cat(3,flow.Vx,flow.Vy,flow.Magnitude);

    oflowMinMax.Max = max(oflow,[],[1,2]);
    oflowMinMax.Min = min(oflow,[],[1,2]);
end

function ds = createDatastore(folder)
    ds = fileDatastore(folder, ...
        'IncludeSubfolders',true, ...
        'FileExtensions','.avi', ...
        'UniformRead',true, ...
        'ReadFcn',@getMinMax);
    disp("NumFiles: " + numel(ds.Files));
end

createFileDatastore

The createFileDatastore function creates a FileDatastore object using the given file names. The FileDatastore object reads the data in 'partialfile' mode, so each read can return only part of the frames of a video. This feature helps when reading large video files whose frames do not all fit in memory.

function datastore = createFileDatastore(filenames,inputStats,isDataForValidation)
    readFcn = @(f,u)readRGBAndFlow(f,u,inputStats,isDataForValidation);
    datastore = fileDatastore(filenames, ...
        'ReadFcn',readFcn, ...
        'ReadMode','partialfile');
end

readRGBAndFlow

The readRGBAndFlow function reads RGB frames, the corresponding optical flow data, and the label values for a given video file. During training, the read function reads the specific number of frames required by the network input size, starting at a randomly chosen frame. The optical flow data is calculated from the beginning of the video file, but is discarded until the starting frame is reached. During testing, all the frames are read sequentially and the corresponding optical flow data is calculated. The RGB frames and the optical flow data are randomly cropped to the required network input size for training, and center cropped for testing and validation.

function [data,userdata,done] = readRGBAndFlow(filename,userdata,inputStats,isDataForValidation)
    if isempty(userdata)
        userdata.reader      = VideoReader(filename);
        userdata.batchesRead = 0;
        userdata.opticalFlow = opticalFlowFarneback;

        [totalFrames,userdata.label] = getTotalFramesAndLabel(inputStats,filename);
        if isempty(totalFrames)
            totalFrames = floor(userdata.reader.Duration * userdata.reader.FrameRate);
            totalFrames = min(totalFrames,userdata.reader.NumFrames);
        end
        userdata.totalFrames = totalFrames;
    end
    reader      = userdata.reader;
    totalFrames = userdata.totalFrames;
    label       = userdata.label;
    batchesRead = userdata.batchesRead;
    opticalFlow = userdata.opticalFlow;

    inputSize = inputStats.inputSize;
    H = inputSize(1);
    W = inputSize(2);
    rgbC = 3;
    flowC = 2;
    numFrames = inputSize(3);

    if numFrames > totalFrames
        numBatches = 1;
    else
        numBatches = floor(totalFrames/numFrames);
    end

    imH = userdata.reader.Height;
    imW = userdata.reader.Width;
    imsz = [imH,imW];

    if ~isDataForValidation

        augmentFcn = augmentTransform([imsz,3]);
        cropWindow = randomCropWindow2d(imsz,inputSize(1:2));
        % 1. Randomly select the required number of frames,
        %    starting randomly at a specific frame.
        if numFrames >= totalFrames
            idx = 1:totalFrames;
            % Add more frames to fill in the network input size.
            additional = ceil(numFrames/totalFrames);
            idx = repmat(idx,1,additional);
            idx = idx(1:numFrames);
        else
            startIdx = randperm(totalFrames - numFrames);
            startIdx = startIdx(1);
            endIdx = startIdx + numFrames - 1;
            idx = startIdx:endIdx;
        end

        video = zeros(H,W,rgbC,numFrames);
        oflow = zeros(H,W,flowC,numFrames);
        i = 1;
        % Discard the first set of frames to initialize the optical flow.
        for ii = 1:idx(1)-1
            frame = read(reader,ii);
            getRGBAndFlow(frame,opticalFlow,augmentFcn,cropWindow);
        end
        % Read the next set of required number of frames for training.
        for ii = idx
            frame = read(reader,ii);
            [rgb,vxvy] = getRGBAndFlow(frame,opticalFlow,augmentFcn,cropWindow);
            video(:,:,:,i) = rgb;
            oflow(:,:,:,i) = vxvy;
            i = i + 1;
        end
    else
        augmentFcn = @(data)(data);
        cropWindow = centerCropWindow2d(imsz,inputSize(1:2));
        toRead = min([numFrames,totalFrames]);
        video = zeros(H,W,rgbC,toRead);
        oflow = zeros(H,W,flowC,toRead);
        i = 1;
        while hasFrame(reader) && i <= numFrames
            frame = readFrame(reader);
            [rgb,vxvy] = getRGBAndFlow(frame,opticalFlow,augmentFcn,cropWindow);
            video(:,:,:,i) = rgb;
            oflow(:,:,:,i) = vxvy;
            i = i + 1;
        end
        if numFrames > totalFrames
            additional = ceil(numFrames/totalFrames);
            video = repmat(video,1,1,1,additional);
            oflow = repmat(oflow,1,1,1,additional);
            video = video(:,:,:,1:numFrames);
            oflow = oflow(:,:,:,1:numFrames);
        end
    end

    % The network expects the video and optical flow input in
    % the following dlarray format:
    % "SSSCB" ==> Height x Width x Frames x Channels x Batch
    %
    % Permute the data
    %  from
    %      Height x Width x Channels x Frames
    %  to
    %      Height x Width x Frames x Channels
    video = permute(video,[1,2,4,3]);
    oflow = permute(oflow,[1,2,4,3]);

    data = {video,oflow,label};

    batchesRead = batchesRead + 1;

    userdata.batchesRead = batchesRead;

    % Set the done flag to true if the reader has read all the frames or
    % if it is training.
    done = batchesRead == numBatches || ~isDataForValidation;
end

function [rgb,vxvy] = getRGBAndFlow(rgb,opticalFlow,augmentFcn,cropWindow)
    rgb = augmentFcn(rgb);

    gray = rgb2gray(rgb);
    flow = estimateFlow(opticalFlow,gray);
    vxvy = cat(3,flow.Vx,flow.Vy,flow.Vy);

    rgb = imcrop(rgb,cropWindow);
    vxvy = imcrop(vxvy,cropWindow);
    vxvy = vxvy(:,:,1:2);
end

function [label,fname] = getLabelFilename(filename)
    [folder,name,ext] = fileparts(string(filename));
    [~,label] = fileparts(folder);
    fname = name + ext;
    label = string(label);
    fname = string(fname);
end

function [totalFrames,label] = getTotalFramesAndLabel(info,filename)
    filenames = info.Filename;
    frames = info.NumFrames;
    [labelName,fname] = getLabelFilename(filename);
    idx = strcmp(filenames,fullfile(labelName,fname));
    totalFrames = frames(idx);
    label = categorical(string(labelName),string(info.Classes));
end
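The frame selection logic used during training can be isolated from the video reading. The sketch below reproduces the two cases with toy frame counts; it uses randi to draw the starting frame, which is a swapped-in equivalent of taking the first element of a random permutation, and its variable names are illustrative.

```matlab
% Frame index selection for training clips (illustrative sizes).
numFrames = 8;          % frames required by the network input

% Case 1: the clip is shorter than the input, so tile its frames.
totalFrames = 3;
repeats = ceil(numFrames/totalFrames);
shortIdx = repmat(1:totalFrames,1,repeats);
shortIdx = shortIdx(1:numFrames);

% Case 2: the clip is longer, so take a random contiguous window.
totalFrames = 20;
startIdx = randi(totalFrames - numFrames);
longIdx = startIdx:(startIdx + numFrames - 1);

disp(shortIdx)
disp(longIdx)
```

Because the start index is at most totalFrames - numFrames, the window always ends before the last frame of the clip.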

augmentTransform

The augmentTransform function creates an augmentation method with random left-right flipping and scaling factors.

function augmentFcn = augmentTransform(sz)
    % Randomly flip and scale the image.
    tform = randomAffine2d('XReflection',true,'Scale',[1 1.1]);
    rout = affineOutputView(sz,tform,'BoundsStyle','CenterOutput');

    augmentFcn = @(data)augmentData(data,tform,rout);

    function data = augmentData(data,tform,rout)
        data = imwarp(data,tform,'OutputView',rout);
    end
end

modelGradients

The modelGradients function takes as input a mini-batch of RGB data dlRGB, the corresponding optical flow data dlFlow, and the corresponding targets dlY, and returns the corresponding loss, the gradients of the loss with respect to the learnable parameters, and the training accuracy. To compute the gradients, evaluate the modelGradients function using the dlfeval function in the training loop.

function [gradientsRGB,gradientsFlow,loss,acc,accRGB,accFlow,stateRGB,stateFlow] = modelGradients(dlnetRGB,dlnetFlow,dlRGB,dlFlow,Y)

    % Pass video input as RGB and optical flow data through the two-stream
    % network.
    [dlYPredRGB,stateRGB] = forward(dlnetRGB,dlRGB);
    [dlYPredFlow,stateFlow] = forward(dlnetFlow,dlFlow);

    % Calculate the fused loss, gradients, and accuracy for the two-stream
    % predictions.
    rgbLoss = crossentropy(dlYPredRGB,Y);
    flowLoss = crossentropy(dlYPredFlow,Y);

    % Fuse the losses.
    loss = mean([rgbLoss,flowLoss]);

    gradientsRGB = dlgradient(loss,dlnetRGB.Learnables);
    gradientsFlow = dlgradient(loss,dlnetFlow.Learnables);

    % Fuse the predictions by calculating the average of the predictions.
    dlYPred = (dlYPredRGB + dlYPredFlow)/2;

    % Calculate the accuracy of the predictions.
    [~,YTest] = max(Y,[],1);
    [~,YPred] = max(dlYPred,[],1);

    acc = gather(extractdata(sum(YTest == YPred)./numel(YTest)));

    % Calculate the accuracy of the RGB and flow predictions.
    [~,YTest] = max(Y,[],1);
    [~,YPredRGB] = max(dlYPredRGB,[],1);
    [~,YPredFlow] = max(dlYPredFlow,[],1);

    accRGB = gather(extractdata(sum(YTest == YPredRGB)./numel(YTest)));
    accFlow = gather(extractdata(sum(YTest == YPredFlow)./numel(YTest)));
end

doValidation

The doValidation function validates the network using the validation data.

function [validationTime,cmat,lossValidation,accValidation,accValidationRGB,accValidationFlow] = doValidation(params,dlnetRGB,dlnetFlow)

    validationTime = tic;

    numOutputs = 3;
    mbq = createMiniBatchQueue(params.ValidationData,numOutputs,params);

    lossValidation = [];
    numClasses = numel(params.Classes);
    cmat = sparse(numClasses,numClasses);
    cmatRGB = sparse(numClasses,numClasses);
    cmatFlow = sparse(numClasses,numClasses);
    while hasdata(mbq)

        [dlX1,dlX2,dlY] = next(mbq);

        [loss,YTest,YPred,YPredRGB,YPredFlow] = predictValidation(dlnetRGB,dlnetFlow,dlX1,dlX2,dlY);

        lossValidation = [lossValidation,loss];
        cmat = aggregateConfusionMetric(cmat,YTest,YPred);
        cmatRGB = aggregateConfusionMetric(cmatRGB,YTest,YPredRGB);
        cmatFlow = aggregateConfusionMetric(cmatFlow,YTest,YPredFlow);
    end
    lossValidation = mean(lossValidation);
    accValidation = sum(diag(cmat))./sum(cmat,"all");
    accValidationRGB = sum(diag(cmatRGB))./sum(cmatRGB,"all");
    accValidationFlow = sum(diag(cmatFlow))./sum(cmatFlow,"all");

    validationTime = toc(validationTime);
end

predictValidation

The predictValidation function calculates the loss and prediction values using the provided dlnetwork objects for RGB and optical flow data.

function [loss,YTest,YPred,YPredRGB,YPredFlow] = predictValidation(dlnetRGB,dlnetFlow,dlRGB,dlFlow,Y)

    % Pass the video input through the two-stream
    % network.
    dlYPredRGB = predict(dlnetRGB,dlRGB);
    dlYPredFlow = predict(dlnetFlow,dlFlow);

    % Calculate the cross-entropy separately for the two-stream
    % outputs.
    rgbLoss = crossentropy(dlYPredRGB,Y);
    flowLoss = crossentropy(dlYPredFlow,Y);

    % Fuse the losses.
    loss = mean([rgbLoss,flowLoss]);

    % Fuse the predictions by calculating the average of the predictions.
    dlYPred = (dlYPredRGB + dlYPredFlow)/2;

    % Calculate the accuracy of the predictions.
    [~,YTest] = max(Y,[],1);
    [~,YPred] = max(dlYPred,[],1);

    [~,YPredRGB] = max(dlYPredRGB,[],1);
    [~,YPredFlow] = max(dlYPredFlow,[],1);
end

updateDlNetwork

The updateDlNetwork function updates the provided dlnetwork object with gradients and other parameters using the SGDM optimization function sgdmupdate.

function [dlnet,gradients,velocity,learnRate] = updateDlNetwork(dlnet,gradients,params,velocity,iteration)
    % Determine the learning rate using the cosine-annealing learning rate schedule.
    learnRate = cosineAnnealingLearnRate(iteration,params);

    % Apply L2 regularization to the weights.
    idx = dlnet.Learnables.Parameter == "Weights";
    gradients(idx,:) = dlupdate(@(g,w) g + params.L2Regularization*w,gradients(idx,:),dlnet.Learnables(idx,:));

    % Update the network parameters using the SGDM optimizer.
    [dlnet,velocity] = sgdmupdate(dlnet,gradients,velocity,learnRate,params.Momentum);
end

cosineAnnealingLearnRate

The cosineAnnealingLearnRate function computes the learning rate based on the current iteration number, minimum learning rate, maximum learning rate, and number of iterations for annealing [3].

function lr = cosineAnnealingLearnRate(iteration,params)
    if iteration == params.NumIterations
        lr = params.MinLearningRate;
        return;
    end
    cosineNumIter = [0, params.CosineNumIterations];
    csum = cumsum(cosineNumIter);
    block = find(csum >= iteration,1,'first');
    cosineIter = iteration - csum(block - 1);
    annealingIteration = mod(cosineIter,cosineNumIter(block));
    cosineIteration = cosineNumIter(block);
    minR = params.MinLearningRate;
    maxR = params.MaxLearningRate;
    cosMult = 1 + cos(pi * annealingIteration / cosineIteration);
    lr = minR + ((maxR - minR) * cosMult / 2);
end

aggregateConfusionMetric

The aggregateConfusionMetric function incrementally fills a confusion matrix based on the predicted results YPred and the expected results YTest.

function cmat = aggregateConfusionMetric(cmat,YTest,YPred)
    YTest = gather(extractdata(YTest));
    YPred = gather(extractdata(YPred));
    [m,n] = size(cmat);
    cmat = cmat + full(sparse(YTest,YPred,1,m,n));
end

createMiniBatchQueue

The createMiniBatchQueue function creates a minibatchqueue object that provides miniBatchSize amount of data from the given datastore. It also creates a DispatchInBackgroundDatastore if a parallel pool is open.

function mbq = createMiniBatchQueue(datastore,numOutputs,params)
    if params.DispatchInBackground && isempty(gcp("nocreate"))
        % Start a parallel pool, if DispatchInBackground is true, to dispatch
        % data in the background using the parallel pool.
        c = parcluster("local");
        c.NumWorkers = params.NumWorkers;
        parpool("local",params.NumWorkers);
    end
    p = gcp("nocreate");
    if ~isempty(p)
        datastore = DispatchInBackgroundDatastore(datastore,p.NumWorkers);
    end
    inputFormat(1:numOutputs-1) = "SSSCB";
    outputFormat = "CB";
    mbq = minibatchqueue(datastore,numOutputs, ...
        "MiniBatchSize",params.MiniBatchSize, ...
        "MiniBatchFcn",@batchRGBAndFlow, ...
        "MiniBatchFormat",[inputFormat,outputFormat]);
end

batchRGBAndFlow

The batchRGBAndFlow function batches the image, flow, and label data into corresponding dlarray values in the data formats "SSSCB", "SSSCB", and "CB", respectively.

function [dlX1,dlX2,dlY] = batchRGBAndFlow(images,flows,labels)
    % Batch dimension: 5
    X1 = cat(5,images{:});
    X2 = cat(5,flows{:});

    % Batch dimension: 2
    labels = cat(2,labels{:});

    % Feature dimension: 1
    Y = onehotencode(labels,1);

    % Cast data to single for processing.
    X1 = single(X1);
    X2 = single(X2);
    Y = single(Y);

    % Move data to the GPU if possible.
    if canUseGPU
        X1 = gpuArray(X1);
        X2 = gpuArray(X2);
        Y = gpuArray(Y);
    end

    % Return X and Y as dlarray objects.
    dlX1 = dlarray(X1,"SSSCB");
    dlX2 = dlarray(X2,"SSSCB");
    dlY = dlarray(Y,"CB");
end

shuffleTrainDs

The shuffleTrainDs function shuffles the files present in the training datastore dsTrain.

function shuffled = shuffleTrainDs(dsTrain)
    shuffled = copy(dsTrain);
    n = numel(shuffled.Files);
    shuffledIndices = randperm(n);
    shuffled.Files = shuffled.Files(shuffledIndices);
    reset(shuffled);
end

saveData

The saveData function saves the given dlnetwork objects and accuracy values to a MAT file.

function saveData(modelFilename,dlnetRGB,dlnetFlow,cmat,accValidation)
    dlnetRGB = gatherFromGPUToSave(dlnetRGB);
    dlnetFlow = gatherFromGPUToSave(dlnetFlow);
    data.ValidationAccuracy = accValidation;
    data.cmat = cmat;
    data.dlnetRGB = dlnetRGB;
    data.dlnetFlow = dlnetFlow;
    save(modelFilename,'data');
end

gatherFromGPUToSave

The gatherFromGPUToSave function gathers data from the GPU in order to save the model to disk.

function dlnet = gatherFromGPUToSave(dlnet)
    if ~canUseGPU
        return;
    end
    dlnet.Learnables = gatherValues(dlnet.Learnables);
    dlnet.State = gatherValues(dlnet.State);

    function tbl = gatherValues(tbl)
        for ii = 1:height(tbl)
            tbl.Value{ii} = gather(tbl.Value{ii});
        end
    end
end

checkForHMDB51Folder

The checkForHMDB51Folder function checks for the downloaded data in the download folder.

function classes = checkForHMDB51Folder(dataLoc)
    hmdbFolder = fullfile(dataLoc,"hmdb51_org");
    if ~exist(hmdbFolder,"dir")
        error("Download 'hmdb51_org.rar' file using the supporting function 'downloadHMDB51' before running the example and extract the RAR file.");
    end

    classes = ["brush_hair","cartwheel","catch","chew","clap","climb","climb_stairs", ...
        "dive","draw_sword","dribble","drink","eat","fall_floor","fencing", ...
        "flic_flac","golf","handstand","hit","hug","jump","kick","kick_ball", ...
        "kiss","laugh","pick","pour","pullup","punch","push","pushup","ride_bike", ...
        "ride_horse","run","shake_hands","shoot_ball","shoot_bow","shoot_gun", ...
        "sit","situp","smile","smoke","somersault","stand","swing_baseball","sword", ...
        "sword_exercise","talk","throw","turn","walk","wave"];
    expectFolders = fullfile(hmdbFolder,classes);
    if ~all(arrayfun(@(x)exist(x,'dir'),expectFolders))
        error("Download hmdb51_org.rar using the supporting function 'downloadHMDB51' before running the example and extract the RAR file.");
    end
end

downloadHMDB51

The downloadHMDB51 function downloads the data set and saves it to a directory.

function downloadHMDB51(dataLoc)

    if nargin == 0
        dataLoc = pwd;
    end
    dataLoc = string(dataLoc);

    if ~exist(dataLoc,"dir")
        mkdir(dataLoc);
    end

    dataUrl     = "http://serre-lab.clps.brown.edu/wp-content/uploads/2013/10/hmdb51_org.rar";
    options     = weboptions("Timeout",Inf);
    rarFileName = fullfile(dataLoc,"hmdb51_org.rar");
    fileExists  = exist(rarFileName,"file");

    % Download the RAR file and save it to the download folder.
    if ~fileExists
        disp("Downloading hmdb51_org.rar (2 GB) to the folder:")
        disp(dataLoc)
        disp("This download can take a few minutes...")
        websave(rarFileName,dataUrl,options);
        disp("Download complete.")
        disp("Extract the hmdb51_org.rar file contents to the folder: ")
        disp(dataLoc)
    end
end

initializeTrainingProgressPlot

The initializeTrainingProgressPlot function configures two plots for displaying the training loss, training accuracy, and validation accuracy.

function plotters = initializeTrainingProgressPlot(params)
if params.ProgressPlot
    % Plot the loss, training accuracy, and validation accuracy.
    figure

    % Loss plot
    subplot(2,1,1)
    plotters.LossPlotter = animatedline;
    xlabel("Iteration")
    ylabel("Loss")

    % Accuracy plot
    subplot(2,1,2)
    plotters.TrainAccPlotter = animatedline("Color","b");
    plotters.ValAccPlotter = animatedline("Color","g");
    legend("Training Accuracy","Validation Accuracy","Location","northwest");
    xlabel("Iteration")
    ylabel("Accuracy")
else
    plotters = [];
end
end

initializeVerboseOutput

The initializeVerboseOutput function displays the column headings for the table of training values, which shows the epoch, mini-batch accuracy, and other training values.

function initializeVerboseOutput(params)
if params.Verbose
    disp(" ")
    if canUseGPU
        disp("Training on GPU.")
    else
        disp("Training on CPU.")
    end
    p = gcp("nocreate");
    if ~isempty(p)
        disp("Training on parallel cluster '" + p.Cluster.Profile + "'.")
    end
    disp("NumIterations: " + string(params.NumIterations));
    disp("MiniBatchSize: " + string(params.MiniBatchSize));
    disp("Classes: " + join(string(params.Classes),","));
    disp("|=======================================================================================================================================================================|")
    disp("| Epoch | Iteration | Time Elapsed |    Mini-Batch Accuracy     |    Validation Accuracy     | Mini-Batch | Validation | Base Learning | Train Time | Validation Time |")
    disp("|       |           |  (hh:mm:ss)  |       (Avg:RGB:Flow)       |       (Avg:RGB:Flow)       |    Loss    |    Loss    |     Rate      | (hh:mm:ss) |   (hh:mm:ss)    |")
    disp("|=======================================================================================================================================================================|")
end
end

displayVerboseOutputEveryEpoch

The displayVerboseOutputEveryEpoch function displays the verbose output of the training values, such as the epoch, mini-batch accuracy, validation accuracy, and mini-batch loss.

function displayVerboseOutputEveryEpoch(params,start,learnRate,epoch,iteration,...
    accTrain,accTrainRGB,accTrainFlow,accValidation,accValidationRGB,accValidationFlow,lossTrain,lossValidation,trainTime,validationTime)
if params.Verbose
    % Convert elapsed seconds to hh:mm:ss durations.
    D = duration(0,0,toc(start),"Format","hh:mm:ss");
    trainTime = duration(0,0,trainTime,"Format","hh:mm:ss");
    validationTime = duration(0,0,validationTime,"Format","hh:mm:ss");

    % Format the validation loss and accuracies.
    lossValidation = gather(extractdata(lossValidation));
    lossValidation = compose("%.4f",lossValidation);

    accValidation = composePadAccuracy(accValidation);
    accValidationRGB = composePadAccuracy(accValidationRGB);
    accValidationFlow = composePadAccuracy(accValidationFlow);

    accVal = join([accValidation,accValidationRGB,accValidationFlow]," : ");

    % Format the training loss and accuracies.
    lossTrain = gather(extractdata(lossTrain));
    lossTrain = compose("%.4f",lossTrain);

    accTrain = composePadAccuracy(accTrain);
    accTrainRGB = composePadAccuracy(accTrainRGB);
    accTrainFlow = composePadAccuracy(accTrainFlow);

    accTrain = join([accTrain,accTrainRGB,accTrainFlow]," : ");
    learnRate = compose("%.13f",learnRate);

    % Print one table row, padding each cell to its column width.
    disp("| " + ...
        pad(string(epoch),5,"both") + " | " + ...
        pad(string(iteration),9,"both") + " | " + ...
        pad(string(D),12,"both") + " | " + ...
        pad(string(accTrain),26,"both") + " | " + ...
        pad(string(accVal),26,"both") + " | " + ...
        pad(string(lossTrain),10,"both") + " | " + ...
        pad(string(lossValidation),10,"both") + " | " + ...
        pad(string(learnRate),13,"both") + " | " + ...
        pad(string(trainTime),10,"both") + " | " + ...
        pad(string(validationTime),15,"both") + " |")
end
end

function acc = composePadAccuracy(acc)
% Format an accuracy in [0,1] as a percentage string of at least six characters.
acc = compose("%.2f",acc*100) + "%";
acc = pad(string(acc),6,"left");
end
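As a rough illustration of how one accuracy cell of the verbose table is assembled, the following sketch repeats the formatting steps of the composePadAccuracy helper on made-up numbers. The accuracy values and the variable names accText and cellText are assumptions for illustration only and do not come from a training run.

```matlab
% Hypothetical accuracies in [0,1] for the averaged, RGB, and flow streams.
acc = [0.8125; 0.9; 0.05];

% Format each value as a percentage with two decimal places.
accText = compose("%.2f",acc*100) + "%";

% Left-pad to six characters so that every entry has the same width.
accText = pad(accText,6,"left");

% Join the three entries and center the result in a 26-character cell.
cellText = pad(join(accText," : "),26,"both");
disp("| " + cellText + " |")
```

Three six-character entries plus two three-character separators occupy 24 characters, which is why a column width of 26 leaves one space of padding on each side.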

endVerboseOutputgydF4y2Ba

的gydF4y2BaendVerboseOutputgydF4y2Ba函数显示训练期间详细输出的结束。gydF4y2Ba

函数gydF4y2BaendVerboseOutput (params)gydF4y2Ba如果gydF4y2Ba参数个数。Verbo年代edisp (gydF4y2Ba"|=======================================================================================================================================================================|"gydF4y2Ba)gydF4y2Ba结束gydF4y2Ba结束gydF4y2Ba

updateProgressPlot

The updateProgressPlot function updates the progress plot with loss and accuracy information during training.

function updateProgressPlot(params,plotters,epoch,iteration,start,lossTrain,accuracyTrain,accuracyValidation)
if params.ProgressPlot
    % Update the training progress.
    D = duration(0,0,toc(start),"Format","hh:mm:ss");
    title(plotters.LossPlotter.Parent,"Epoch: " + epoch + ", Elapsed: " + string(D));
    addpoints(plotters.LossPlotter,iteration,double(gather(extractdata(lossTrain))));
    addpoints(plotters.TrainAccPlotter,iteration,accuracyTrain);
    addpoints(plotters.ValAccPlotter,iteration,accuracyValidation);
    drawnow
end
end
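The progress plot relies on the animatedline pattern: create an empty line once, then append one point per iteration. The following minimal sketch shows that pattern in isolation, assuming a synthetic, linearly decreasing loss. The names numIterations, syntheticLoss, and lossLine are hypothetical, and the hidden figure is only there so that the sketch can also run without a display.

```matlab
% Hypothetical stand-in values; a real run would supply the measured loss.
numIterations = 5;
syntheticLoss = linspace(2,0.5,numIterations);

figure("Visible","off")        % hidden figure, assumed here for headless runs
lossLine = animatedline;       % empty line that grows as points arrive
xlabel("Iteration")
ylabel("Loss")

for iteration = 1:numIterations
    % Append the newest loss value and refresh the plot.
    addpoints(lossLine,iteration,syntheticLoss(iteration));
    drawnow limitrate
end

% Read back the points that were plotted.
[x,y] = getpoints(lossLine);
```

Using drawnow limitrate instead of drawnow caps the refresh rate, which may help when the loop iterates faster than the figure can redraw.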

References

[1] Carreira, Joao, and Andrew Zisserman. "Quo Vadis, Action Recognition? A New Model and the Kinetics Dataset." Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR): 6299-6308. Honolulu, HI: IEEE, 2017.

[2] Simonyan, Karen, and Andrew Zisserman. "Two-Stream Convolutional Networks for Action Recognition in Videos." Advances in Neural Information Processing Systems 27. Montreal, Canada: NIPS, 2014.

[3] Loshchilov, Ilya, and Frank Hutter. "SGDR: Stochastic Gradient Descent with Warm Restarts." International Conference on Learning Representations 2017. Toulon, France: ICLR, 2017.