fit

Description

The fit function fits a configured naive Bayes classification model for incremental learning (incrementalClassificationNaiveBayes object) to streaming data. To additionally track performance metrics using the data as it arrives, use updateMetricsAndFit instead.

To fit or cross-validate a naive Bayes classification model to an entire batch of data at once, see fitcnb.

Mdl = fit(Mdl,X,Y) returns Mdl, a naive Bayes classification model for incremental learning that represents the input model Mdl trained using the predictor data X and the response data Y. Specifically, fit updates the conditional posterior distribution of the predictor variables given the data. To weight the observations, also specify the Weights name-value argument, for example, Mdl = fit(Mdl,X,Y,'Weights',W).
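As a minimal sketch of the call pattern, the following code fits a model to two chunks of synthetic data, the second time with observation weights. The data, the two-class setup, and the weight vector W are illustrative assumptions; only the function names and the Weights argument come from this page.

```matlab
rng(1)                                   % For reproducibility
n = 100;
Y = randi(2,n,1);                        % Synthetic labels: classes 1 and 2
X = randn(n,3) + Y;                      % Synthetic predictors, shifted by class
W = ones(n,1) + (Y == 1);                % Hypothetical weights: class 1 counts double

Mdl = incrementalClassificationNaiveBayes('MaxNumClasses',2);
Mdl = fit(Mdl,X(1:50,:),Y(1:50));                          % First chunk, default weights
Mdl = fit(Mdl,X(51:100,:),Y(51:100),'Weights',W(51:100));  % Second chunk, weighted
```

Each call overwrites Mdl, so the returned model always reflects every chunk processed so far.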

Examples

Incrementally Train Model with Little Prior Information

Fit an incremental naive Bayes learner when you know only the expected maximum number of classes in the data.

Create an incremental naive Bayes model. Specify that the maximum number of expected classes is 5.

Mdl = incrementalClassificationNaiveBayes('MaxNumClasses',5)

Mdl =
  incrementalClassificationNaiveBayes

                    IsWarm: 0
                   Metrics: [1x2 table]
                ClassNames: [1x0 double]
            ScoreTransform: 'none'
         DistributionNames: 'normal'
    DistributionParameters: {}

  Properties, Methods

Mdl is an incrementalClassificationNaiveBayes model. All its properties are read-only. Mdl can process at most 5 unique classes. By default, the prior class distribution Mdl.Prior is empirical, which means the software updates the prior distribution as it encounters labels.

Mdl must be fit to data before you can use it to perform any other operations.

Load the human activity data set. Randomly shuffle the data.

load humanactivity
n = numel(actid);
rng(1) % For reproducibility
idx = randsample(n,n);
X = feat(idx,:);
Y = actid(idx);

For details on the data set, enter Description at the command line.

Fit the incremental model to the training data, in chunks of 50 observations at a time, by using the fit function. At each iteration:

- Simulate a data stream by processing 50 observations.

- Overwrite the previous incremental model with a new one fitted to the incoming observations.

- Store the mean of the first predictor in the first class and the prior probability that the subject is moving (Y > 2) to see how these parameters evolve during incremental learning.

% Preallocation
numObsPerChunk = 50;
nchunk = floor(n/numObsPerChunk);
mu11 = zeros(nchunk,1);
priormoved = zeros(nchunk,1);

% Incremental fitting
for j = 1:nchunk
    ibegin = min(n,numObsPerChunk*(j-1) + 1);
    iend = min(n,numObsPerChunk*j);
    idx = ibegin:iend;
    Mdl = fit(Mdl,X(idx,:),Y(idx));
    mu11(j) = Mdl.DistributionParameters{1,1}(1);
    priormoved(j) = sum(Mdl.Prior(Mdl.ClassNames > 2));
end

Mdl is an incrementalClassificationNaiveBayes model object trained on all the data in the stream.

To see how the parameters evolve during incremental learning, plot them on separate tiles.

t = tiledlayout(2,1);
nexttile
plot(mu11)
ylabel('\mu_{11}')
xlabel('Iteration')
axis tight
nexttile
plot(priormoved)
ylabel('\pi(Subject Is Moving)')
xlabel(t,'Iteration')
axis tight

fit updates the posterior mean of the predictor distribution as it processes each chunk. Because the prior class distribution is empirical, $\pi$(subject is moving) changes as fit processes each chunk.
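To illustrate why an empirical prior moves from chunk to chunk, the following base MATLAB sketch tracks the running fraction of labels greater than 2. It assumes unit observation weights and synthetic labels, and it only mimics the idea of an empirical class distribution; it is not the internal implementation of fit.

```matlab
rng(1)                                   % For reproducibility
Y = randi(5,1000,1);                     % Synthetic labels 1 through 5
numObsPerChunk = 50;
nchunk = floor(numel(Y)/numObsPerChunk);
priormoved = zeros(nchunk,1);

nseen = 0;                               % Observations processed so far
nmoving = 0;                             % Processed observations with Y > 2
for j = 1:nchunk
    idx = (j-1)*numObsPerChunk + (1:numObsPerChunk);
    nseen = nseen + numel(idx);
    nmoving = nmoving + sum(Y(idx) > 2);
    priormoved(j) = nmoving/nseen;       % Running empirical probability of moving
end
```

After the last chunk, the running estimate equals the overall fraction of moving observations in the processed data.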

Specify All Class Names Before Fitting

Fit an incremental naive Bayes learner when you know all the class names in the data.

Consider training a device to predict whether a subject is sitting, standing, walking, running, or dancing based on biometric data measured on the subject. The class names map 1 through 5 to an activity. Also, suppose that the researchers plan to expose the device to each class uniformly.

Create an incremental naive Bayes model for multiclass learning. Specify the class names and the uniform prior class distribution.

classnames = 1:5; Mdl = incrementalClassificationNaiveBayes('ClassNames',classnames,'Prior','uniform')

Mdl =
  incrementalClassificationNaiveBayes

                    IsWarm: 0
                   Metrics: [1x2 table]
                ClassNames: [1 2 3 4 5]
            ScoreTransform: 'none'
         DistributionNames: 'normal'
    DistributionParameters: {5x0 cell}

  Properties, Methods

Mdl is an incrementalClassificationNaiveBayes model object. All its properties are read-only. During training, observed labels must be in Mdl.ClassNames.

Mdl must be fit to data before you can use it to perform any other operations.

Load the human activity data set. Randomly shuffle the data.

load humanactivity
n = numel(actid);
rng(1); % For reproducibility
idx = randsample(n,n);
X = feat(idx,:);
Y = actid(idx);

For details on the data set, enter Description at the command line.

Fit the incremental model to the training data by using the fit function. Simulate a data stream by processing chunks of 50 observations at a time. At each iteration:

- Process 50 observations.

- Overwrite the previous incremental model with a new one fitted to the incoming observations.

- Store the mean of the first predictor in the first class and the prior probability that the subject is moving (Y > 2) to see how these parameters evolve during incremental learning.

% Preallocation
numObsPerChunk = 50;
nchunk = floor(n/numObsPerChunk);
mu11 = zeros(nchunk,1);
priormoved = zeros(nchunk,1);

% Incremental fitting
for j = 1:nchunk
    ibegin = min(n,numObsPerChunk*(j-1) + 1);
    iend = min(n,numObsPerChunk*j);
    idx = ibegin:iend;
    Mdl = fit(Mdl,X(idx,:),Y(idx));
    mu11(j) = Mdl.DistributionParameters{1,1}(1);
    priormoved(j) = sum(Mdl.Prior(Mdl.ClassNames > 2));
end

Mdl is an incrementalClassificationNaiveBayes model object trained on all the data in the stream.

To see how the parameters evolve during incremental learning, plot them on separate tiles.

t = tiledlayout(2,1);
nexttile
plot(mu11)
ylabel('\mu_{11}')
xlabel('Iteration')
axis tight
nexttile
plot(priormoved)
ylabel('\pi(Subject Is Moving)')
xlabel(t,'Iteration')
axis tight

fit updates the posterior mean of the predictor distribution as it processes each chunk. Because the prior class distribution is specified as uniform, $\pi$(subject is moving) = 0.6 and does not change as fit processes each chunk.
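The value 0.6 follows directly from the uniform prior over five classes, three of which (3, 4, and 5) correspond to a moving subject. A short arithmetic check in base MATLAB, using a hypothetical prior vector rather than the Prior property of a fitted model:

```matlab
classnames = 1:5;
prior = ones(1,numel(classnames))/numel(classnames);  % Uniform prior: 0.2 per class
priormoved = sum(prior(classnames > 2));              % Classes 3, 4, and 5
```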

Specify Observation Weights

Train a naive Bayes classification model by using fitcnb, convert it to an incremental learner, track its performance on streaming data, and then fit the model to the data. Specify observation weights.

Load and Preprocess Data

Load the human activity data set. Randomly shuffle the data.

load humanactivity
rng(1); % For reproducibility
n = numel(actid);
idx = randsample(n,n);
X = feat(idx,:);
Y = actid(idx);

For details on the data set, enter Description at the command line.

Suppose that the data from a stationary subject (Y <= 2) has double the quality of the data from a moving subject. Create a weight variable that assigns a weight of 2 to observations from a stationary subject and 1 to a moving subject.

W = ones(n,1) + (Y <=2);
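The expression works because MATLAB promotes the logical result of the comparison to double before adding. A small check on hypothetical labels, one per activity (Ytoy and Wtoy are illustrative names that do not appear in the example):

```matlab
Ytoy = [1; 2; 3; 4; 5];            % Hypothetical labels, one per activity
Wtoy = ones(5,1) + (Ytoy <= 2);    % Stationary classes (1 and 2) get weight 2
```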

Train Naive Bayes Classification Model

Fit a naive Bayes classification model to a random sample of half the data.

idxtt = randsample([true false],n,true);
TTMdl = fitcnb(X(idxtt,:),Y(idxtt),'Weights',W(idxtt))

TTMdl =
  ClassificationNaiveBayes
              ResponseName: 'Y'
     CategoricalPredictors: []
                ClassNames: [1 2 3 4 5]
            ScoreTransform: 'none'
           NumObservations: 12053
         DistributionNames: {1x60 cell}
    DistributionParameters: {5x60 cell}

  Properties, Methods

TTMdl is a ClassificationNaiveBayes model object representing a traditionally trained naive Bayes classification model.

Convert Trained Model

Convert the traditionally trained model to a naive Bayes classification model for incremental learning.

IncrementalMdl = incrementalLearner(TTMdl)

IncrementalMdl =
  incrementalClassificationNaiveBayes

                    IsWarm: 1
                   Metrics: [1x2 table]
                ClassNames: [1 2 3 4 5]
            ScoreTransform: 'none'
         DistributionNames: {1x60 cell}
    DistributionParameters: {5x60 cell}

  Properties, Methods

IncrementalMdl is an incrementalClassificationNaiveBayes model. Because class names are specified in IncrementalMdl.ClassNames, labels encountered during incremental learning must be in IncrementalMdl.ClassNames.

Separately Track Performance Metrics and Fit Model

Perform incremental learning on the rest of the data by using the updateMetrics and fit functions. At each iteration:

- Simulate a data stream by processing 50 observations at a time.

- Call updateMetrics to update the cumulative and window minimal cost of the model given the incoming chunk of observations. Overwrite the previous incremental model to update the losses in the Metrics property. Note that the function does not fit the model to the chunk of data; the chunk is "new" data for the model. Specify the observation weights.

- Store the minimal cost.

- Call fit to fit the model to the incoming chunk of observations. Overwrite the previous incremental model to update the model parameters. Specify the observation weights.

% Preallocation
idxil = ~idxtt;
nil = sum(idxil);
numObsPerChunk = 50;
nchunk = floor(nil/numObsPerChunk);
mc = array2table(zeros(nchunk,2),'VariableNames',["Cumulative" "Window"]);
Xil = X(idxil,:);
Yil = Y(idxil);
Wil = W(idxil);

% Incremental fitting
for j = 1:nchunk
    ibegin = min(nil,numObsPerChunk*(j-1) + 1);
    iend = min(nil,numObsPerChunk*j);
    idx = ibegin:iend;
    IncrementalMdl = updateMetrics(IncrementalMdl,Xil(idx,:),Yil(idx),...
        'Weights',Wil(idx));
    mc{j,:} = IncrementalMdl.Metrics{"MinimalCost",:};
    IncrementalMdl = fit(IncrementalMdl,Xil(idx,:),Yil(idx),'Weights',Wil(idx));
end

IncrementalMdl is an incrementalClassificationNaiveBayes model object trained on all the data in the stream.

Alternatively, you can use updateMetricsAndFit to update performance metrics of the model given a new chunk of data, and then fit the model to the data.
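A minimal sketch of the combined call, assuming synthetic two-class data and hypothetical weights instead of the human activity data set. The loop replaces the separate updateMetrics and fit calls with a single updateMetricsAndFit call per chunk.

```matlab
rng(1)                                   % For reproducibility
n = 500;
Ysyn = randi(2,n,1);                     % Synthetic labels: classes 1 and 2
Xsyn = randn(n,3) + Ysyn;                % Synthetic predictors, shifted by class
Wsyn = ones(n,1) + (Ysyn == 1);          % Hypothetical weights: class 1 counts double

SynMdl = incrementalClassificationNaiveBayes('MaxNumClasses',2);
numObsPerChunk = 50;
nchunk = floor(n/numObsPerChunk);
for j = 1:nchunk
    idx = (j-1)*numObsPerChunk + (1:numObsPerChunk);
    % Update the metrics on the chunk first, and then fit the model to it
    SynMdl = updateMetricsAndFit(SynMdl,Xsyn(idx,:),Ysyn(idx),'Weights',Wsyn(idx));
end
```

The separate calls remain useful when fitting should depend on the updated metrics, as in conditional training.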

Plot a trace plot of the performance metrics.

h = plot(mc.Variables);
xlim([0 nchunk])
ylabel('Minimal Cost')
legend(h,mc.Properties.VariableNames)
xlabel('Iteration')

The cumulative loss gradually stabilizes, whereas the window loss jumps throughout the training.

Perform Conditional Training

Incrementally train a naive Bayes classification model only when its performance degrades.

Load the human activity data set. Randomly shuffle the data.

load humanactivity
n = numel(actid);
rng(1) % For reproducibility
idx = randsample(n,n);
X = feat(idx,:);
Y = actid(idx);

For details on the data set, enter Description at the command line.

Configure a naive Bayes classification model for incremental learning so that the maximum number of expected classes is 5, the tracked performance metric includes the misclassification error rate, and the metrics window size is 1000. Fit the configured model to the first 1000 observations.

Mdl = incrementalClassificationNaiveBayes('MaxNumClasses',5,'MetricsWindowSize',1000,...
    'Metrics','classiferror');
initobs = 1000;
Mdl = fit(Mdl,X(1:initobs,:),Y(1:initobs));

Mdl is an incrementalClassificationNaiveBayes model object.

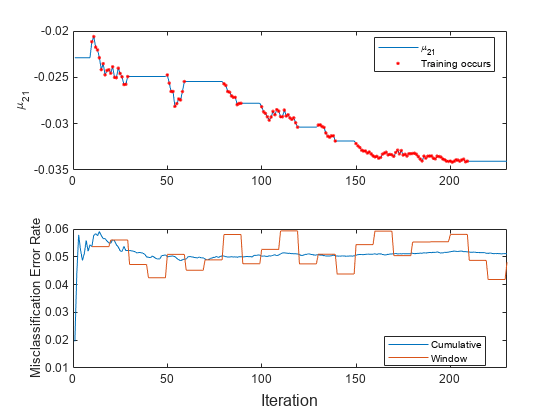

Perform incremental learning, with conditional fitting, by following this procedure for each iteration:

- Simulate a data stream by processing a chunk of 100 observations at a time.

- Update the model performance on the incoming chunk of data.

- Fit the model to the chunk of data only when the misclassification error rate is greater than 0.05.

- When tracking performance and fitting, overwrite the previous incremental model.

- Store the misclassification error rate and the mean of the first predictor in the second class to see how they evolve during training.

- Track when fit trains the model.

% Preallocation
numObsPerChunk = 100;
nchunk = floor((n - initobs)/numObsPerChunk);
mu21 = zeros(nchunk,1);
ce = array2table(nan(nchunk,2),'VariableNames',["Cumulative" "Window"]);
trained = false(nchunk,1);

% Incremental fitting
for j = 1:nchunk
    ibegin = min(n,numObsPerChunk*(j-1) + 1 + initobs);
    iend = min(n,numObsPerChunk*j + initobs);
    idx = ibegin:iend;
    Mdl = updateMetrics(Mdl,X(idx,:),Y(idx));
    ce{j,:} = Mdl.Metrics{"ClassificationError",:};
    if ce{j,2} > 0.05
        Mdl = fit(Mdl,X(idx,:),Y(idx));
        trained(j) = true;
    end
    mu21(j) = Mdl.DistributionParameters{2,1}(1);
end

Mdl is an incrementalClassificationNaiveBayes model object trained on all the data in the stream.

To see how the model performance and $\mu_{21}$ evolve during training, plot them on separate tiles.

t = tiledlayout(2,1);
nexttile
plot(mu21)
hold on
plot(find(trained),mu21(trained),'r.')
xlim([0 nchunk])
ylabel('\mu_{21}')
legend('\mu_{21}','Training occurs','Location','best')
hold off
nexttile
plot(ce.Variables)
xlim([0 nchunk])
ylabel('Misclassification Error Rate')
legend(ce.Properties.VariableNames,'Location','best')
xlabel(t,'Iteration')

The trace plot of $\mu_{21}$ shows periods of constant values, during which the loss within the previous observation window is at most 0.05.

Input Arguments

Mdl — Naive Bayes classification model for incremental learning

incrementalClassificationNaiveBayes model object

Naive Bayes classification model for incremental learning to fit to streaming data, specified as an incrementalClassificationNaiveBayes model object. You can create Mdl directly or by converting a supported, traditionally trained machine learning model using the incrementalLearner function. For more details, see the corresponding reference page.

X — Chunk of predictor data

floating-point matrix

Chunk of predictor data to which the model is fit, specified as an n-by-Mdl.NumPredictors floating-point matrix.

The length of the observation labels Y and the number of observations in X must be equal; Y(j) is the label of observation j (row) in X.

Note

If Mdl.NumPredictors = 0, fit infers the number of predictors from X, and sets the corresponding property of the output model. Otherwise, if the number of predictor variables in the streaming data changes from Mdl.NumPredictors, fit issues an error.

Data Types: single | double

Y — Chunk of labels

categorical array | character array | string array | logical vector | floating-point vector | cell array of character vectors

Chunk of labels to which the model is fit, specified as a categorical, character, or string array, logical or floating-point vector, or cell array of character vectors.

The length of the observation labels Y and the number of observations in X must be equal; Y(j) is the label of observation j (row) in X.

fit issues an error when one or both of these conditions are met:

- Y contains a new label and the maximum number of classes has already been reached (see the MaxNumClasses and ClassNames arguments of incrementalClassificationNaiveBayes).

- The ClassNames property of the input model Mdl is nonempty, and the data types of Y and Mdl.ClassNames are different.

Data Types: char | string | cell | categorical | logical | single | double

Weights — Chunk of observation weights

floating-point vector of positive values

Chunk of observation weights, specified as a floating-point vector of positive values. fit weighs the observations in X with the corresponding values in Weights. The size of Weights must equal n, the number of observations in X.

By default, Weights is ones(n,1).

For more details, including normalization schemes, see Observation Weights.

Data Types: double | single

Note

If an observation (predictor or label) or weight contains at least one missing (NaN) value, fit ignores the observation. Consequently, fit uses fewer than n observations to create an updated model, where n is the number of observations in X.
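To estimate how many observations in a chunk fit actually uses, you can count the rows without missing values beforehand. The following base MATLAB sketch assumes numeric labels and hypothetical data; it mimics the documented behavior and is not the function's internal code.

```matlab
X = [1 2; NaN 3; 4 5; 6 7];   % Row 2 has a missing predictor value
Y = [1; 2; NaN; 2];           % Row 3 has a missing label
W = [1; 1; 1; NaN];           % Row 4 has a missing weight

% Keep an observation only if its predictors, label, and weight are all present
keep = ~(any(isnan(X),2) | isnan(Y) | isnan(W));
numUsed = sum(keep);          % Number of complete observations in the chunk
```

For nonnumeric labels, a function such as ismissing would be needed in place of isnan.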

Output Arguments

Mdl — Updated naive Bayes classification model for incremental learning

incrementalClassificationNaiveBayes model object

Updated naive Bayes classification model for incremental learning, returned as an incremental learning model object of the same data type as the input model Mdl, an incrementalClassificationNaiveBayes object.

In addition to updating distribution model parameters, fit performs the following actions when Y contains expected, but unprocessed, classes:

- If you do not specify all expected classes by using the ClassNames name-value argument when you create the input model Mdl using incrementalClassificationNaiveBayes, fit:

  - Appends any new labels in Y to the tail of Mdl.ClassNames.

  - Expands Mdl.Cost to a c-by-c matrix, where c is the number of classes in Mdl.ClassNames. The resulting misclassification cost matrix is balanced.

  - Expands Mdl.Prior to a length c vector of an updated empirical class distribution.

- If you specify all expected classes when you create the input model Mdl or convert a traditionally trained naive Bayes model using incrementalLearner, but you do not specify a misclassification cost matrix (Mdl.Cost), fit sets misclassification costs of processed classes to 1 and unprocessed classes to NaN. For example, if fit processes the first two classes of a possible three classes, Mdl.Cost is [0 1 NaN; 1 0 NaN; 1 1 0].
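One way to reproduce the example cost matrix in base MATLAB is to start from a balanced matrix and mark the entries that pair a processed class with an unprocessed one. The indexing pattern below is inferred from the single three-class example and is an assumption, not a statement of how fit builds the matrix in general.

```matlab
numClasses = 3;
processed = [true true false];              % Classes 1 and 2 have been processed
Cost = ones(numClasses) - eye(numClasses);  % Balanced costs: 0 on the diagonal, 1 elsewhere
Cost(processed,~processed) = NaN;           % Rows of processed classes, column of the unprocessed class
```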

More About

Bag-of-Tokens Model

In the bag-of-tokens model, the value of predictor j is the nonnegative number of occurrences of token j in the observation. The number of categories (bins) in the multinomial model is the number of distinct tokens (number of predictors).
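As an illustration, the following base MATLAB sketch builds a bag-of-tokens predictor matrix from two tokenized observations. The vocabulary and the observations are hypothetical.

```matlab
vocab = ["spam" "offer" "meeting"];               % Hypothetical tokens (one predictor each)
docs = {["offer" "spam" "offer"], "meeting"};     % Hypothetical tokenized observations

X = zeros(numel(docs),numel(vocab));
for i = 1:numel(docs)
    for j = 1:numel(vocab)
        X(i,j) = sum(docs{i} == vocab(j));        % Occurrences of token j in observation i
    end
end
```

Each row of X is one observation, and each column counts one token, so X has as many predictors as there are distinct tokens.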

Tips

Unlike traditional training, incremental learning might not have a separate test (holdout) set. Therefore, to treat each incoming chunk of data as a test set, pass the incremental model and each incoming chunk to updateMetrics before training the model on the same data.

Algorithms

Normal Distribution Estimators

If predictor variable j has a conditional normal distribution (see the DistributionNames property), the software fits the distribution to the data by computing the class-specific weighted mean and the biased (maximum likelihood) estimate of the weighted standard deviation. For each class k:

- The weighted mean of predictor j is

$$\bar{x}_{j|k} = \frac{\sum_{\{i:y_i=k\}} w_i x_{ij}}{\sum_{\{i:y_i=k\}} w_i},$$

  where $w_i$ is the weight for observation i. The software normalizes weights within a class such that they sum to the prior probability for that class.

- The biased (maximum likelihood) estimator of the weighted standard deviation of predictor j is

$$s_{j|k} = \left[\frac{\sum_{\{i:y_i=k\}} w_i \left(x_{ij} - \bar{x}_{j|k}\right)^2}{\sum_{\{i:y_i=k\}} w_i}\right]^{1/2}.$$
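The two estimates can be sketched in base MATLAB for the observations of one class. The sketch assumes the biased (maximum likelihood) form, in which the weighted sum of squared deviations is divided by the sum of the weights; the values in x and w are hypothetical.

```matlab
x = [2; 4; 4; 7];         % Hypothetical values of predictor j for observations in class k
w = [1; 2; 1; 0.5];       % Hypothetical observation weights

wmean = sum(w.*x)/sum(w);                     % Weighted mean
wstd = sqrt(sum(w.*(x - wmean).^2)/sum(w));   % Biased (maximum likelihood) weighted standard deviation
```

Because both estimates divide by the sum of the weights, rescaling w by a constant (for example, normalizing it to sum to the class prior probability) leaves them unchanged.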

Estimated Probability for Multinomial Distribution

If all predictor variables compose a conditional multinomial distribution (see the DistributionNames property), the software fits the distribution using the Bag-of-Tokens Model. The software stores the probability that token j appears in class k in the property DistributionParameters{k,j}. With additive smoothing [1], the estimated probability is

$$P(\text{token } j \mid \text{class } k) = \frac{1 + c_{j|k}}{P + c_k},$$

where P in the denominator is the number of distinct tokens (number of predictors) and:

- $c_{j|k} = n_k \dfrac{\sum_{\{i:y_i=k\}} x_{ij} w_i}{\sum_{\{i:y_i=k\}} w_i}$, which is the weighted number of occurrences of token j in class k.

- $n_k$ is the number of observations in class k.

- $w_i$ is the weight for observation i. The software normalizes weights within a class so that they sum to the prior probability for that class.

- $c_k = \sum_{j=1}^{P} c_{j|k}$, which is the total weighted number of occurrences of all tokens in class k.
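A base MATLAB sketch of add-one (additive) smoothing for one class follows. It assumes the estimate takes the form (1 + weighted count of token j) / (number of tokens + total weighted count), with weighted counts scaled by the number of observations in the class; the count matrix Xk and the weights w are hypothetical.

```matlab
Xk = [2 0 1; 1 1 0; 0 3 1];   % Hypothetical token counts: 3 observations in class k, 3 tokens
w = [1; 2; 1];                % Hypothetical observation weights
[nk,P] = size(Xk);            % Number of observations in class k and number of tokens

cjk = nk*(w'*Xk)/sum(w);      % Weighted number of occurrences of each token in class k
ck = sum(cjk);                % Total weighted number of occurrences of all tokens
prob = (1 + cjk)/(P + ck);    % Smoothed probability of each token in class k
```

The smoothing keeps every probability strictly positive, even for a token that never occurs in the class, and the probabilities still sum to 1.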

Estimated Probability for Multivariate Multinomial Distribution

If predictor variable j has a conditional multivariate multinomial distribution (see the DistributionNames property), the software follows this procedure:

- The software collects a list of the unique levels, stores the sorted list in CategoricalLevels, and considers each level a bin. Each combination of predictor and class is a separate, independent multinomial random variable.

- For each class k, the software counts instances of each categorical level using the list stored in CategoricalLevels{j}.

- The software stores the probability that predictor j in class k has level L in the property DistributionParameters{k,j}, for all levels in CategoricalLevels{j}. With additive smoothing [1], the estimated probability is

$$P(\text{predictor } j = L \mid \text{class } k) = \frac{1 + m_{j|k}(L)}{m_j + m_k},$$

  where:

  - $m_{j|k}(L) = n_k \dfrac{\sum_{\{i:y_i=k\}} I\{x_{ij} = L\} w_i}{\sum_{\{i:y_i=k\}} w_i}$, which is the weighted number of observations for which predictor j equals L in class k.

  - $n_k$ is the number of observations in class k.

  - $I\{x_{ij} = L\} = 1$ if $x_{ij} = L$, and 0 otherwise.

  - $w_i$ is the weight for observation i. The software normalizes weights within a class so that they sum to the prior probability for that class.

  - $m_j$ is the number of distinct levels in predictor j.

  - $m_k$ is the weighted number of observations in class k.
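The same add-one smoothing can be sketched in base MATLAB for one categorical predictor in one class. The sketch assumes the estimate takes the form (1 + weighted count of level L) / (number of levels + weighted number of observations in the class), and it collects the levels from the class itself for brevity; the data and weights are hypothetical.

```matlab
xk = ["a"; "b"; "a"; "c"];    % Hypothetical levels of predictor j for observations in class k
w = [1; 1; 2; 1];             % Hypothetical observation weights
levels = unique(xk);          % Sorted list of distinct levels
nk = numel(xk);               % Number of observations in class k
mj = numel(levels);           % Number of distinct levels in predictor j

mjk = zeros(mj,1);
for l = 1:mj
    mjk(l) = nk*sum(w(xk == levels(l)))/sum(w);   % Weighted count of level l in class k
end
mk = sum(mjk);                % Weighted number of observations in class k
prob = (1 + mjk)/(mj + mk);   % Smoothed probability of each level in class k
```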

Observation Weights

For each conditional predictor distribution, fit computes the weighted average and standard deviation.

If the prior class probability distribution is known (in other words, the prior distribution is not empirical), fit normalizes observation weights to sum to the prior class probabilities in the respective classes. This action implies that the default observation weights are the respective prior class probabilities.

If the prior class probability distribution is empirical, the software normalizes the specified observation weights to sum to 1 each time you call fit.
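Both normalization schemes can be sketched in base MATLAB for a single chunk. The labels, weights, and prior below are hypothetical, and the code illustrates the stated rules rather than the internal implementation.

```matlab
Y = [1; 1; 2; 2; 2];          % Hypothetical labels
W = [2; 2; 1; 1; 1];          % Hypothetical observation weights
prior = [0.5 0.5];            % Known (nonempirical) prior for classes 1 and 2

% Known prior: weights within each class sum to the prior probability of that class
Wknown = zeros(size(W));
for k = 1:numel(prior)
    inClass = (Y == k);
    Wknown(inClass) = W(inClass)*prior(k)/sum(W(inClass));
end

% Empirical prior: weights sum to 1 over the whole chunk
Wempirical = W/sum(W);
```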

References

[1] Manning, Christopher D., Prabhakar Raghavan, and Hinrich Schütze. Introduction to Information Retrieval. NY: Cambridge University Press, 2008.

Version History

Introduced in R2021a

R2021b: Naive Bayes incremental fitting functions compute biased (maximum likelihood) standard deviations for conditionally normal predictor variables

Starting in R2021b, naive Bayes incremental fitting functions fit and updateMetricsAndFit compute biased (maximum likelihood) estimates of the weighted standard deviations for conditionally normal predictor variables during training. In other words, for each class k, incremental fitting functions normalize the sum of square weighted deviations of the conditionally normal predictor $x_j$ by the sum of the weights in class k. Before R2021b, naive Bayes incremental fitting functions computed the unbiased standard deviation, like fitcnb. The currently returned weighted standard deviation estimates differ from those computed before R2021b by a factor that approaches 1 as the sample size increases.
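To see the convergence numerically, the following base MATLAB sketch compares the biased and unbiased standard deviations in the equally weighted special case, where their ratio is sqrt((n-1)/n). The general weighted factor is not reproduced here, and the samples are synthetic.

```matlab
rng(1)                              % For reproducibility
sampleSizes = [5 50 500 5000];
ratio = zeros(size(sampleSizes));
for s = 1:numel(sampleSizes)
    x = randn(sampleSizes(s),1);    % Synthetic, equally weighted sample
    ratio(s) = std(x,1)/std(x,0);   % Biased (divide by n) over unbiased (divide by n-1)
end
```

The ratio increases toward 1 with the sample size, so the two estimates differ noticeably only for small classes.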
