Pythagorean Addition

How do you compute the hypotenuse of a right triangle without squaring the lengths of the sides and without taking any square roots?

有限公司ntents

Some Important Operations

These are all important operations.

- 有限公司mpute the 2-norm of a vector, $||v||_2$.

- Find complex magnitude, $|x + iy|$.

- 有限公司nvert from cartestesian to polar coordinates, $x + iy = r e^{i \theta}$

- 有限公司mpute an arctangent, $\theta = \arctan{y/x}$

- Find a plane rotation that zeros one component of a two-vector.

- Find an orthogonal reflection that zeros $n-1$ components of an $n$-vector.

All of them involve computing

$$ \sqrt{x^2 + y^2} $$

in some way or another.

Pythagorean Addition

Let's introduce the notation $\oplus$ for what we callPythagorean addition.

$$ x \oplus y = \sqrt{x^2 + y^2} $$

This has some of the properties of ordinary addition, at least on the nonnegative real numbers.

You can use Pythagorean addition repeatedly to compute the 2-norm of a vector $v$ with components $v_1, v_2, \ldots, v_n$.

$$||v||_2 = v_1 \oplus v_2 \oplus \ldots \oplus v_n$$

It is easy to see how Pythagorean addition is involved in the other operations listed above.

Underflow and Overflow

有限公司mputationally, it is essential to avoid unnecessary overflow and underflow of floating point numbers. IEEE double precision has the following range. Any values outside this range are too small or too large to be represented.

formatcompactformatshorterange = [eps*realmin realmax]

range = 4.9407e-324 1.7977e+308

This crude attempt to implement Pythagorean addition is not satisfactory because the intermediate results underflow or overflow.

bad_pythag = @(x,y) sqrt(x^2 + y^2)

bad_pythag = @(x,y)sqrt(x^2+y^2)

Ifxandyare so small that their squares underflow, thenbad_pythag(x,y)will be zero even though the true result can be represented.

x = 3e-200 y = 4e-200 z = 5e-200% should be the resultz = bad_pythag(x,y)

x = 3.0000e-200 y = 4.0000e-200 z = 5.0000e-200 z = 0

Ifxandyare so large that their squares overflow, thenbad_pythag(x,y)will be infinity even though the true result can be represented.

x = 3e200 y = 4e200 z = 5e200% should be the resultz = bad_pythag(x,y)

x = 3.0000e+200 y = 4.0000e+200 z = 5.0000e+200 z = Inf

Don Morrison

Don Morrison was a mathematician and computer pioneer who spent most of his career at Sandia National Laboratory in Albuquerque. He left Sandia in the late '60s, founded the Computer Science Department at the University of New Mexico, and recruited me to join the university a few years later.

Don had all kinds of fascinating mathematical interests. He was an expert on cryptography. He developed fair voting systems for multi-candidate elections. He designed an on-demand public transportation system for the city of Albuquerque. He constructed a kind of harmonica that played computer punched cards. He discovered the Fast Fourier Transform before Culley and Tuckey, and published the algorithm in the proceedings of a regional ACM conference.

This is the first of two or three blogs that I intend to write about things I learned from Don.

Don's Diagram

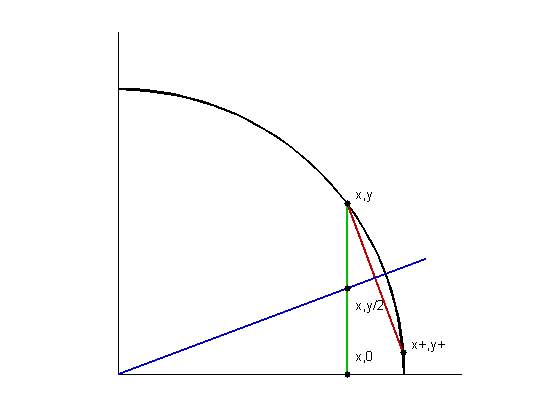

Don was sitting in on a class I was teaching on mathematical software. I was talking about the importance of avoiding underflow and overflow while computing the 2-norm of a vector. (It was particularly important back then because the IBM mainframes of the day had especially limited floating point exponent range.) We tried to do the computation with just one pass over the data, to avoid repeated access to main memory. This involved messy dynamic rescaling. It was also relatively expensive to compute square roots. Before the end of the class Don had sketched something like the following.

pythag_pic(4,3)

我们在(x, y)美元,美元|y| \le |x|$. We want to find the radius of the black circle without squaring $x$ or $y$ and without computing any square roots. The green line leads from point $(x,y)$ to its projection $(x,0)$ on the $x$-axis. The blue line extends from the origin through the midpoint of the green line. The red line is perpendicular to the blue line. The red line intersects the circle in the point $(x+,y+)$. Don realized that $x+$ and $y+$ could be computed from $x$ and $y$ with a few safe rational operations, and that $y+$ would be much smaller than $y$, so that $x+$ would be a much better approximation to the radius than $x$. The process could then be repeated a few times to get an excellent approximation to the desired result.

Function Pythag

Here, in today's MATLAB, is the algorithm. It turns out that the iteration is cubically convergent, so at most three iterations produces double precision accuracy. It is not worth the trouble to check for convergence in fewer than three iterations.

typepythag

function x = pythag(a,b) % PYTHAG Pythagorean addition % pythag(a,b) = sqrt(a^2+b^2) without unnecessary % underflow or overflow and without any square roots. if a==0 && b==0 x = 0; else % Start with abs(x) >= abs(y) x = max(abs(a),abs(b)); y = min(abs(a),abs(b)); % Iterate three times for k = 1:3 r = (y/x)^2; s = r/(4+r); x = x + 2*s*x; y = s*y; end end end

有限公司mputingr = (y/x)^2is safe because the square will not overflow and, if it underflows, it is negligible. There are only half a dozen other floating point operations per iteration and they are all safe.

It is not obvious, but the quantity $x \oplus y$ is a loop invariant.

Surprisingly, this algorithm cannot be used to compute square roots.

Examples

Starting with $x = y$ is the slowest to converge.

formatlongeformatcompactpythag_with_disp(1,1) sqrt(2)

1 1 1.400000000000000e+00 2.000000000000000e-01 1.414213197969543e+00 1.015228426395939e-03 1.414213562373095e+00 1.307981162604408e-10 ans = 1.414213562373095e+00 ans = 1.414213562373095e+00

It's fun to compute Pythagorean triples, which are pairs of integers whose Pythagorean sum is another integer.

pythag_with_disp(4e-300,3e-300)

4.000000000000000e-300 3.000000000000000e-300 4.986301369863013e-300 3.698630136986302e-301 4.999999974188252e-300 5.080526329415360e-304 5.000000000000000e-300 1.311372652398298e-312 ans = 5.000000000000000e-300

pythag_with_disp(12e300,5e300)

1.200000000000000e+301 5.000000000000000e+300 1.299833610648919e+301 2.079866888519135e+299 1.299999999999319e+301 1.331199999999652e+295 1.300000000000000e+301 3.489660928000008e+282 ans = 1.300000000000000e+301

Augustin Dubrulle

Augustin Dubrulle is a French-born numerical analyst who was working for IBM in Houston in the 1970s on SSP, their Scientific Subroutine Package. He is the only person I know of who ever improved on an algorithm of J. H. Wilkinson. Wilkinson and Christian Reinsch had published, inNumerische Mathematik, two versions of the symmetric tridiagonal QR algorithm for matrix eigenvalues. The explicit shift version required fewer operations, but the implicit shift version had better numerical properties. Dubrulle published a half-page paper inNumerische Mathematikthat said, in effect, "make the following change to the inner loop of the algorithm of Wilkinson and Reinsch" to combine the superior properties of both versions. This is the algorithm we use today in MATLAB to compute eigenvalues of symmetric matrices.

Augustin came to New Mexico to get his Ph. D. and became interested in thepythagalgorithm. He analyzed its convergence properties, showed its connection to Halley's method for computing square roots, and produced higher order generalizations. The three of us published two papers back to back,Moler and Morrison, andDubrulle, in theIBM Journal of Research and Developmentin 1983.

Pythag Today?

What has become ofpythag?其功能在生活hypot, which is part oflibm, the fundamental math library support for C. There is ahypotfunction in MATLAB.

On Intel chips, and on chips that use Intel libraries, we rely upon the Intel Math Kernel Library to computehypot. Modern Intel architectures have two features that we did not have in the old days. First, square root is an acceptably fast machine instruction, so this implementation would be OK.

typeok_hypot

function r = ok_hypot(x,y) if x==0 && y==0 r = 0; elseif abs(x) >= abs(y) r = abs(x)*sqrt(1+abs(y/x)^2); else r = abs(y)*sqrt(1+abs(x/y)^2); end end

But even that kind of precaution isn't necessary today because of the other relevant feature of the Intel architecture, the extended floating point registers. These registers are accessible only to library developers working in machine language. They provide increased precision and, more important in this situation, increased exponent range. So, if you start with standard double precision numbers and do the entire computation in the extended registers, you can get away withbad_pythag. In this case, clever hardware obviates the need for clever software.

- Category:

- Algorithms

Cleve’s Corner: Cleve Moler on Mathematics and Computing

Cleve’s Corner: Cleve Moler on Mathematics and Computing The MATLAB Blog

The MATLAB Blog Steve on Image Processing with MATLAB

Steve on Image Processing with MATLAB Guy on Simulink

Guy on Simulink Deep Learning

Deep Learning Developer Zone

Developer Zone Stuart’s MATLAB Videos

Stuart’s MATLAB Videos Behind the Headlines

Behind the Headlines File Exchange Pick of the Week

File Exchange Pick of the Week Hans on IoT

Hans on IoT Student Lounge

Student Lounge MATLAB Community

MATLAB Community MATLAB ユーザーコミュニティー

MATLAB ユーザーコミュニティー Startups, Accelerators, & Entrepreneurs

Startups, Accelerators, & Entrepreneurs

有限公司mments

To leave a comment, please clickhereto sign in to your MathWorks Account or create a new one.