Customize Output During Deep Learning Network Training

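This example shows how to define an output function that runs at each iteration during training of deep learning neural networks. If you specify output functions by using the 'OutputFcn' name-value pair argument of trainingOptions, then trainNetwork calls these functions once before the start of training, after each training iteration, and once after training has finished. Each time the output functions are called, trainNetwork passes a structure containing information such as the current iteration number, loss, and accuracy. You can use output functions to display or plot progress information, or to stop training. To stop training early, make your output function return true. If any output function returns true, then training finishes and trainNetwork returns the latest network.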
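As a minimal sketch of the calling convention described above, the following snippet defines an output function as an anonymous function and calls it by hand. The loss threshold and the hand-built info structures are assumptions made for illustration only; during real training, trainNetwork builds the structure, and some of its fields can be empty on a given call, which is why the sketch checks isempty first.

```matlab
% Hypothetical threshold, chosen only for illustration.
lossThreshold = 0.05;

% Output function: return true (stop) once the mini-batch loss is small enough.
% The isempty check guards against calls where the field has no value.
stopAtLowLoss = @(info) ~isempty(info.TrainingLoss) && info.TrainingLoss < lossThreshold;

% Hand-built stand-ins for the structure that trainNetwork passes.
infoStart = struct('State',"start",'Iteration',0,'TrainingLoss',[]);
infoEarly = struct('State',"iteration",'Iteration',1,'TrainingLoss',2.7155);
infoLate  = struct('State',"iteration",'Iteration',372,'TrainingLoss',0.0209);

% Only the last call asks for training to stop.
stopFlags = [stopAtLowLoss(infoStart), stopAtLowLoss(infoEarly), stopAtLowLoss(infoLate)];
disp(stopFlags)
```

A handle like stopAtLowLoss could be passed as the 'OutputFcn' value of trainingOptions in the same way as the function handle used later in this example.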

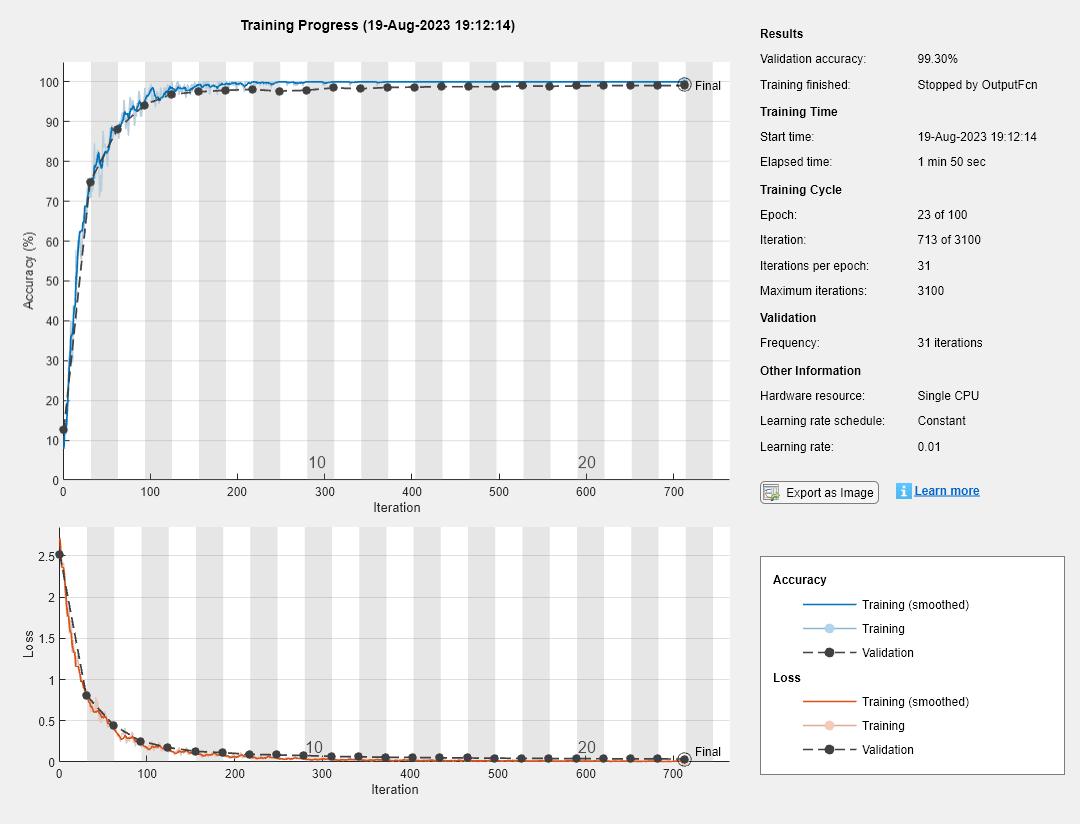

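To stop training when the loss on the validation set stops decreasing, simply specify validation data and a validation patience by using the 'ValidationData' and 'ValidationPatience' name-value pair arguments of trainingOptions, respectively. The validation patience is the number of times that the loss on the validation set can be larger than or equal to the previously smallest loss before network training stops. You can add additional stopping criteria by using output functions. This example shows how to create an output function that stops training when the classification accuracy on the validation data stops improving. The output function is defined at the end of the script.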
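The validation patience rule can be illustrated with a short simulation. This is a sketch of the rule as worded above, not the toolbox's internal implementation, and the validation loss values and the patience of 3 are hypothetical.

```matlab
% Hypothetical validation losses, one per validation pass.
valLoss = [0.90 0.60 0.45 0.47 0.44 0.44 0.46 0.45 0.43];
patience = 3;   % hypothetical 'ValidationPatience' value

bestLoss = inf;   % smallest validation loss seen so far
lag = 0;          % validations since the loss last improved
stoppedAt = [];   % index of the validation pass that triggers the stop

for k = 1:numel(valLoss)
    if valLoss(k) < bestLoss
        bestLoss = valLoss(k);
        lag = 0;
    else
        % A loss larger than or equal to the best one counts against the patience.
        lag = lag + 1;
    end
    if lag >= patience
        stoppedAt = k;
        break
    end
end

fprintf('Stopped at validation %d, best loss %.2f\n', stoppedAt, bestLoss);
```

With these values, the loss ties its best value once and then fails to improve twice more, so the simulated training stops at the eighth validation and never sees the final, lower loss of 0.43.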

Load the training data, which contains 5000 images of digits. Set aside 1000 of the images for network validation.

    [XTrain,YTrain] = digitTrain4DArrayData;

    idx = randperm(size(XTrain,4),1000);
    XValidation = XTrain(:,:,:,idx);
    XTrain(:,:,:,idx) = [];
    YValidation = YTrain(idx);
    YTrain(idx) = [];

Construct a network to classify the digit image data.

    layers = [
        imageInputLayer([28 28 1])

        convolution2dLayer(3,8,'Padding','same')
        batchNormalizationLayer
        reluLayer

        maxPooling2dLayer(2,'Stride',2)

        convolution2dLayer(3,16,'Padding','same')
        batchNormalizationLayer
        reluLayer

        maxPooling2dLayer(2,'Stride',2)

        convolution2dLayer(3,32,'Padding','same')
        batchNormalizationLayer
        reluLayer

        fullyConnectedLayer(10)
        softmaxLayer
        classificationLayer];

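Specify options for network training. To validate the network at regular intervals during training, specify validation data. Choose the 'ValidationFrequency' value so that the network is validated once per epoch.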

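To stop training when the classification accuracy on the validation set stops improving, specify stopIfAccuracyNotImproving as an output function. The second input argument of stopIfAccuracyNotImproving is the number of times that the accuracy on the validation set can be smaller than or equal to the previously highest accuracy before network training stops. Choose any large value for the maximum number of epochs to train. Training should not reach the final epoch because training stops automatically.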

    miniBatchSize = 128;
    validationFrequency = floor(numel(YTrain)/miniBatchSize);
    options = trainingOptions('sgdm', ...
        'InitialLearnRate',0.01, ...
        'MaxEpochs',100, ...
        'MiniBatchSize',miniBatchSize, ...
        'VerboseFrequency',validationFrequency, ...
        'ValidationData',{XValidation,YValidation}, ...
        'ValidationFrequency',validationFrequency, ...
        'Plots','training-progress', ...
        'OutputFcn',@(info)stopIfAccuracyNotImproving(info,3));
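The validation frequency follows from the data split and the mini-batch size. The short calculation below is a sketch that uses only the numbers stated in this example (5000 images, 1000 held out, mini-batches of 128). The variable names numImages, numValidation, numTrain, and validationIterations are introduced here for illustration.

```matlab
numImages = 5000;        % images in the digit data set, per the text above
numValidation = 1000;    % images set aside for validation
miniBatchSize = 128;

% Images left for training after the validation split.
numTrain = numImages - numValidation;

% Whole mini-batches per epoch, used as the validation frequency.
validationFrequency = floor(numTrain/miniBatchSize);

% Iterations at which the first 19 periodic validations fall.
validationIterations = validationFrequency*(1:19);

disp(validationFrequency)
disp(validationIterations(end))
```

With 4000 training images, one epoch is 31 iterations, so validating every 31 iterations validates the network once per epoch.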

Train the network. Training stops when the validation accuracy stops increasing.

net = trainNetwork(XTrain,YTrain,layers,options);

    Training on single CPU.
    Initializing input data normalization.
    |======================================================================================================================|
    |  Epoch  |  Iteration  |  Time Elapsed  |  Mini-batch  |  Validation  |  Mini-batch  |  Validation  |  Base Learning  |
    |         |             |   (hh:mm:ss)   |   Accuracy   |   Accuracy   |     Loss     |     Loss     |      Rate       |
    |======================================================================================================================|
    |       1 |           1 |       00:00:03 |        7.81% |       12.70% |       2.7155 |       2.5169 |          0.0100 |
    |       1 |          31 |       00:00:07 |       71.88% |       74.90% |       0.8799 |       0.8117 |          0.0100 |
    |       2 |          62 |       00:00:10 |       86.72% |       87.90% |       0.3859 |       0.4432 |          0.0100 |
    |       3 |          93 |       00:00:13 |       95.31% |       94.10% |       0.2207 |       0.2535 |          0.0100 |
    |       4 |         124 |       00:00:16 |       96.09% |       96.60% |       0.1444 |       0.1755 |          0.0100 |
    |       5 |         155 |       00:00:19 |       99.22% |       97.50% |       0.0989 |       0.1297 |          0.0100 |
    |       6 |         186 |       00:00:22 |       99.22% |       98.00% |       0.0784 |       0.1134 |          0.0100 |
    |       7 |         217 |       00:00:26 |      100.00% |       98.30% |       0.0541 |       0.0925 |          0.0100 |
    |       8 |         248 |       00:00:29 |      100.00% |       97.90% |       0.0422 |       0.0850 |          0.0100 |
    |       9 |         279 |       00:00:33 |      100.00% |       98.10% |       0.0335 |       0.0776 |          0.0100 |
    |      10 |         310 |       00:00:36 |      100.00% |       98.50% |       0.0270 |       0.0676 |          0.0100 |
    |      11 |         341 |       00:00:39 |      100.00% |       98.40% |       0.0233 |       0.0615 |          0.0100 |
    |      12 |         372 |       00:00:45 |      100.00% |       98.60% |       0.0209 |       0.0564 |          0.0100 |
    |      13 |         403 |       00:00:49 |      100.00% |       98.80% |       0.0185 |       0.0529 |          0.0100 |
    |      14 |         434 |       00:00:52 |      100.00% |       98.80% |       0.0162 |       0.0505 |          0.0100 |
    |      15 |         465 |       00:00:55 |      100.00% |       98.90% |       0.0143 |       0.0482 |          0.0100 |
    |      16 |         496 |       00:00:59 |      100.00% |       99.00% |       0.0125 |       0.0456 |          0.0100 |
    |      17 |         527 |       00:01:05 |      100.00% |       99.00% |       0.0111 |       0.0435 |          0.0100 |
    |      18 |         558 |       00:01:09 |      100.00% |       98.90% |       0.0100 |       0.0415 |          0.0100 |
    |      19 |         589 |       00:01:12 |      100.00% |       99.00% |       0.0092 |       0.0398 |          0.0100 |
    |======================================================================================================================|
    Training finished: Stopped by OutputFcn.
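The stopping point in this log can be checked by replaying the logged validation accuracies through the stopping rule with N = 3. The snippet below is a standalone sketch of that rule written as a plain loop. It does not call trainNetwork or the output function itself, and stopIteration is a name introduced here for illustration.

```matlab
% Validation iterations and accuracies (percent) copied from the training log.
valIterations = [1, 31*(1:19)];
valAccuracy = [12.70 74.90 87.90 94.10 96.60 97.50 98.00 98.30 97.90 98.10 ...
               98.50 98.40 98.60 98.80 98.80 98.90 99.00 99.00 98.90 99.00];

N = 3;                 % allowed validations without improvement
bestValAccuracy = 0;   % highest validation accuracy so far
valLag = 0;            % validations since the last improvement
stopIteration = [];    % iteration at which the rule asks to stop

for k = 1:numel(valAccuracy)
    if valAccuracy(k) > bestValAccuracy
        bestValAccuracy = valAccuracy(k);
        valLag = 0;
    else
        % Equal or lower accuracy counts as no improvement.
        valLag = valLag + 1;
    end
    if valLag >= N
        stopIteration = valIterations(k);
        break
    end
end

fprintf('Stop requested at iteration %d (best accuracy %.2f%%)\n', ...
    stopIteration, bestValAccuracy);
```

The best accuracy of 99.00% is first reached at iteration 496. The next three validations (99.00%, 98.90%, 99.00%) do not exceed it, so the rule requests a stop at iteration 589, which matches the last row of the log.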

Define Output Function

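Define the output function stopIfAccuracyNotImproving(info,N), which stops network training if the best classification accuracy on the validation data does not improve for N network validations in a row. This criterion is similar to the built-in stopping criterion that uses the validation loss, except that it applies to the classification accuracy instead of the loss.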

    function stop = stopIfAccuracyNotImproving(info,N)

    stop = false;

    % Keep track of the best validation accuracy and the number of validations for which
    % there has not been an improvement of the accuracy.
    persistent bestValAccuracy
    persistent valLag

    % Clear the variables when training starts.
    if info.State == "start"
        bestValAccuracy = 0;
        valLag = 0;

    elseif ~isempty(info.ValidationLoss)

        % Compare the current validation accuracy to the best accuracy so far,
        % and either set the best accuracy to the current accuracy, or increase
        % the number of validations for which there has not been an improvement.
        if info.ValidationAccuracy > bestValAccuracy
            valLag = 0;
            bestValAccuracy = info.ValidationAccuracy;
        else
            valLag = valLag + 1;
        end

        % If the validation lag is at least N, that is, the validation accuracy
        % has not improved for at least N validations, then return true and
        % stop training.
        if valLag >= N
            stop = true;
        end

    end

    end