Create Simple Sequence Classification Network Using Deep Network Designer

This example shows how to create a simple long short-term memory (LSTM) classification network using Deep Network Designer.

To train a deep neural network to classify sequence data, you can use an LSTM network. An LSTM network is a type of recurrent neural network (RNN) that learns long-term dependencies between time steps of sequence data.

The example demonstrates how to:

Load sequence data.

Construct the network architecture.

Specify training options.

Train the network.

Predict the labels of new data and calculate the classification accuracy.

Load Data

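Load the Japanese Vowels data set, as described in [1] and [2]. The predictors are cell arrays containing sequences of varying length with a feature dimension of 12. The labels are categorical vectors of labels 1,2,...,9.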

[XTrain,YTrain] = japaneseVowelsTrainData; [XValidation,YValidation] = japaneseVowelsTestData;

View the sizes of the first few training sequences. The sequences are matrices with 12 rows (one row for each feature) and a varying number of columns (one column for each time step).

XTrain(1:5)

ans=5×1 cell array
    {12×20 double}
    {12×26 double}
    {12×22 double}
    {12×20 double}
    {12×21 double}
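To get a fuller picture than the first five sequences give, you can summarize the whole training set. The following is a minimal sketch that assumes the same `japaneseVowelsTrainData` function used above; the variable names `numObservations`, `numFeatures`, and `sequenceLengths` are introduced here for illustration only.

```matlab
% Reload the training data so that this snippet runs on its own.
[XTrain,YTrain] = japaneseVowelsTrainData;

% Number of sequences and number of features (rows) per sequence.
numObservations = numel(XTrain);
numFeatures = size(XTrain{1},1);

% Length (number of time steps, that is, columns) of every sequence.
sequenceLengths = cellfun(@(X) size(X,2),XTrain);

fprintf("%d sequences, %d features, lengths from %d to %d time steps\n", ...
    numObservations,numFeatures,min(sequenceLengths),max(sequenceLengths));

% Number of observations for each class label.
summary(YTrain)
```

The range of sequence lengths is worth knowing because sequences in each mini-batch are padded to a common length during training.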

Define Network Architecture

Open Deep Network Designer.

deepNetworkDesigner

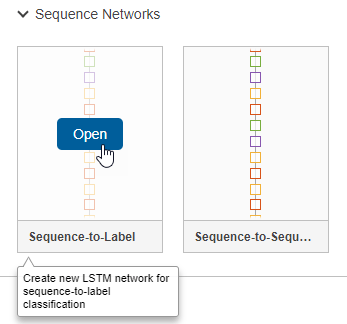

Pause on Sequence-to-Label and click Open. This opens a prebuilt network suitable for sequence classification problems.

Deep Network Designer displays the prebuilt network.

You can easily adapt this sequence network for the Japanese Vowels data set.

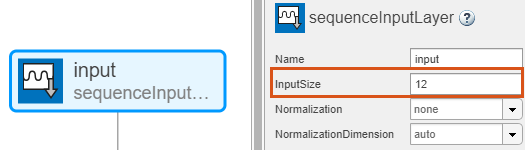

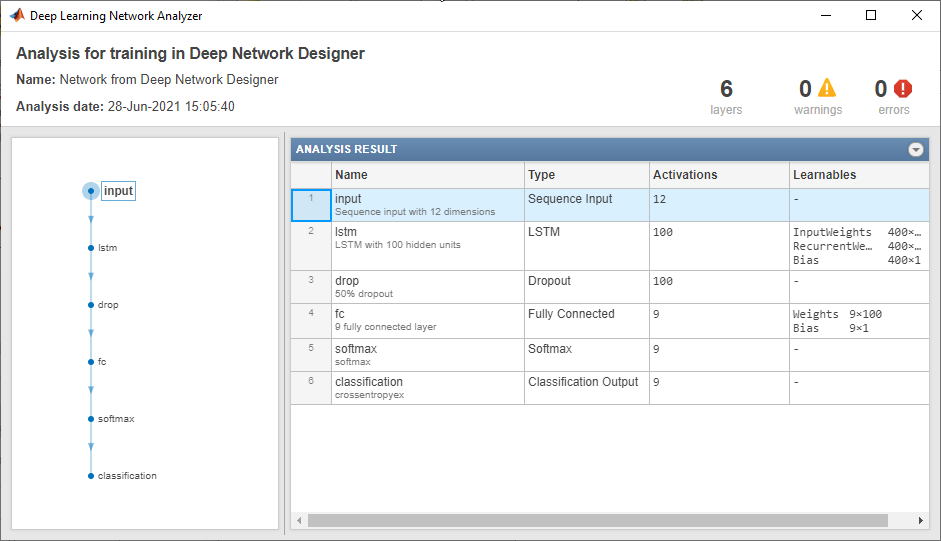

Select sequenceInputLayer and check that InputSize is set to 12 to match the feature dimension.

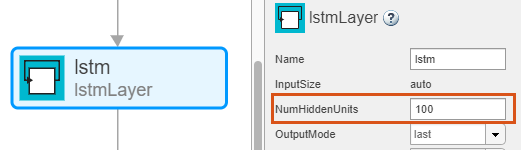

Select lstmLayer and set NumHiddenUnits to 100.

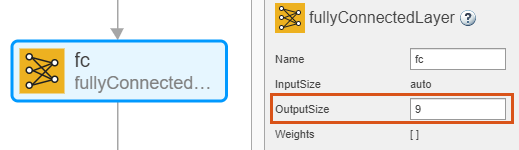

Select fullyConnectedLayer and check that OutputSize is set to 9, the number of classes.

Check Network Architecture

To check the network and examine more details of the layers, click Analyze.

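Export Network Architecture

To export the network architecture to the workspace, on the Designer tab, click Export. Deep Network Designer saves the network as the variable layers_1.

You can also generate code to construct the network architecture by selecting Export > Generate Code.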

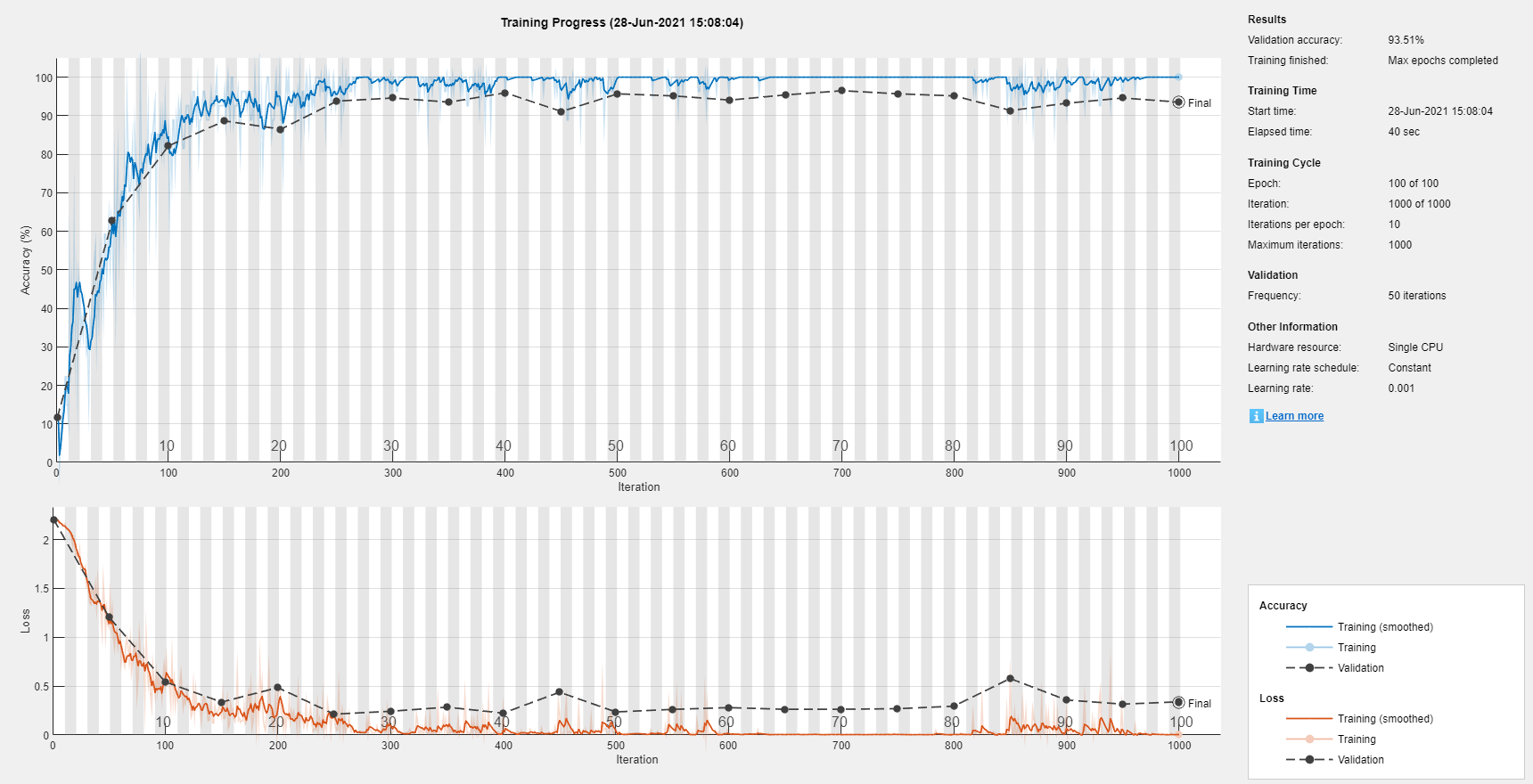
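If you prefer to build the architecture at the command line, a layer array along the following lines should be roughly equivalent to the network configured above. This is a sketch, not the code that Deep Network Designer generates: the exact layers in the prebuilt Sequence-to-Label template may differ, and the `'OutputMode','last'` setting and the variable names are assumptions made here so that the network outputs one label per sequence.

```matlab
% Sizes taken from the steps above.
numFeatures = 12;       % InputSize of the sequence input layer
numHiddenUnits = 100;   % NumHiddenUnits of the LSTM layer
numClasses = 9;         % OutputSize of the fully connected layer

% Assumed layer stack for sequence-to-label classification.
layers = [
    sequenceInputLayer(numFeatures)
    lstmLayer(numHiddenUnits,'OutputMode','last')  % keep only the final time step
    fullyConnectedLayer(numClasses)
    softmaxLayer
    classificationLayer];
```

You can pass `layers` to `analyzeNetwork` to inspect it in the same way as the Analyze button in the app.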

Train Network

Specify the training options and train the network.

Because the mini-batches are small with short sequences, the CPU is better suited for training. Set 'ExecutionEnvironment' to 'cpu'. To train on a GPU, if available, set 'ExecutionEnvironment' to 'auto' (the default value).

miniBatchSize = 27;
options = trainingOptions('adam', ...
    'ExecutionEnvironment','cpu', ...
    'MaxEpochs',100, ...
    'MiniBatchSize',miniBatchSize, ...
    'ValidationData',{XValidation,YValidation}, ...
    'GradientThreshold',2, ...
    'Shuffle','every-epoch', ...
    'Verbose',false, ...
    'Plots','training-progress');

Train the network.

net = trainNetwork(XTrain,YTrain,layers_1,options);

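You can also train this network using Deep Network Designer and datastore objects. For an example showing how to train a sequence-to-sequence regression network in Deep Network Designer, see Train Network for Time Series Forecasting Using Deep Network Designer.

Test Network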

Classify the test data and calculate the classification accuracy. Specify the same mini-batch size as for training.

YPred = classify(net,XValidation,'MiniBatchSize',miniBatchSize); acc = mean(YPred == YValidation)

acc = 0.9405
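A single accuracy figure hides which classes are confused with each other. As a sketch, assuming `YValidation` and `YPred` are still in the workspace from the previous steps, you can plot a confusion chart; the variable name `cm` is introduced here for illustration.

```matlab
% Assumes YValidation (true labels) and YPred (predicted labels) already exist.
figure
cm = confusionchart(YValidation,YPred);

% Show the per-class fraction of correct and incorrect predictions for each true class.
cm.RowSummary = 'row-normalized';
cm.Title = 'Japanese Vowels Validation Results';
```

Off-diagonal cells show which speakers the network mistakes for one another, which can guide changes to the architecture or training options.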

For next steps, you can try improving the accuracy by using bidirectional LSTM (BiLSTM) layers or by creating a deeper network. For more information, see Long Short-Term Memory Networks.

For an example showing how to use convolutional networks to classify sequence data, see Speech Command Recognition Using Deep Learning.
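The BiLSTM suggestion can be tried with a one-layer change. The following is a minimal sketch at the command line, assuming the same sizes as in this example; `layersBiLSTM` and `netBiLSTM` are hypothetical names, and whether the change actually improves accuracy on this data set is not established here.

```matlab
numFeatures = 12;
numHiddenUnits = 100;
numClasses = 9;

% Same structure as an LSTM classifier, with a bidirectional LSTM layer
% that processes each sequence forward and backward.
layersBiLSTM = [
    sequenceInputLayer(numFeatures)
    bilstmLayer(numHiddenUnits,'OutputMode','last')
    fullyConnectedLayer(numClasses)
    softmaxLayer
    classificationLayer];

% To train, reuse the data and options from the steps above
% (assumes XTrain, YTrain, and options exist in the workspace):
% netBiLSTM = trainNetwork(XTrain,YTrain,layersBiLSTM,options);
```

In Deep Network Designer, the equivalent change is to replace the lstmLayer block with a bilstmLayer block and reconnect the layers.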

References

[1] Kudo, Mineichi, Jun Toyama, and Masaru Shimbo. “Multidimensional Curve Classification Using Passing-through Regions.” Pattern Recognition Letters 20, no. 11–13 (November 1999): 1103–11. https://doi.org/10.1016/S0167-8655(99)00077-X.

[2] Kudo, Mineichi, Jun Toyama, and Masaru Shimbo. Japanese Vowels Data Set. Distributed by UCI Machine Learning Repository. https://archive.ics.uci.edu/ml/datasets/Japanese+Vowels