Dimensionality Reduction and Feature Extraction

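Feature transformation techniques reduce the dimensionality of data by transforming the data into new features. Feature selection techniques are preferable when transformation of variables is not possible, for example, when the data contains categorical variables. For a feature selection technique that is specifically suited to least-squares fitting, see Stepwise Regression.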

Functions

Objects

Topics

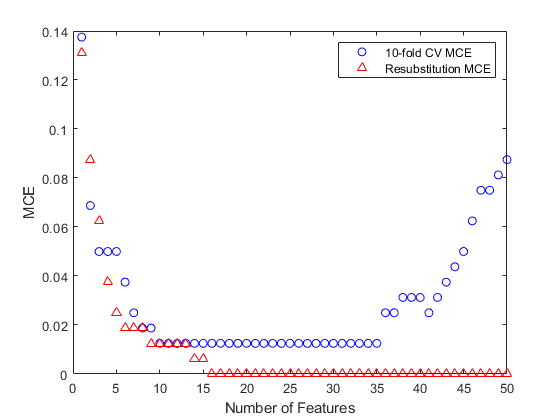

Feature Selection

Introduction to Feature Selection

Learn about feature selection algorithms and explore the functions available for feature selection.

This topic introduces sequential feature selection and provides an example that selects features sequentially using a custom criterion and the sequentialfs function.
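As a rough illustration of the greedy idea behind sequential forward selection, the Python sketch below (standard library only) starts from an empty set and repeatedly adds the feature that most lowers a user-supplied criterion, stopping when no remaining feature helps. It is not the MATLAB sequentialfs function or its API. The names `sequential_forward_selection` and `toy_criterion` are hypothetical, and in practice the criterion would usually be something like a cross-validated prediction error rather than the made-up scoring used here.

```python
from typing import Callable, List, Sequence


def sequential_forward_selection(
    n_features: int,
    criterion: Callable[[Sequence[int]], float],
) -> List[int]:
    """Greedy forward selection over feature indices 0..n_features-1.

    `criterion` scores a candidate subset (lower is better). Features are
    added one at a time until no candidate lowers the best score so far.
    """
    selected: List[int] = []
    best_score = float("inf")
    while len(selected) < n_features:
        candidates = [f for f in range(n_features) if f not in selected]
        # Score each subset formed by adding exactly one more feature.
        scores = {f: criterion(selected + [f]) for f in candidates}
        f_best = min(candidates, key=lambda f: scores[f])
        if scores[f_best] >= best_score:
            break  # no remaining feature improves the criterion
        selected.append(f_best)
        best_score = scores[f_best]
    return selected


def toy_criterion(subset: Sequence[int]) -> float:
    """Hypothetical criterion: features 0 and 2 reduce the 'error',
    and every selected feature adds a small complexity penalty."""
    useful = {0: 3.0, 2: 5.0}
    return 10.0 - sum(useful.get(f, 0.0) for f in subset) + 0.5 * len(subset)


print(sequential_forward_selection(4, toy_criterion))  # [2, 0]
```

Feature 2 is chosen first because it lowers the toy score the most. Feature 0 is added next. After that, adding either remaining feature only increases the penalty, so the search stops.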

Neighborhood Component Analysis (NCA) Feature Selection

Neighborhood component analysis (NCA) is a non-parametric method for selecting features with the goal of maximizing prediction accuracy of regression and classification algorithms.

- Robust Feature Selection Using NCA for Regression

- Tune Regularization Parameter to Detect Features Using NCA for Classification

Regularize Discriminant Analysis Classifier

Make a more robust and simpler model by removing predictors without compromising the predictive power of the model.

Select Predictors for Random Forests

Select split predictors for random forests using the interaction test algorithm.

Feature Extraction

Feature extraction is a set of methods to extract high-level features from data.

This example shows a complete workflow for feature extraction from image data.

This example shows how to use rica to disentangle mixed audio signals.

t-SNE Multidimensional Visualization

t-SNE is a method for visualizing high-dimensional data by nonlinear reduction to two or three dimensions, while preserving some features of the original data.

Visualize High-Dimensional Data Using t-SNE

This example shows how t-SNE creates a useful low-dimensional embedding of high-dimensional data.

This example shows the effects of various tsne settings.

Output function description and example for t-SNE.

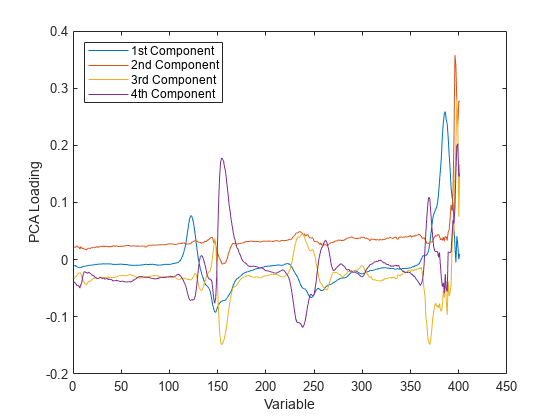

PCA and Canonical Correlation

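Principal component analysis reduces the dimensionality of data by replacing several correlated variables with a new set of variables that are linear combinations of the original variables.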
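To make "linear combinations of the original variables" concrete, here is a minimal Python sketch (standard library only) that finds only the first principal component. It centers the data, forms the sample covariance matrix, and applies power iteration to get the dominant eigenvector.

This is an illustrative stand-in, not how the toolbox's pca function is implemented. It ignores weighting and assumes the all-ones starting vector is not orthogonal to the leading eigenvector. The function name `first_principal_component` is hypothetical.

```python
import math


def first_principal_component(data, n_iter=200):
    """Return (mean, unit direction, scores) for the first principal component.

    data: list of observations (rows), each a list of variable values.
    Uses power iteration on the sample covariance matrix.
    """
    n, p = len(data), len(data[0])
    mean = [sum(row[j] for row in data) / n for j in range(p)]
    centered = [[row[j] - mean[j] for j in range(p)] for row in data]
    # Sample covariance matrix (p x p).
    cov = [[sum(r[a] * r[b] for r in centered) / (n - 1) for b in range(p)]
           for a in range(p)]
    v = [1.0] * p  # assumed not orthogonal to the leading eigenvector
    for _ in range(n_iter):
        w = [sum(cov[a][b] * v[b] for b in range(p)) for a in range(p)]
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0.0:
            raise ValueError("start vector lies in the null space of the covariance")
        v = [x / norm for x in w]
    # Scores: the new variable, a linear combination of the centered originals.
    scores = [sum(r[j] * v[j] for j in range(p)) for r in centered]
    return mean, v, scores


data = [[0.0, 0.0], [1.0, 2.0], [2.0, 4.0], [3.0, 6.0]]
mean, v, scores = first_principal_component(data)
print(v)  # approximately [0.447, 0.894], i.e. (1, 2) / sqrt(5)
```

Because the two variables in this toy data are perfectly correlated, the single component captures all of their variance.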

Analyze Quality of Life in U.S. Cities Using PCA

Perform a weighted principal components analysis and interpret the results.

Factor Analysis

Factor analysis is a way to fit a model to multivariate data to estimate the interdependence of measured variables on a smaller number of unobserved (latent) factors.

Analyze Stock Prices Using Factor Analysis

Use factor analysis to investigate whether companies within the same sector experience similar week-to-week changes in stock prices.

Perform Factor Analysis on Exam Grades

This example shows how to perform factor analysis using Statistics and Machine Learning Toolbox™.

Nonnegative Matrix Factorization

Nonnegative Matrix Factorization

Nonnegative matrix factorization (NMF) is a dimension-reduction technique based on a low-rank approximation of the feature space.

Perform Nonnegative Matrix Factorization

Perform nonnegative matrix factorization using the multiplicative and alternating least-squares algorithms.
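The multiplicative algorithm can be sketched in a few lines. The Python code below (standard library only, with matrices stored as lists of lists) applies the well-known Lee and Seung multiplicative update rules for the squared Frobenius error. Each update multiplies by a ratio of nonnegative quantities, so W and H stay nonnegative.

This is an illustration, not the toolbox's nnmf implementation. The function names, the fixed iteration count, and the small `eps` guard against division by zero are all choices made here.

```python
import random


def matmul(a, b):
    return [[sum(a[i][t] * b[t][j] for t in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def transpose(a):
    return [list(col) for col in zip(*a)]


def nmf_multiplicative(a, k, n_iter=500, seed=0, eps=1e-12):
    """Approximate a nonnegative n x m matrix A by W H, where W (n x k)
    and H (k x m) are nonnegative, using multiplicative updates."""
    rng = random.Random(seed)
    n, m = len(a), len(a[0])
    w = [[rng.random() for _ in range(k)] for _ in range(n)]
    h = [[rng.random() for _ in range(m)] for _ in range(k)]
    for _ in range(n_iter):
        # H <- H .* (W'A) ./ (W'W H)
        wt = transpose(w)
        num, den = matmul(wt, a), matmul(matmul(wt, w), h)
        h = [[h[i][j] * num[i][j] / (den[i][j] + eps) for j in range(m)]
             for i in range(k)]
        # W <- W .* (A H') ./ (W H H')
        ht = transpose(h)
        num, den = matmul(a, ht), matmul(w, matmul(h, ht))
        w = [[w[i][j] * num[i][j] / (den[i][j] + eps) for j in range(k)]
             for i in range(n)]
    return w, h


def frobenius_error(a, w, h):
    wh = matmul(w, h)
    return sum((a[i][j] - wh[i][j]) ** 2
               for i in range(len(a)) for j in range(len(a[0]))) ** 0.5


a = [[1.0, 1.0, 2.0], [2.0, 2.0, 4.0], [3.0, 3.0, 6.0]]  # rank 1, nonnegative
w, h = nmf_multiplicative(a, k=1)
print(frobenius_error(a, w, h) < 1e-6)  # True
```

The alternating least-squares algorithm has the same overall structure. The difference is that it solves a least-squares problem for each factor in turn instead of applying a multiplicative correction.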

Multidimensional Scaling

Multidimensional scaling allows you to visualize how near points are to each other for many kinds of distance or dissimilarity metrics and can produce a representation of data in a small number of dimensions.

Classical Multidimensional Scaling

Use cmdscale to perform classical (metric) multidimensional scaling, also known as principal coordinates analysis.
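The core of classical scaling is short enough to sketch. The Python code below (standard library only) works in two steps:

- It double-centers the squared distances: B = -(1/2) J D2 J, where D2 holds the squared distances and J = I - (1/n) 11'.
- It takes the top k eigenvectors of B, scaled by the square roots of their eigenvalues, as coordinates.

It uses power iteration with deflation instead of a full eigendecomposition. It assumes Euclidean distances, so the leading eigenvalues are nonnegative. It is not the cmdscale implementation, and `classical_mds` is a hypothetical name.

```python
import math
import random


def classical_mds(d, k=2, n_iter=500, seed=0):
    """Classical (metric) MDS: d is an n x n symmetric matrix of Euclidean
    distances; returns n points in k dimensions reproducing those distances."""
    n = len(d)
    sq = [[d[i][j] ** 2 for j in range(n)] for i in range(n)]
    row_mean = [sum(r) / n for r in sq]
    grand_mean = sum(row_mean) / n
    # Double centering; sq is symmetric, so column means equal row means.
    b = [[-0.5 * (sq[i][j] - row_mean[i] - row_mean[j] + grand_mean)
          for j in range(n)] for i in range(n)]
    rng = random.Random(seed)
    coords = [[0.0] * k for _ in range(n)]
    for c in range(k):
        # Random start: the constant vector is in B's null space, so avoid it.
        v = [rng.random() - 0.5 for _ in range(n)]
        for _ in range(n_iter):
            w = [sum(b[i][j] * v[j] for j in range(n)) for i in range(n)]
            norm = math.sqrt(sum(x * x for x in w))
            if norm < 1e-12:
                break  # nothing left to extract in this direction
            v = [x / norm for x in w]
        # Rayleigh quotient v'Bv is the eigenvalue when v has unit length.
        lam = sum(v[i] * b[i][j] * v[j] for i in range(n) for j in range(n))
        scale = math.sqrt(max(lam, 0.0))
        for i in range(n):
            coords[i][c] = scale * v[i]
        # Deflate so the next pass finds the next eigenvector.
        b = [[b[i][j] - lam * v[i] * v[j] for j in range(n)] for i in range(n)]
    return coords


pts = [0.0, 1.0, 3.0, 6.0]
d = [[abs(p - q) for q in pts] for p in pts]
print(classical_mds(d, k=1))  # approximately the centered positions -2.5, -1.5, 0.5, 3.5, up to sign
```

The recovered configuration is unique only up to translation, rotation, and reflection. That is why the one-dimensional result can come out with either sign.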

Classical Multidimensional Scaling Applied to Nonspatial Distances

This example shows how to perform classical multidimensional scaling using the cmdscale function in Statistics and Machine Learning Toolbox™.

Nonclassical Multidimensional Scaling

This example shows how to visualize dissimilarity data using nonclassical forms of multidimensional scaling (MDS).

Nonclassical and Nonmetric Multidimensional Scaling

Perform nonclassical multidimensional scaling using mdscale.

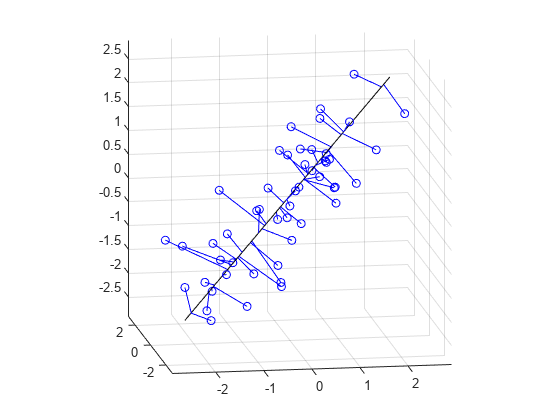

Procrustes Analysis

Procrustes analysis minimizes the differences in location between compared landmark data using the best shape-preserving Euclidean transformations.
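For two-dimensional landmarks, the best rotation plus uniform scaling has a closed form if each point is treated as a complex number. Rotating by θ and scaling by s is then just multiplication by c = s·e^(iθ).

The Python sketch below (standard library only) does the following:

- It centers both configurations.
- It solves the resulting least-squares problem for c.
- It reports the residual divided by the spread of the reference configuration about its centroid.

This is a simplified version, not the toolbox's procrustes function. It handles only 2-D data and does not consider reflections. `procrustes_2d` is a hypothetical name.

```python
def _sq_mod(z: complex) -> float:
    """Squared modulus |z|^2, computed without a square root."""
    return z.real * z.real + z.imag * z.imag


def procrustes_2d(x, y):
    """Fit landmarks Y to landmarks X (lists of (px, py) pairs, same order)
    using translation, rotation, and uniform scaling (no reflection).

    Returns (d, z): d is the residual sum of squares divided by the sum of
    squared deviations of X from its centroid (0 means identical shapes),
    and z holds the transformed Y points.
    """
    xs = [complex(px, py) for px, py in x]
    ys = [complex(px, py) for px, py in y]
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    xc = [p - mx for p in xs]
    yc = [p - my for p in ys]
    # Least-squares c minimizing sum |x - c*y|^2 is sum(x*conj(y)) / sum(|y|^2).
    c = sum(a * b.conjugate() for a, b in zip(xc, yc)) / sum(_sq_mod(b) for b in yc)
    fitted = [c * b for b in yc]
    resid = sum(_sq_mod(a - f) for a, f in zip(xc, fitted))
    d = resid / sum(_sq_mod(a) for a in xc)
    z = [((f + mx).real, (f + mx).imag) for f in fitted]
    return d, z


x = [(0.0, 0.0), (1.0, 0.0), (1.0, 2.0), (0.0, 1.0)]
# Y is X rotated by 90 degrees, scaled by 2, and shifted by (5, -3).
y = [(5.0 - 2.0 * py, -3.0 + 2.0 * px) for px, py in x]
d, z = procrustes_2d(x, y)
print(round(d, 12))  # 0.0
```

A mirror image of a non-collinear labeled configuration cannot be matched by rotation alone, so this sketch reports a positive dissimilarity for it.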

Compare Handwritten Shapes Using Procrustes Analysis

Use Procrustes analysis to compare two handwritten numerals.