trainSSDObjectDetector

Train an SSD deep learning object detector

Syntax

Description

Train Detector

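trainedDetector = trainSSDObjectDetector(trainingData,lgraph,options) trains a single shot multibox detector (SSD) using deep learning. You can train an SSD detector to detect multiple object classes.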

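This function requires that you have Deep Learning Toolbox™. It is recommended that you also have Parallel Computing Toolbox™ to use with a CUDA®-enabled NVIDIA® GPU. For information about the supported compute capabilities, see GPU Support by Release (Parallel Computing Toolbox).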

[trainedDetector,info] = trainSSDObjectDetector(___) also returns information on the training progress, such as the training loss and accuracy, for each iteration.

Resume Training a Detector

trainedDetector = trainSSDObjectDetector(trainingData,checkpoint,options) resumes training from a saved detector checkpoint.

Fine-Tune a Detector

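trainedDetector = trainSSDObjectDetector(trainingData,detector,options) continues training a previously trained SSD object detector. Use this syntax to train the detector on additional training data or to perform more training iterations to improve detector accuracy.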

Additional Properties

trainedDetector = trainSSDObjectDetector(___,Name,Value) uses additional options specified by one or more Name,Value pair arguments and any of the previous inputs.

Examples

Train SSD Object Detector

Load the training data for vehicle detection into the workspace.

data = load('vehicleTrainingData.mat');
trainingData = data.vehicleTrainingData;

Specify the directory in which the training samples are stored. Add the full path to the file names in the training data.

dataDir = fullfile(toolboxdir('vision'),'visiondata');
trainingData.imageFilename = fullfile(dataDir,trainingData.imageFilename);

Create an image datastore using the files from the table.

imds = imageDatastore(trainingData.imageFilename);

Create a box label datastore using the label columns from the table.

blds = boxLabelDatastore(trainingData(:,2:end));

Combine the datastores.

ds = combine(imds,blds);

Load a preinitialized SSD object detection network.

net = load('ssdVehicleDetector.mat');
lgraph = net.lgraph

lgraph = 
  LayerGraph with properties:

         Layers: [132×1 nnet.cnn.layer.Layer]
    Connections: [141×2 table]
     InputNames: {'input_1'}
    OutputNames: {'focal_loss'  'rcnnboxRegression'}

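Inspect the layers in the SSD network and their properties. You can also create the SSD network by following the steps given in Create SSD Object Detection Network.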

lgraph.Layers

ans = 
  132×1 Layer array with layers:

    1   'input_1'   Image Input   224×224×3 images with 'zscore' normalization
    2   'Conv1'   Convolution   32 3×3×3 convolutions with stride [2 2] and padding 'same'
    3   'bn_Conv1'   Batch Normalization   Batch normalization with 32 channels
    4   'Conv1_relu'   Clipped ReLU   Clipped ReLU with ceiling 6
    5   'expanded_conv_depthwise'   Grouped Convolution   32 groups of 1 3×3×1 convolutions with stride [1 1] and padding 'same'
    6   'expanded_conv_depthwise_BN'   Batch Normalization   Batch normalization with 32 channels
    7   'expanded_conv_depthwise_relu'   Clipped ReLU   Clipped ReLU with ceiling 6
    8   'expanded_conv_project'   Convolution   16 1×1×32 convolutions with stride [1 1] and padding 'same'
    9   'expanded_conv_project_BN'   Batch Normalization   Batch normalization with 16 channels
    10   'block_1_expand'   Convolution   96 1×1×16 convolutions with stride [1 1] and padding 'same'
    11   'block_1_expand_BN'   Batch Normalization   Batch normalization with 96 channels
    12   'block_1_expand_relu'   Clipped ReLU   Clipped ReLU with ceiling 6
    13   'block_1_depthwise'   Grouped Convolution   96 groups of 1 3×3×1 convolutions with stride [2 2] and padding 'same'
    14   'block_1_depthwise_BN'   Batch Normalization   Batch normalization with 96 channels
    15   'block_1_depthwise_relu'   Clipped ReLU   Clipped ReLU with ceiling 6
    16   'block_1_project'   Convolution   24 1×1×96 convolutions with stride [1 1] and padding 'same'
    17   'block_1_project_BN'   Batch Normalization   Batch normalization with 24 channels
    18   'block_2_expand'   Convolution   144 1×1×24 convolutions with stride [1 1] and padding 'same'
    19   'block_2_expand_BN'   Batch Normalization   Batch normalization with 144 channels
    20   'block_2_expand_relu'   Clipped ReLU   Clipped ReLU with ceiling 6
    21   'block_2_depthwise'   Grouped Convolution   144 groups of 1 3×3×1 convolutions with stride [1 1] and padding 'same'
    22   'block_2_depthwise_BN'   Batch Normalization   Batch normalization with 144 channels
    23   'block_2_depthwise_relu'   Clipped ReLU   Clipped ReLU with ceiling 6
    24   'block_2_project'   Convolution   24 1×1×144 convolutions with stride [1 1] and padding 'same'
    25   'block_2_project_BN'   Batch Normalization   Batch normalization with 24 channels
    26   'block_2_add'   Addition   Element-wise addition of 2 inputs
    27   'block_3_expand'   Convolution   144 1×1×24 convolutions with stride [1 1] and padding 'same'
    28   'block_3_expand_BN'   Batch Normalization   Batch normalization with 144 channels
    29   'block_3_expand_relu'   Clipped ReLU   Clipped ReLU with ceiling 6
    30   'block_3_depthwise'   Grouped Convolution   144 groups of 1 3×3×1 convolutions with stride [2 2] and padding 'same'
    31   'block_3_depthwise_BN'   Batch Normalization   Batch normalization with 144 channels
    32   'block_3_depthwise_relu'   Clipped ReLU   Clipped ReLU with ceiling 6
    33   'block_3_project'   Convolution   32 1×1×144 convolutions with stride [1 1] and padding 'same'
    34   'block_3_project_BN'   Batch Normalization   Batch normalization with 32 channels
    35   'block_4_expand'   Convolution   192 1×1×32 convolutions with stride [1 1] and padding 'same'
    36   'block_4_expand_BN'   Batch Normalization   Batch normalization with 192 channels
    37   'block_4_expand_relu'   Clipped ReLU   Clipped ReLU with ceiling 6
    38   'block_4_depthwise'   Grouped Convolution   192 groups of 1 3×3×1 convolutions with stride [1 1] and padding 'same'
    39   'block_4_depthwise_BN'   Batch Normalization   Batch normalization with 192 channels
    40   'block_4_depthwise_relu'   Clipped ReLU   Clipped ReLU with ceiling 6
    41   'block_4_project'   Convolution   32 1×1×192 convolutions with stride [1 1] and padding 'same'
    42   'block_4_project_BN'   Batch Normalization   Batch normalization with 32 channels
    43   'block_4_add'   Addition   Element-wise addition of 2 inputs
    44   'block_5_expand'   Convolution   192 1×1×32 convolutions with stride [1 1] and padding 'same'
    45   'block_5_expand_BN'   Batch Normalization   Batch normalization with 192 channels
    46   'block_5_expand_relu'   Clipped ReLU   Clipped ReLU with ceiling 6
    47   'block_5_depthwise'   Grouped Convolution   192 groups of 1 3×3×1 convolutions with stride [1 1] and padding 'same'
    48   'block_5_depthwise_BN'   Batch Normalization   Batch normalization with 192 channels
    49   'block_5_depthwise_relu'   Clipped ReLU   Clipped ReLU with ceiling 6
    50   'block_5_project'   Convolution   32 1×1×192 convolutions with stride [1 1] and padding 'same'
    51   'block_5_project_BN'   Batch Normalization   Batch normalization with 32 channels
    52   'block_5_add'   Addition   Element-wise addition of 2 inputs
    53   'block_6_expand'   Convolution   192 1×1×32 convolutions with stride [1 1] and padding 'same'
    54   'block_6_expand_BN'   Batch Normalization   Batch normalization with 192 channels
    55   'block_6_expand_relu'   Clipped ReLU   Clipped ReLU with ceiling 6
    56   'block_6_depthwise'   Grouped Convolution   192 groups of 1 3×3×1 convolutions with stride [2 2] and padding 'same'
    57   'block_6_depthwise_BN'   Batch Normalization   Batch normalization with 192 channels
    58   'block_6_depthwise_relu'   Clipped ReLU   Clipped ReLU with ceiling 6
    59   'block_6_project'   Convolution   64 1×1×192 convolutions with stride [1 1] and padding 'same'
    60   'block_6_project_BN'   Batch Normalization   Batch normalization with 64 channels
    61   'block_7_expand'   Convolution   384 1×1×64 convolutions with stride [1 1] and padding 'same'
    62   'block_7_expand_BN'   Batch Normalization   Batch normalization with 384 channels
    63   'block_7_expand_relu'   Clipped ReLU   Clipped ReLU with ceiling 6
    64   'block_7_depthwise'   Grouped Convolution   384 groups of 1 3×3×1 convolutions with stride [1 1] and padding 'same'
    65   'block_7_depthwise_BN'   Batch Normalization   Batch normalization with 384 channels
    66   'block_7_depthwise_relu'   Clipped ReLU   Clipped ReLU with ceiling 6
    67   'block_7_project'   Convolution   64 1×1×384 convolutions with stride [1 1] and padding 'same'
    68   'block_7_project_BN'   Batch Normalization   Batch normalization with 64 channels
    69   'block_7_add'   Addition   Element-wise addition of 2 inputs
    70   'block_8_expand'   Convolution   384 1×1×64 convolutions with stride [1 1] and padding 'same'
    71   'block_8_expand_BN'   Batch Normalization   Batch normalization with 384 channels
    72   'block_8_expand_relu'   Clipped ReLU   Clipped ReLU with ceiling 6
    73   'block_8_depthwise'   Grouped Convolution   384 groups of 1 3×3×1 convolutions with stride [1 1] and padding 'same'
    74   'block_8_depthwise_BN'   Batch Normalization   Batch normalization with 384 channels
    75   'block_8_depthwise_relu'   Clipped ReLU   Clipped ReLU with ceiling 6
    76   'block_8_project'   Convolution   64 1×1×384 convolutions with stride [1 1] and padding 'same'
    77   'block_8_project_BN'   Batch Normalization   Batch normalization with 64 channels
    78   'block_8_add'   Addition   Element-wise addition of 2 inputs
    79   'block_9_expand'   Convolution   384 1×1×64 convolutions with stride [1 1] and padding 'same'
    80   'block_9_expand_BN'   Batch Normalization   Batch normalization with 384 channels
    81   'block_9_expand_relu'   Clipped ReLU   Clipped ReLU with ceiling 6
    82   'block_9_depthwise'   Grouped Convolution   384 groups of 1 3×3×1 convolutions with stride [1 1] and padding 'same'
    83   'block_9_depthwise_BN'   Batch Normalization   Batch normalization with 384 channels
    84   'block_9_depthwise_relu'   Clipped ReLU   Clipped ReLU with ceiling 6
    85   'block_9_project'   Convolution   64 1×1×384 convolutions with stride [1 1] and padding 'same'
    86   'block_9_project_BN'   Batch Normalization   Batch normalization with 64 channels
    87   'block_9_add'   Addition   Element-wise addition of 2 inputs
    88   'block_10_expand'   Convolution   384 1×1×64 convolutions with stride [1 1] and padding 'same'
    89   'block_10_expand_BN'   Batch Normalization   Batch normalization with 384 channels
    90   'block_10_expand_relu'   Clipped ReLU   Clipped ReLU with ceiling 6
    91   'block_10_depthwise'   Grouped Convolution   384 groups of 1 3×3×1 convolutions with stride [1 1] and padding 'same'
    92   'block_10_depthwise_BN'   Batch Normalization   Batch normalization with 384 channels
    93   'block_10_depthwise_relu'   Clipped ReLU   Clipped ReLU with ceiling 6
    94   'block_10_project'   Convolution   96 1×1×384 convolutions with stride [1 1] and padding 'same'
    95   'block_10_project_BN'   Batch Normalization   Batch normalization with 96 channels
    96   'block_11_expand'   Convolution   576 1×1×96 convolutions with stride [1 1] and padding 'same'
    97   'block_11_expand_BN'   Batch Normalization   Batch normalization with 576 channels
    98   'block_11_expand_relu'   Clipped ReLU   Clipped ReLU with ceiling 6
    99   'block_11_depthwise'   Grouped Convolution   576 groups of 1 3×3×1 convolutions with stride [1 1] and padding 'same'
    100   'block_11_depthwise_BN'   Batch Normalization   Batch normalization with 576 channels
    101   'block_11_depthwise_relu'   Clipped ReLU   Clipped ReLU with ceiling 6
    102   'block_11_project'   Convolution   96 1×1×576 convolutions with stride [1 1] and padding 'same'
    103   'block_11_project_BN'   Batch Normalization   Batch normalization with 96 channels
    104   'block_11_add'   Addition   Element-wise addition of 2 inputs
    105   'block_12_expand'   Convolution   576 1×1×96 convolutions with stride [1 1] and padding 'same'
    106   'block_12_expand_BN'   Batch Normalization   Batch normalization with 576 channels
    107   'block_12_expand_relu'   Clipped ReLU   Clipped ReLU with ceiling 6
    108   'block_12_depthwise'   Grouped Convolution   576 groups of 1 3×3×1 convolutions with stride [1 1] and padding 'same'
    109   'block_12_depthwise_BN'   Batch Normalization   Batch normalization with 576 channels
    110   'block_12_depthwise_relu'   Clipped ReLU   Clipped ReLU with ceiling 6
    111   'block_12_project'   Convolution   96 1×1×576 convolutions with stride [1 1] and padding 'same'
    112   'block_12_project_BN'   Batch Normalization   Batch normalization with 96 channels
    113   'block_12_add'   Addition   Element-wise addition of 2 inputs
    114   'block_13_expand'   Convolution   576 1×1×96 convolutions with stride [1 1] and padding 'same'
    115   'block_13_expand_BN'   Batch Normalization   Batch normalization with 576 channels
    116   'block_13_expand_relu'   Clipped ReLU   Clipped ReLU with ceiling 6
    117   'block_13_depthwise'   Grouped Convolution   576 groups of 1 3×3×1 convolutions with stride [2 2] and padding 'same'
    118   'block_13_depthwise_BN'   Batch Normalization   Batch normalization with 576 channels
    119   'block_13_depthwise_relu'   Clipped ReLU   Clipped ReLU with ceiling 6
    120   'block_13_project'   Convolution   160 1×1×576 convolutions with stride [1 1] and padding 'same'
    121   'block_13_project_BN'   Batch Normalization   Batch normalization with 160 channels
    122   'block_13_project_BN_anchorbox1'   Anchor Box Layer.   Anchor Box Layer.
    123   'block_13_project_BN_mbox_conf_1'   Convolution   10 3×3 convolutions with stride [1 1] and padding [1 1 1 1]
    124   'block_13_project_BN_mbox_loc_1'   Convolution   20 3×3 convolutions with stride [1 1] and padding [1 1 1 1]
    125   'block_10_project_BN_anchorbox2'   Anchor Box Layer.   Anchor Box Layer.
    126   'block_10_project_BN_mbox_conf_1'   Convolution   10 3×3 convolutions with stride [1 1] and padding [1 1 1 1]
    127   'block_10_project_BN_mbox_loc_1'   Convolution   20 3×3 convolutions with stride [1 1] and padding [1 1 1 1]
    128   'confmerge'   SSD Merge Layer.   SSD Merge Layer.
    129   'locmerge'   SSD Merge Layer.   SSD Merge Layer.
    130   'anchorBoxSoft'   Softmax   softmax
    131   'focal_loss'   Focal Loss Layer.   Focal Loss Layer.
    132   'rcnnboxRegression'   Box Regression Output   smooth-l1 loss

Configure the network training options.

options = trainingOptions('sgdm', ...
    'InitialLearnRate',5e-5, ...
    'MiniBatchSize',16, ...
    'Verbose',true, ...
    'MaxEpochs',50, ...
    'Shuffle','every-epoch', ...
    'VerboseFrequency',10, ...
    'CheckpointPath',tempdir);

Train the SSD network.

[detector,info] = trainSSDObjectDetector(ds,lgraph,options);

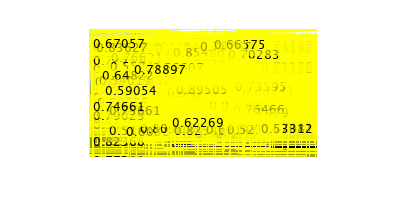

*************************************************************************
Training an SSD Object Detector for the following object classes:

* vehicle

Training on single CPU.
Initializing input data normalization.
|==============================================================================================================|
| Epoch | Iteration | Time Elapsed (hh:mm:ss) | Mini-batch Loss | Mini-batch Accuracy | Mini-batch RMSE | Base Learning Rate |
|==============================================================================================================|
| 1 | 1 | 00:00:18 | 0.8757 | 48.51% | 1.47 | 5.0000e-05 |
| 1 | 10 | 00:01:43 | 0.8386 | 48.35% | 1.43 | 5.0000e-05 |
| 2 | 20 | 00:03:15 | 0.7860 | 48.87% | 1.37 | 5.0000e-05 |
| 2 | 30 | 00:04:42 | 0.6771 | 48.65% | 1.23 | 5.0000e-05 |
| 3 | 40 | 00:06:46 | 0.7129 | 48.43% | 1.28 | 5.0000e-05 |
| 3 | 50 | 00:08:37 | 0.5723 | 49.04% | 1.09 | 5.0000e-05 |
| 4 | 60 | 00:10:12 | 0.5632 | 48.72% | 1.08 | 5.0000e-05 |
| 4 | 70 | 00:11:25 | 0.5438 | 49.11% | 1.06 | 5.0000e-05 |
| 5 | 80 | 00:12:35 | 0.5277 | 48.48% | 1.03 | 5.0000e-05 |
| 5 | 90 | 00:13:39 | 0.4711 | 48.95% | 0.96 | 5.0000e-05 |
| 6 | 100 | 00:14:50 | 0.5063 | 48.72% | 1.00 | 5.0000e-05 |
| 7 | 110 | 00:16:11 | 0.4812 | 48.99% | 0.97 | 5.0000e-05 |
| 7 | 120 | 00:17:27 | 0.5248 | 48.53% | 1.04 | 5.0000e-05 |
| 8 | 130 | 00:18:33 | 0.4245 | 49.32% | 0.90 | 5.0000e-05 |
| 8 | 140 | 00:19:37 | 0.4889 | 48.87% | 0.98 | 5.0000e-05 |
| 9 | 150 | 00:20:47 | 0.4213 | 49.18% | 0.89 | 5.0000e-05 |
| 9 | 160 | 00:22:02 | 0.4753 | 49.45% | 0.97 | 5.0000e-05 |
| 10 | 170 | 00:23:13 | 0.4454 | 49.31% | 0.92 | 5.0000e-05 |
| 10 | 180 | 00:24:22 | 0.4378 | 49.26% | 0.92 | 5.0000e-05 |
| 11 | 190 | 00:25:29 | 0.4278 | 49.13% | 0.90 | 5.0000e-05 |
| 12 | 200 | 00:26:39 | 0.4494 | 49.77% | 0.93 | 5.0000e-05 |
| 12 | 210 | 00:27:45 | 0.4298 | 49.03% | 0.90 | 5.0000e-05 |
| 13 | 220 | 00:28:47 | 0.4296 | 49.86% | 0.90 | 5.0000e-05 |
| 13 | 230 | 00:30:05 | 0.3987 | 49.65% | 0.86 | 5.0000e-05 |
| 14 | 240 | 00:31:13 | 0.4042 | 49.46% | 0.87 | 5.0000e-05 |
| 14 | 250 | 00:32:20 | 0.4244 | 50.16% | 0.90 | 5.0000e-05 |
| 15 | 260 | 00:33:31 | 0.4374 | 49.72% | 0.93 | 5.0000e-05 |
| 15 | 270 | 00:34:38 | 0.4016 | 48.95% | 0.86 | 5.0000e-05 |
| 16 | 280 | 00:35:47 | 0.4289 | 49.44% | 0.91 | 5.0000e-05 |
| 17 | 290 | 00:36:58 | 0.3866 | 49.10% | 0.84 | 5.0000e-05 |
| 17 | 300 | 00:38:10 | 0.4077 | 49.59% | 0.87 | 5.0000e-05 |
| 18 | 310 | 00:39:24 | 0.3943 | 49.74% | 0.86 | 5.0000e-05 |
| 18 | 320 | 00:40:48 | 0.4206 | 49.99% | 0.89 | 5.0000e-05 |
| 19 | 330 | 00:41:53 | 0.4504 | 49.72% | 0.94 | 5.0000e-05 |
| 19 | 340 | 00:42:55 | 0.3449 | 50.38% | 0.78 | 5.0000e-05 |
| 20 | 350 | 00:44:01 | 0.3450 | 49.57% | 0.77 | 5.0000e-05 |
| 20 | 360 | 00:44:59 | 0.3769 | 50.24% | 0.83 | 5.0000e-05 |
| 21 | 370 | 00:46:05 | 0.3336 | 50.40% | 0.76 | 5.0000e-05 |
| 22 | 380 | 00:47:01 | 0.3453 | 49.27% | 0.78 | 5.0000e-05 |
| 22 | 390 | 00:48:04 | 0.4011 | 49.72% | 0.87 | 5.0000e-05 |
| 23 | 400 | 00:49:06 | 0.3307 | 50.32% | 0.75 | 5.0000e-05 |
| 23 | 410 | 00:50:03 | 0.3186 | 50.01% | 0.73 | 5.0000e-05 |
| 24 | 420 | 00:51:10 | 0.3491 | 50.43% | 0.78 | 5.0000e-05 |
| 24 | 430 | 00:52:17 | 0.3299 | 50.31% | 0.76 | 5.0000e-05 |
| 25 | 440 | 00:53:35 | 0.3326 | 50.78% | 0.76 | 5.0000e-05 |
| 25 | 450 | 00:54:42 | 0.3219 | 50.61% | 0.75 | 5.0000e-05 |
| 26 | 460 | 00:55:55 | 0.3090 | 50.59% | 0.71 | 5.0000e-05 |
| 27 | 470 | 00:57:08 | 0.3036 | 51.48% | 0.71 | 5.0000e-05 |
| 27 | 480 | 00:58:16 | 0.3359 | 50.43% | 0.76 | 5.0000e-05 |
| 28 | 490 | 00:59:24 | 0.3182 | 50.35% | 0.73 | 5.0000e-05 |
| 28 | 500 | 01:00:36 | 0.3265 | 50.71% | 0.76 | 5.0000e-05 |
| 29 | 510 | 01:01:44 | 0.3415 | 50.53% | 0.78 | 5.0000e-05 |
| 29 | 520 | 01:02:51 | 0.3126 | 51.15% | 0.73 | 5.0000e-05 |
| 30 | 530 | 01:03:59 | 0.3179 | 50.74% | 0.75 | 5.0000e-05 |
| 30 | 540 | 01:05:15 | 0.3032 | 50.83% | 0.72 | 5.0000e-05 |
| 31 | 550 | 01:06:25 | 0.2868 | 50.69% | 0.68 | 5.0000e-05 |
| 32 | 560 | 01:07:42 | 0.2716 | 50.85% | 0.66 | 5.0000e-05 |
| 32 | 570 | 01:08:53 | 0.3016 | 51.32% | 0.71 | 5.0000e-05 |
| 33 | 580 | 01:10:05 | 0.2624 | 51.35% | 0.63 | 5.0000e-05 |
| 33 | 590 | 01:11:12 | 0.3145 | 51.38% | 0.73 | 5.0000e-05 |
| 34 | 600 | 01:12:31 | 0.2949 | 51.28% | 0.70 | 5.0000e-05 |
| 34 | 610 | 01:13:46 | 0.3070 | 51.22% | 0.73 | 5.0000e-05 |
| 35 | 620 | 01:15:01 | 0.3119 | 51.49% | 0.73 | 5.0000e-05 |
| 35 | 630 | 01:16:14 | 0.2869 | 51.81% | 0.70 | 5.0000e-05 |
| 36 | 640 | 01:17:28 | 0.3401 | 51.28% | 0.78 | 5.0000e-05 |
| 37 | 650 | 01:18:40 | 0.3123 | 51.43% | 0.73 | 5.0000e-05 |
| 37 | 660 | 01:19:58 | 0.2954 | 51.27% | 0.71 | 5.0000e-05 |
| 38 | 670 | 01:21:12 | 0.2792 | 52.17% | 0.68 | 5.0000e-05 |
| 38 | 680 | 01:22:29 | 0.3225 | 51.36% | 0.76 | 5.0000e-05 |
| 39 | 690 | 01:23:41 | 0.2867 | 52.63% | 0.69 | 5.0000e-05 |
| 39 | 700 | 01:24:56 | 0.3067 | 51.52% | 0.73 | 5.0000e-05 |
| 40 | 710 | 01:26:13 | 0.2718 | 51.84% | 0.66 | 5.0000e-05 |
| 40 | 720 | 01:27:25 | 0.2888 | 52.03% | 0.70 | 5.0000e-05 |
| 41 | 730 | 01:28:42 | 0.2854 | 51.96% | 0.69 | 5.0000e-05 |
| 42 | 740 | 01:29:57 | 0.2744 | 51.18% | 0.67 | 5.0000e-05 |
| 42 | 750 | 01:31:10 | 0.2582 | 51.90% | 0.64 | 5.0000e-05 |
| 43 | 760 | 01:32:25 | 0.2586 | 52.48% | 0.64 | 5.0000e-05 |
| 43 | 770 | 01:33:35 | 0.2632 | 51.47% | 0.65 | 5.0000e-05 |
| 44 | 780 | 01:34:46 | 0.2532 | 51.58% | 0.63 | 5.0000e-05 |
| 44 | 790 | 01:36:07 | 0.2889 | 52.19% | 0.69 | 5.0000e-05 |
| 45 | 800 | 01:37:20 | 0.2551 | 52.35% | 0.63 | 5.0000e-05 |
| 45 | 810 | 01:38:27 | 0.2863 | 51.29% | 0.69 | 5.0000e-05 |
| 46 | 820 | 01:39:43 | 0.2700 | 52.58% | 0.67 | 5.0000e-05 |
| 47 | 830 | 01:40:54 | 0.3234 | 51.96% | 0.76 | 5.0000e-05 |
| 47 | 840 | 01:42:08 | 0.2819 | 52.88% | 0.69 | 5.0000e-05 |
| 48 | 850 | 01:43:23 | 0.2743 | 52.80% | 0.67 | 5.0000e-05 |
| 48 | 860 | 01:44:38 | 0.2365 | 52.21% | 0.60 | 5.0000e-05 |
| 49 | 870 | 01:45:58 | 0.2271 | 52.23% | 0.58 | 5.0000e-05 |
| 49 | 880 | 01:47:21 | 0.3006 | 52.23% | 0.72 | 5.0000e-05 |
| 50 | 890 | 01:48:35 | 0.2494 | 52.32% | 0.63 | 5.0000e-05 |
| 50 | 900 | 01:49:55 | 0.2383 | 53.51% | 0.61 | 5.0000e-05 |
|==============================================================================================================|
Detector training complete.
*************************************************************************
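The log reports 900 iterations over 50 epochs, which ties the iteration count to the mini-batch size and the number of training images. The following sketch works backward from those logged numbers. It assumes, as the trainingOptions documentation describes for MiniBatchSize, that training data that does not fill a final complete mini-batch is discarded each epoch, so that each epoch runs floor(numImages/MiniBatchSize) iterations; that this rule carries over to trainSSDObjectDetector is an assumption here.

```matlab
% Values taken from the training options and the log above.
miniBatchSize = 16;
maxEpochs     = 50;
totalIters    = 900;

% Iterations per epoch implied by the log.
itersPerEpoch = totalIters / maxEpochs;              % 18

% Assuming itersPerEpoch = floor(numImages / miniBatchSize), the training
% set must contain between minImages and maxImages images (inclusive).
minImages = itersPerEpoch * miniBatchSize;           % 288
maxImages = (itersPerEpoch + 1) * miniBatchSize - 1; % 303

fprintf('%d iterations per epoch -> %d to %d training images\n', ...
        itersPerEpoch, minImages, maxImages);
```

The same relationship explains the epoch column: with 18 iterations per epoch, iteration 20 falls in epoch 2 and iteration 100 falls in epoch 6, as logged.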

Inspect the properties of the detector.

detector

detector = 
  ssdObjectDetector with properties:

      ModelName: 'vehicle'
        Network: [1×1 DAGNetwork]
     ClassNames: {'vehicle'  'Background'}
    AnchorBoxes: {[5×2 double]  [5×2 double]}

You can verify the training accuracy by inspecting the training loss for each iteration.

figure
plot(info.TrainingLoss)
grid on
xlabel('Number of Iterations')
ylabel('Training Loss for Each Iteration')
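Because the mini-batch loss fluctuates from one iteration to the next (see the log above), a smoothed curve can make the downward trend easier to judge. A minimal sketch using movmean; to keep it self-contained it builds a hypothetical synthetic loss vector, which you would replace with loss = info.TrainingLoss after training. The 20-iteration window is an arbitrary choice.

```matlab
% Hypothetical stand-in for info.TrainingLoss so the snippet runs on its own:
% a decaying trend plus iteration-to-iteration noise.
loss = 0.8 * exp(-(1:900) / 300) + 0.2 + 0.03 * sin(1:900);

% Average over a sliding window of 20 iterations (arbitrary window length).
smoothLoss = movmean(loss, 20);

figure
plot(loss, 'Color', [0.7 0.7 0.7])
hold on
plot(smoothLoss, 'LineWidth', 1.5)
hold off
grid on
xlabel('Number of Iterations')
ylabel('Training Loss for Each Iteration')
legend('Raw', 'Moving average')
```

A longer window gives a smoother curve but reacts more slowly to genuine changes in the loss.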

Test the SSD detector on a test image.

img = imread('ssdTestDetect.png');

Run the SSD object detector on the image for vehicle detection.

[bboxes,scores] = detect(detector,img);

Display the detection results.

if ~isempty(bboxes)
    img = insertObjectAnnotation(img,'rectangle',bboxes,scores);
end
figure
imshow(img)
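If the detector returns low-confidence boxes, you may want to keep only detections above a score threshold before annotating the image. A minimal sketch using logical indexing; the bounding boxes, scores, and the threshold of 0.5 are hypothetical values, not outputs of the example above.

```matlab
% Hypothetical detections: one [x y width height] row per box,
% with one confidence score per row.
bboxes = [ 30  40 100 60;
          200  50  80 45;
          120 150  60 40];
scores = [0.92; 0.31; 0.67];

% Keep only the detections whose score meets the (arbitrary) threshold.
threshold = 0.5;
keep   = scores >= threshold;
bboxes = bboxes(keep, :);
scores = scores(keep);

disp(bboxes)
disp(scores)
```

The filtered bboxes and scores can then be passed to insertObjectAnnotation exactly as in the display step above.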

Input Arguments

trainingData - Labeled ground truth images

datastore

Labeled ground truth images, specified as a datastore or a table.

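If you use a datastore, your data must be set up so that calling the datastore with the read and readall functions returns a cell array or table with two or three columns. When the output contains two columns, the first column must contain bounding boxes, and the second column must contain labels, {boxes, labels}. When the output contains three columns, the second column must contain the bounding boxes, and the third column must contain the labels. In this case, the first column can contain any type of data. For example, the first column can contain images or point cloud data.

data: The first column can contain data, such as point cloud data or images.
boxes: The second column must be a cell array that contains M-by-5 matrices of bounding boxes of the form [xcenter, ycenter, width, height, yaw]. The vectors represent the location and size of the bounding boxes for the objects in each image.
labels: The third column must be a cell array that contains M-by-1 categorical vectors containing the object class names. All categorical data returned by the datastore must contain the same categories.

For more information, see Datastores for Deep Learning (Deep Learning Toolbox).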
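To make the three-column layout concrete, the following sketch assembles one hypothetical sample by hand and checks two of the requirements above: every box has exactly one label, and the label categories match those of another sample. The image size, box values, and the second sample are invented for illustration; in the example above, read on the combined datastore produces these rows for you.

```matlab
% Hypothetical sample in the three-column layout {data, boxes, labels}.
img    = zeros(224, 224, 3, 'uint8');                     % first column: here, an image
boxes  = [60 80 40 30 0; 150 120 50 35 0];                % M-by-5 box matrix, M = 2
labels = categorical({'vehicle'; 'vehicle'}, {'vehicle'}); % M-by-1 categorical
sample = {img, boxes, labels};

% Requirement 1: one label per bounding box.
rowsMatch = size(sample{2}, 1) == numel(sample{3});

% Requirement 2: all samples share the same categories (second sample is hypothetical).
otherLabels    = categorical({'vehicle'}, {'vehicle'});
sameCategories = isequal(categories(sample{3}), categories(otherLabels));

fprintf('rows match: %d, same categories: %d\n', rowsMatch, sameCategories);
```

Passing the full list of class names as the second argument to categorical keeps the category set identical across samples, even when a given image contains only some of the classes.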

lgraph - Layer graph

LayerGraph object

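Layer graph, specified as a LayerGraph object. The layer graph contains the architecture of the SSD multibox network. You can create this network by using the ssdLayers function, or you can create a custom network. For more information, see Getting Started with SSD Multibox Detection.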

detector - Previously trained SSD object detector

ssdObjectDetector object

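Previously trained SSD object detector, specified as an ssdObjectDetector object. Use this syntax to continue training a detector with additional training data or to perform more training iterations to improve detector accuracy.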

options - Training options

TrainingOptionsSGDM object | TrainingOptionsRMSProp object | TrainingOptionsADAM object

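Training options, specified as a TrainingOptionsSGDM, TrainingOptionsRMSProp, or TrainingOptionsADAM object returned by the trainingOptions (Deep Learning Toolbox) function. To specify the solver name and other options for network training, use the trainingOptions (Deep Learning Toolbox) function.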

Note

The trainSSDObjectDetector function does not support these training options:

Datastore inputs are not supported when you set the DispatchInBackground training option to true.

checkpoint - Saved detector checkpoint

ssdObjectDetector object

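Saved detector checkpoint, specified as an ssdObjectDetector object. To save the detector after every epoch, set the 'CheckpointPath' name-value argument of the trainingOptions function. Saving a checkpoint after every epoch is recommended because network training can take a few hours.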

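To load a checkpoint for a previously trained detector, load the MAT-file from the checkpoint path. For example, if the CheckpointPath property of the object specified by options is '/checkpath', you can load a checkpoint MAT-file by using this code.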

data = load('/checkpath/ssd_checkpoint__216__2018_11_16__13_34_30.mat');
checkpoint = data.detector;

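The name of the MAT-file includes the iteration number and the timestamp of when the detector checkpoint was saved. The detector is saved in the detector variable of the file. Pass the loaded checkpoint back into the trainSSDObjectDetector function to resume training: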

ssdDetector = trainSSDObjectDetector(trainingData,checkpoint,options);
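Because each checkpoint file name embeds the iteration number, you can select the most recent checkpoint programmatically instead of typing its name. A minimal sketch, assuming the files are named with an ssd_checkpoint__ prefix followed by the iteration number and a timestamp, separated by double underscores; the list of file names and the checkpointPath variable mentioned in the comments are hypothetical.

```matlab
% Hypothetical checkpoint file names. In practice you might collect them with
%   listing = dir(fullfile(checkpointPath, 'ssd_checkpoint__*.mat'));
%   files = {listing.name};
files = {'ssd_checkpoint__216__2018_11_16__13_34_30.mat', ...
         'ssd_checkpoint__648__2018_11_16__14_52_07.mat', ...
         'ssd_checkpoint__432__2018_11_16__14_13_41.mat'};

% Extract the iteration number that follows the 'ssd_checkpoint__' prefix.
tokens = cellfun(@(f) regexp(f, 'ssd_checkpoint__(\d+)__', 'tokens', 'once'), ...
                 files, 'UniformOutput', false);
iterations = cellfun(@(t) str2double(t{1}), tokens);

% The file with the largest iteration number is the most recent checkpoint.
[~, idx]   = max(iterations);
latestFile = files{idx};
fprintf('Latest checkpoint: %s (iteration %d)\n', latestFile, iterations(idx));
```

You could then load latestFile from the checkpoint folder and pass its detector variable to trainSSDObjectDetector in the same way as the checkpoint loaded earlier.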

Output Arguments

trainedDetector - Trained SSD multibox object detector

ssdObjectDetector object

Trained SSD object detector, returned as an ssdObjectDetector object. You can train an SSD object detector to detect multiple object classes.

info - Training progress information

structure array

Training progress information, returned as a structure array with eight fields. Each field corresponds to a stage of training.

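TrainingLoss: Training loss at each iteration, computed as the mean squared error (MSE) of the sum of the localization error, confidence loss, and classification loss. For more information about the training loss function, see Training Loss.
TrainingAccuracy: Training set accuracy at each iteration.
TrainingRMSE: Training root mean squared error (RMSE), calculated from the training loss at each iteration.
BaseLearnRate: Learning rate at each iteration.
ValidationLoss: Validation loss at each iteration.
ValidationAccuracy: Validation accuracy at each iteration.
ValidationRMSE: Validation RMSE at each iteration.
FinalValidationLoss: Final validation loss at the end of training.
FinalValidationRMSE: Final validation RMSE at the end of training.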

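Each field is a numeric vector with one element per training iteration. Values that have not been calculated at a specific iteration are assigned a value of NaN. The structure contains the ValidationLoss, ValidationAccuracy, ValidationRMSE, FinalValidationLoss, and FinalValidationRMSE fields only when options specifies validation data.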
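Because iterations without a computed value hold NaN, and the validation fields may be absent altogether, code that summarizes info should check for both. A minimal sketch; the small info structure below is a hypothetical stand-in for the output of trainSSDObjectDetector, with invented values.

```matlab
% Hypothetical stand-in for the info output; values are invented.
info = struct();
info.TrainingLoss   = [0.88 0.84 0.79 0.68 0.71];
info.ValidationLoss = [0.90 NaN  NaN  0.73 NaN ];

% Lowest training loss and the iteration at which it occurred.
[minTrainLoss, minIter] = min(info.TrainingLoss);
fprintf('Lowest training loss %.2f at iteration %d\n', minTrainLoss, minIter);

% Validation fields exist only when options specifies validation data.
if isfield(info, 'ValidationLoss')
    % NaN marks iterations at which validation loss was not computed.
    validIters  = find(~isnan(info.ValidationLoss));
    lastValLoss = info.ValidationLoss(validIters(end));
    fprintf('Validation computed at iterations %s; last value %.2f\n', ...
            mat2str(validIters), lastValLoss);
end
```

Note that min and max ignore NaN values by default, whereas mean does not; use mean(info.ValidationLoss,'omitnan') to average only the computed entries.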

References

W. Liu, D. Anguelov, D. Erhan, C. Szegedy, S. Reed, C. Fu, and A. C. Berg. "SSD: Single Shot MultiBox Detector." European Conference on Computer Vision (ECCV), Springer Verlag, 2016.

